Building the Miniverse

I've been experimenting with OpenAI's new functions API recently, mostly in the context of personal automation, which is something I've explored before without the API (more on that in the future). However, I thought it might also be interesting to give NPCs in a virtual world a more robust brain, like in the recent Stanford paper. This came in part out of the thinking in yesterday's post.

The Stanford approach had many layers of complexity, and they were attempting to create something close to real human behaviour. I'm less interested in that, and would instead like to design a much more tightly constrained environment based on simple rules. I think finding the right balance there leads to the most interesting emergent results.

So my first goal was to create a very tightly scoped environment. I decided to start with a 32x32 grid, made of emojis, with 5 agents randomly spawned. The edge of the grid is made of walls so they don't fall off.
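As a rough illustration of that setup, here is a minimal sketch of a walled emoji grid with randomly placed agents. The specific emojis, the `make_world` and `render` names, and the dict layout for agents are all assumptions for the example, not the project's actual code.

```python
import random

# Hypothetical emoji choices for the sketch
WALL = "🧱"
EMPTY = "⬜"
AGENT = "🙂"

def make_world(size=32, n_agents=5, seed=None):
    """Build a size x size grid with a wall border and spawn agents inside it."""
    rng = random.Random(seed)
    grid = [
        [WALL if x in (0, size - 1) or y in (0, size - 1) else EMPTY
         for x in range(size)]
        for y in range(size)
    ]
    # Only interior cells are valid spawn points; sample() guarantees no overlap
    interior = [(x, y) for y in range(1, size - 1) for x in range(1, size - 1)]
    agents = [
        {"id": i, "x": x, "y": y}
        for i, (x, y) in enumerate(rng.sample(interior, n_agents))
    ]
    return grid, agents

def render(grid, agents):
    """Draw the agents on a copy of the grid and return it as text."""
    rows = [row[:] for row in grid]
    for agent in agents:
        rows[agent["y"]][agent["x"]] = AGENT
    return "\n".join("".join(row) for row in rows)

if __name__ == "__main__":
    grid, agents = make_world(seed=42)
    print(render(grid, agents))
```

Keeping the walls as ordinary cells in the grid means the "don't fall off" rule needs no special bounds checks later on.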

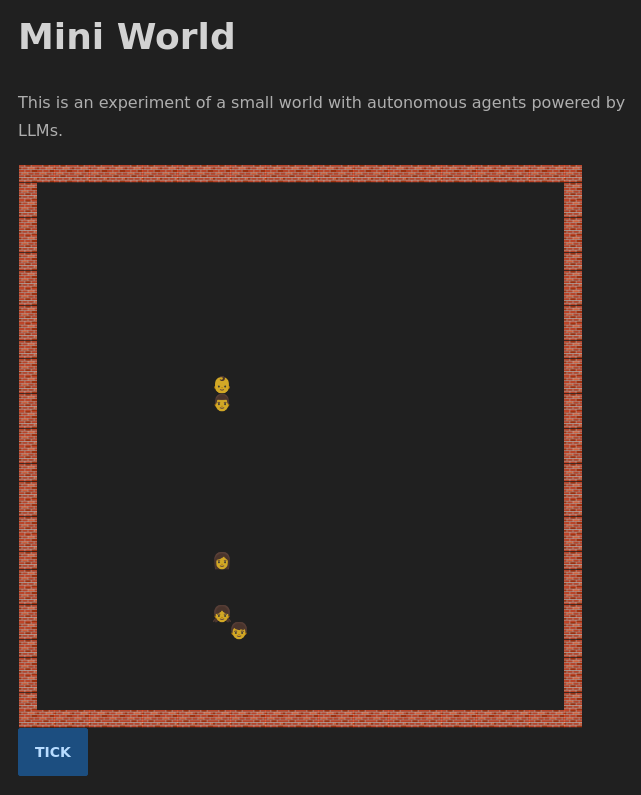

Agents after they had walked towards each other for a chat

When I was originally scoping this out, I thought I would add mechanisms for interacting with items too. These items could perhaps be summoned in some way. I also built a small API for getting the nearest emoji to an item's text, which is still up at e.g. https://gen.amar.io/emoji/green apple (replace "green apple" with whatever). It caches the emojis too, so overall it's not expensive to run.

I also explored various models for generating emoji-like images, for the more fantastical items, and landed on emoji diffusion. It was at this point that I realised I was quickly losing control of the scope, so I decided to focus on NPCs only, with no items.

Each simulation step (tick) would iterate over all agents and compute their actions. I planned for these possible actions:

- Do nothing

- Step forward

- Step back

- Step left

- Step right

- Say X

- Remember X (doesn’t end round)

I wanted the response from OpenAI to only be function calls, which unfortunately you can't control, so I had to add to the prompt `You MUST perform a single function in response to the above information.` If I get a bad response, I either retry or fall back to "do nothing", depending.
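The retry-or-fall-back logic might look something like the sketch below. It assumes the model's reply arrives as a dict with an optional `function_call` entry holding a `name` and a JSON string of `arguments`; `ask_model`, `choose_action` and the action names are hypothetical stand-ins, and the real request to OpenAI is left out entirely.

```python
import json

def choose_action(ask_model, allowed, max_retries=2):
    """Ask the model for an action, retrying on bad replies.

    ask_model: hypothetical zero-argument callable that returns the reply message
    allowed:   set of function names the agent may call this tick
    """
    for _ in range(max_retries + 1):
        message = ask_model()
        call = message.get("function_call") if isinstance(message, dict) else None
        # Plain-text replies and unknown function names count as bad responses
        if not call or call.get("name") not in allowed:
            continue
        try:
            args = json.loads(call.get("arguments") or "{}")
        except (ValueError, TypeError):
            continue  # arguments were not valid JSON
        if not isinstance(args, dict):
            continue
        return {"name": call["name"], "arguments": args}
    # Out of retries: fall back to doing nothing
    return {"name": "do_nothing", "arguments": {}}
```

Passing the model call in as a function keeps the validation testable without touching the network.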

The prompt contained some basic info, a goal, the agent's surroundings, the agent's memory (a truncated list of "facts"), and information on events of the past round. I found that I couldn't quite rely on OpenAI to make good choices, so I selectively build the list of an agent's capabilities on the fly each tick. E.g. if there's nobody within speaking distance, we don't even give the agent the ability to speak. If there's a wall ahead of the agent, we don't even give the agent the chance to step forward. And if the agent just spoke, they lose the ability to speak again for the following round, or else the agents speak over each other.
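Here is a small sketch of how that per-tick filtering could work. The action names, the fixed "forward means up" orientation, the `#` wall marker and the speaking range of 2 cells are assumptions made for the example.

```python
WALL = "#"  # hypothetical wall marker for this sketch

# Assumption: "forward" is up the grid, with no per-agent facing direction
MOVES = {
    "step_forward": (0, -1),
    "step_back": (0, 1),
    "step_left": (-1, 0),
    "step_right": (1, 0),
}

def available_actions(agent, grid, others, just_spoke, speak_range=2):
    """Return the function names offered to the model for this agent this tick."""
    actions = ["do_nothing"]
    for name, (dx, dy) in MOVES.items():
        # Only offer a step if the target cell is not a wall
        if grid[agent["y"] + dy][agent["x"] + dx] != WALL:
            actions.append(name)
    someone_nearby = any(
        max(abs(o["x"] - agent["x"]), abs(o["y"] - agent["y"])) <= speak_range
        for o in others
    )
    # No speaking into the void, and no speaking two rounds in a row
    if someone_nearby and not just_spoke:
        actions.append("say")
    return actions
```

Because the grid border is all walls, an agent is never on an edge cell, so the neighbour lookups stay in bounds.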

I had a lot of little problems like that. Overall, the more complicated the prompt, the more it goes off the rails. Originally, I tried `The message from God is: "Make friends"` as I envisioned interaction from the user coming in the form of divine intervention. But then some of the agents tried speaking to God and such, so I replaced that with `Your goal is: "Make friends"`, and later `Your goal is: "Walk to someone and have interesting conversations"` so they don't just walk randomly forever.
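To make the shape of the prompt a bit more concrete, here is a hedged sketch of how its pieces could be assembled. Only the goal line and the final instruction are taken from the prompts quoted in this post; the other wording, the `build_prompt` name and the agent dict layout are made up for the example.

```python
def build_prompt(agent, goal, surroundings, events, memory_limit=5):
    """Assemble a plain-text prompt for one agent for one tick."""
    lines = [
        f"Your name is {agent['name']}.",  # permanent fact, kept outside memory
        f'Your goal is: "{goal}"',
        "",
        "Your surroundings:",
        surroundings,
    ]
    # Memory is a truncated list of facts: keep only the most recent ones
    facts = agent.get("memory", [])[-memory_limit:]
    if facts:
        lines += ["", "You remember:"] + [f"- {fact}" for fact in facts]
    if events:
        lines += ["", "Last round:"] + [f"- {event}" for event in events]
    lines += [
        "",
        "You MUST perform a single function in response to the above information.",
    ]
    return "\n".join(lines)
```

Keeping the name outside the memory list means truncating memory can never make an agent forget who they are.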

They would also feel compelled to try and remember a lot. Often the facts they remembered were quite useless, like the goal, or their current position. The memory was small, so I tried prompt engineering to force them to treat memory as more precious, but it didn't quite work. Similarly, they would sometimes get stuck in endless loops, remembering the same useless fact over and over. I originally had all information in their memory (like their name), but I didn't want them to forget their name, so I moved the permanent facts outside of it.

Eventually, I removed the remember action, because it really wasn't helping. They could have good conversations, but everything else seemed a bit stupid, like I might as well program it procedurally instead of with LLMs.

I did however focus a lot on having a very robust architecture for this project, and made all the different parts easy to build on. The server does the simulation (in the future, asynchronously, but today, through the "tick" button) and stores world state in a big JSON object that I write to disk so I can rewind through past states. There is no DB, we simply read/write from/to JSON files as the world state changes. The structure of the data is flexible enough that I don't need to modify the schemas, and it can remain pretty forwards-compatible as I make additions, so I can run the server off of older states and it picks up those states gracefully.
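A minimal sketch of that storage approach, assuming one JSON file per tick and a handful of default fields. The file naming, the `save_state` and `load_state` helpers and the field names are all hypothetical.

```python
import copy
import json
from pathlib import Path

# Hypothetical defaults: fields added later get filled in for older snapshots
DEFAULTS = {"tick": 0, "agents": [], "events": []}

def save_state(state, directory):
    """Write the world state to its own file so older ticks stay available."""
    directory = Path(directory)
    directory.mkdir(parents=True, exist_ok=True)
    path = directory / f"tick-{state['tick']:06d}.json"
    path.write_text(json.dumps(state, ensure_ascii=False, indent=2), encoding="utf-8")
    return path

def load_state(directory, tick=None):
    """Load the latest snapshot, or a specific tick to rewind to."""
    directory = Path(directory)
    if tick is None:
        # Zero-padded names sort in tick order
        files = sorted(directory.glob("tick-*.json"))
        state = json.loads(files[-1].read_text(encoding="utf-8")) if files else {}
    else:
        path = directory / f"tick-{tick:06d}.json"
        state = json.loads(path.read_text(encoding="utf-8"))
    # Older files may lack newer fields; fill them in rather than fail
    for key, default in DEFAULTS.items():
        state.setdefault(key, copy.deepcopy(default))
    return state
```

Filling in defaults on load, rather than migrating files, is one simple way to let a newer server pick up older states gracefully.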

Anyway, I'll be experimenting some more and writing up more details on the different parts as they develop!