Log #projects

This page is a feed of all my #projects posts in reverse chronological order. You can subscribe to this feed in your favourite feed reader through the icon above.


Yousef Amar

Alien invader prongs

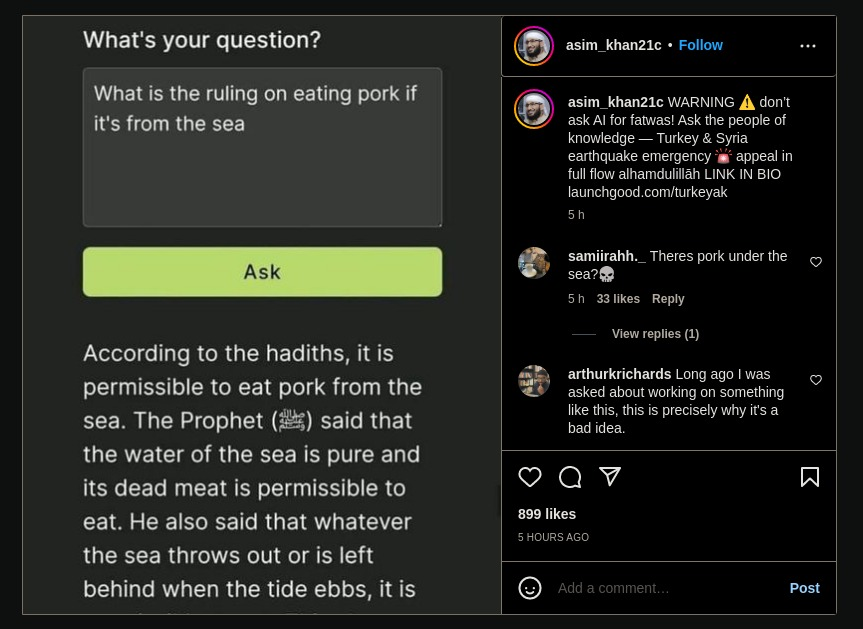

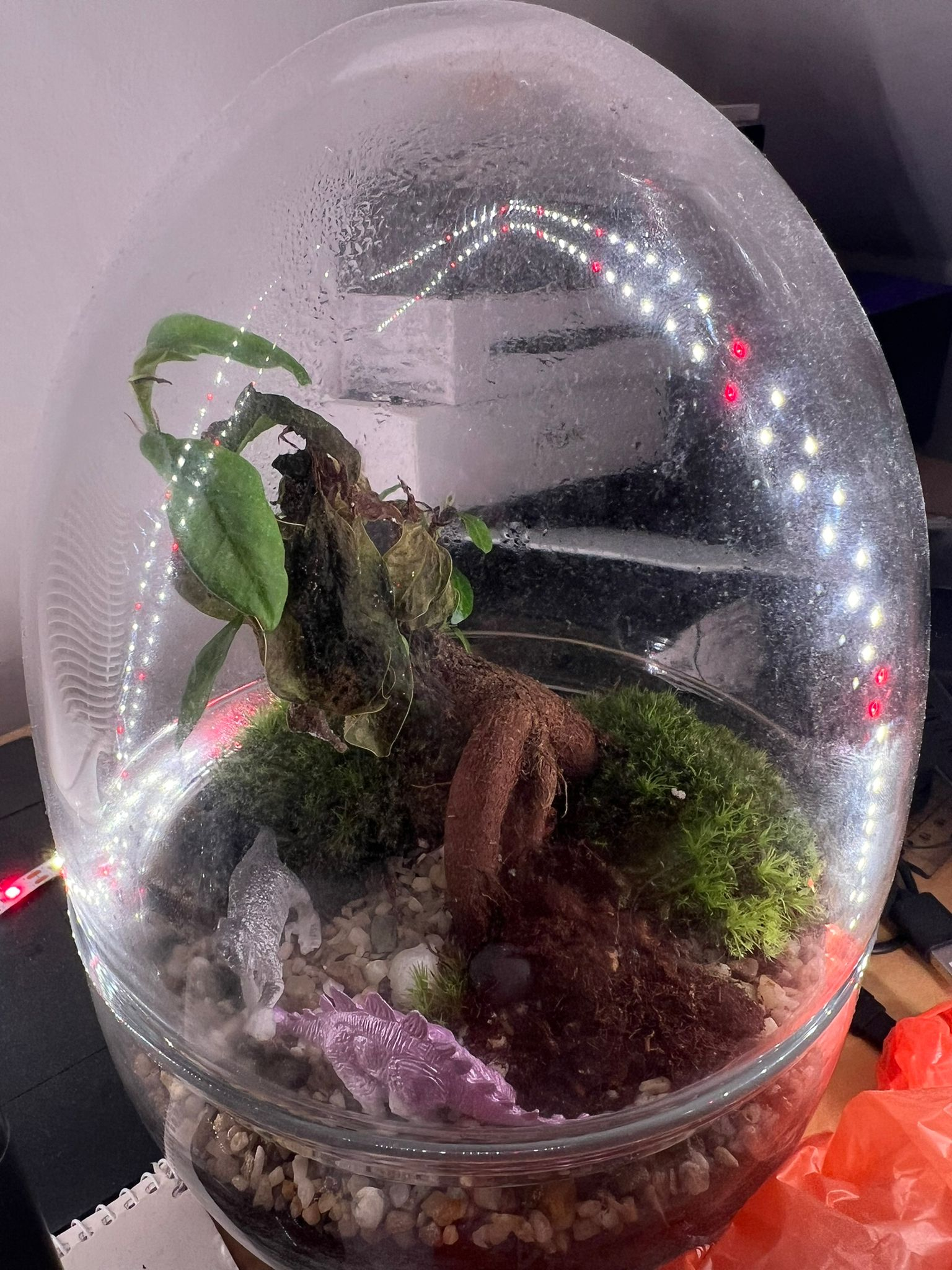

The ficus has been a bit thirsty, as you can tell by the leaves that have shrivelled slightly:

So I thought it's high time to first clean up the dead leaves, and also add water to the terrarium. The moss too seemed to be very dry (and I trimmed some of the long freaky moss prongs). I accidentally cut a little leaf, so I put it back into the soil, however it seems to be immune to decomposition:

This was all mid-September, and I was a tiny bit freaked out by these additional weird prongs:

Then, a little over a week ago, it started looking even more alien. The leaves were ok now (some browning?) but now these prongs looked sort of fungal. Don't like it.

A photo from today; that alien prong has grown further and is reaching the ground. I think I need to snip it before it consumes the terrarium!

Homemade lip balm

I dream of closed, self-sufficient systems. A few weeks ago, I visited the London Permaculture Festival as I'm very, very interested in permaculture. There, Hendon Soap had a stand. They were selling a DIY Lip Balm Kit.

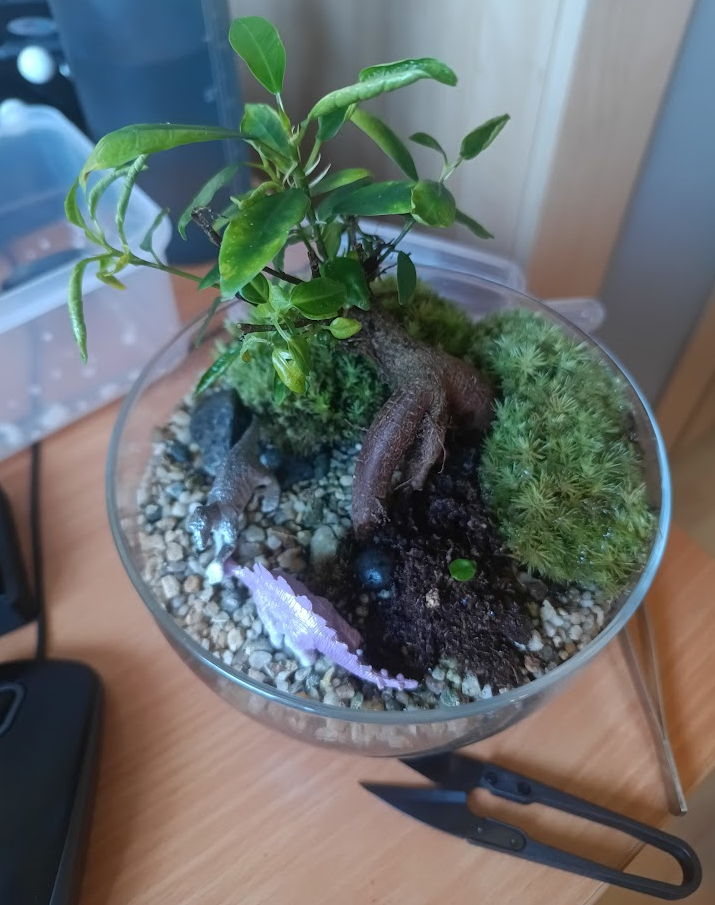

I use lip balm regularly, so it occurred to me I ought to learn about how it's made, and maybe make my own in the long run! Today I tried my hand at making it. It's not as easy as it looks! In theory you toss everything into a sauce pan, put in 60g of oil (I used vegetable oil), and once everything is melted, you put it in the tins and put those in the freezer as fast as possible so they cool rapidly (this is to avoid a grainy texture). In theory you can also add some edible essential oils if you want a scent.

Long story short: I made a huge mess. And the stuff is quite difficult to clean. I managed to do one tin approximately right.

The rest I was mostly able to salvage and store in a little jar. When I finish that first tin, I'll re-melt that and maybe this time use a little pot with a pouring tip to avoid spilling, as well as some kind of tool for moving the very hot tins into the freezer properly! It was so hot it melted the ice in my freezer away entirely, creating a nice little indentation to hold it.

Please don't judge the ungodly amount of ice cream -- there's a heatwave coming!

The hills we live on

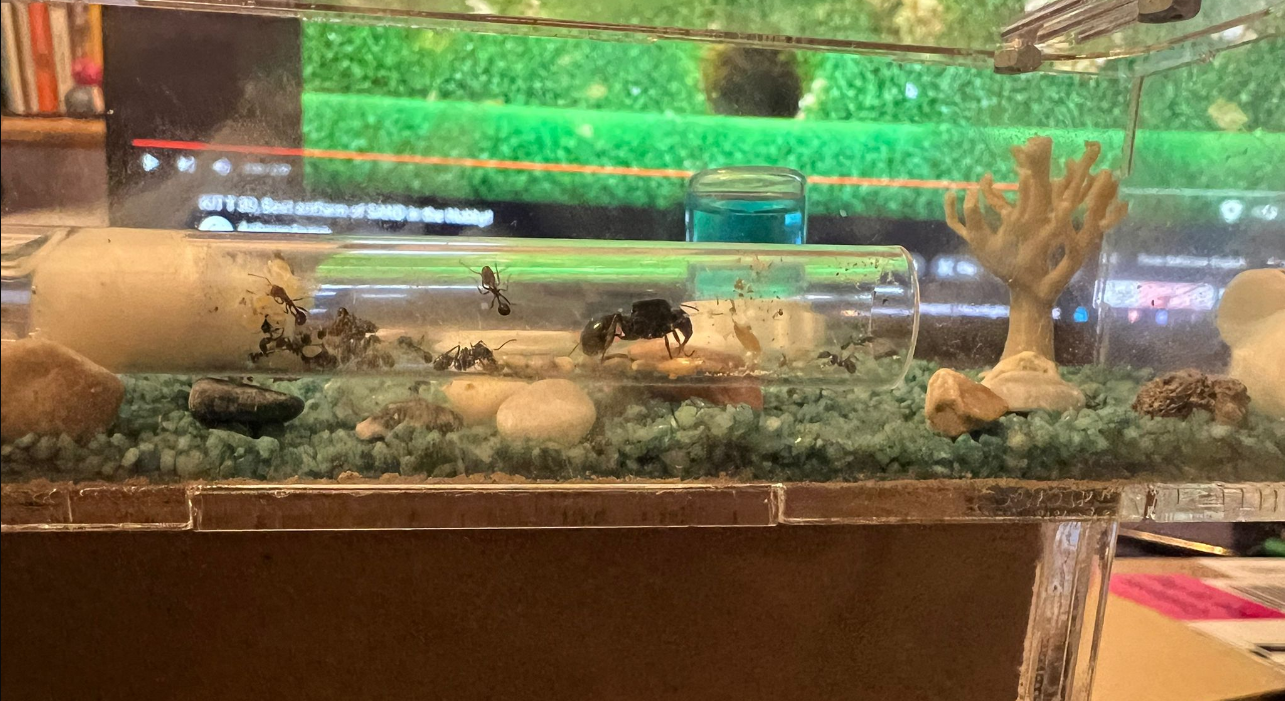

Just wanted to post a quick update on the ant farm. They're thriving and having all sorts of adventures!

Rising from the ashes

It's frightening how close I keep getting to killing my ficus -- they're supposed to be hardy! An update is long overdue.

First off, I moved the UV LEDs into an IKEA greenhouse. This made things much tidier and I have room for the upcoming project. The ficus had some strong growth up and to the left, all but touching the glass.

I also got these wooden drawers that you can kind of see in the back, with each one dedicated to a different project. The bottom one (taking up the whole width) is the horticulture one as that needs the most space.

In early May, I noticed that the leaves were getting brown. I thought it might be because the humidity was too high, so I thought I'd air it out a bit. Overnight it shrivelled up completely.

I was heartbroken and went to /r/Bonsai for advice. The only response I got was "it dried out and died". I did my best to try and get the humidity right and crossed my fingers. Almost two weeks later, I was delighted to see that it was still alive and had sprouted a new leaf! Can you see it?

And now, two months later, look at all this foliage!

I do plan to remove the dead leaves, but I wanted to wait for it to be a little stronger before I remove the glass again and whack things out of balance.

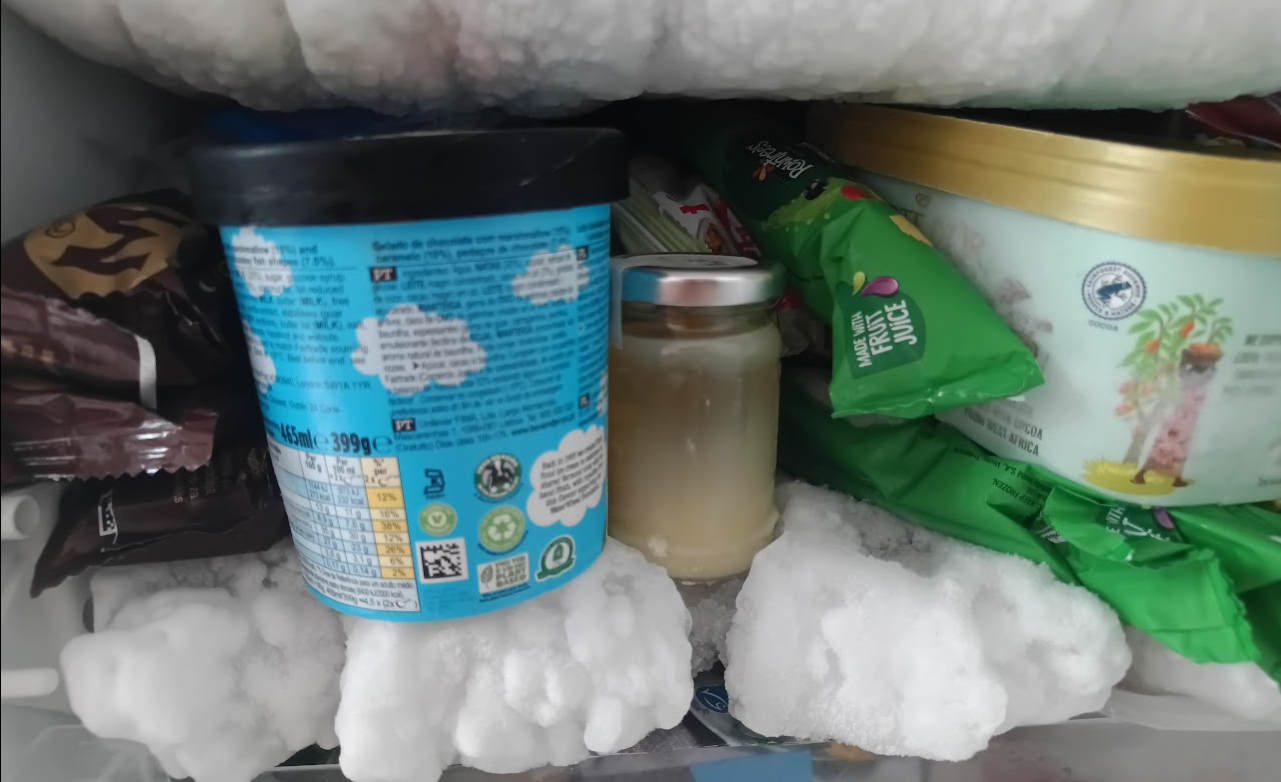

Rise of the messor ants

A few days ago I turned 31. Birthdays are not something I look forward to. However, Veronica gifted me an ant farm kit, which is exciting. I have always been fascinated by ants and the emergent behaviour of ant colonies, but never had the chance to observe that IRL through glass panes. In our flat in Egypt, ants were very common, so I would sometimes intentionally leave a bit of bread out on the kitchen counter just to watch them slowly take it apart and carry crumbs in a line back into the walls from whence they came.

When I was little, I also remember seeing ant farms that use a special type of transparent gel rather than sand, so you could see everything the ants were doing. I think I saw it on ThinkGeek, a now defunct online store, and wishing I could have it.

Well, today is the day that I can say I have a proper ant farm right here in my room!

The kit was surprisingly compact.

The fledgling colony made the long trip from Spain in a little test tube. Her majesty, Queen Gina, and her children, had water soaking in through the cotton on the left, some seeds as snacks while travelling, and took care of the larvae in the back of their little temporary home.

Before they could move in to their new home, I first had to make it nice and cosy for them. I started off by slowly soaking the sand over the course of the day, then decorating the top of their home. I also added their little sugar water dispenser and seed bowl. Finally, I dug a hole for them in the centre as a starting point.

I then set them free on their new home, but they stayed in the test tube for a while to build up the courage. One little brave ant ventured out, but was bringing back blue pebbles and clogging up the test tube once more. She really loved her pebbles. I later read that this is normal behaviour in order to make the queen feel more comfortable.

The moment I stopped watching they very quickly moved into their new home. I came to find the test tube empty and removed it. They had started digging and also moving the sesame seeds that Veronica had left them.

And then they started digging a LOT. I can see the beginnings of little chambers, and I read that the one at the very bottom is for storing seeds, as it's the least humid area, and they take advantage of that to prevent seeds from sprouting.

I'm excited to see how this colony develops! It's already developing much faster than I expected. I'm awaiting a new set of mixed bird seeds in the mail today, as I don't think they like sesame seeds that much, and Veronica also left them some broken-up spaghetti.

Ficus rollercoaster

I recently wrote about my ficus terrarium and how some kind of mould defeated my fittonia. Since then, I've learned a lot more about terrariums (including learning about ones where you keep frogs and reptiles, from a very friendly pet shop employee). The ficus however, which is meant to be quite hardy, did eventually have the same mould problem.

By early January, it was getting quite dire. No matter how much I tried to control the humidity, the leaves were all dying and there was thread-like mould forming like spider webs. I don't think I took a photo of this.

I did some more research and found a potential solution: little creatures called springtails (this was later confirmed to me by friendly pet shop guy). I ordered live Springtails off of Amazon (I didn't know you could do that) and they arrived quite quickly.

They arrive in some soil. The cardboard box they arrived in was smaller than the plastic container, so the act of squishing the plastic container into that box had caused it to open. It was kind of a messy situation. Regardless, I introduced them into the terrarium, slowly adding more over time. They're kind of hard to see with the naked eye, but if you really focus you can see them crawling around. They can also jump, which to me looks like they just disappear.

Fast-forward to today... it worked! They cleaned up the mould on the glass as well as the white threads that were attacking the plant. They seem to fit well into this ecosystem! I still have the container with the rest of them, which I might have a future use for (hint: my ant farm I will write about soon).

I am glad to report that new leaves have sprouted, and the health of the ficus looks like it's slowly improving!

Stay tuned to know more about my horticulture adventures, including a new plant project that I will write about soon! Hint:

First attempt at actually organising my bookmarks

In April 2023, I came across this article and found it very inspiring. I had already been experimenting with ways of visualising a digital library with Shelfiecat. The writer used t-SNE to "flatten" a higher-dimensional coordinated space into 2D in a coherent way. He noticed that certain genres would cluster together spatially. I experimented with these sorts of techniques a lot during my PhD and find them very cool. I just really love this area of trying to make complicated things tangible to people in a way that allows us to manipulate them in new ways.

My use case was my thousands of bookmarks. I always felt overwhelmed by the task of trying to make sense of them. I might as well have not bookmarked them as they all just sat there in piles (e.g. my Inoreader "read later" list, my starred GitHub repos, my historic Pocket bookmarks, etc). I had built a database of text summaries of a thousand or so of these bookmarks using Url summariser, and vector embeddings of these that I dumped into a big CSV file, which at the time cost me approximately $5 of OpenAI usage. This might seem steep, but I think at the time nobody had access to GPT-4 yet, and the pricing also wasn't as low. I had also accidentally bookmarked some full-length online books, and my strategy of recursively summarising long text (e.g. YouTube transcripts) didn't have an upper limit, so I had some book summaries as well.

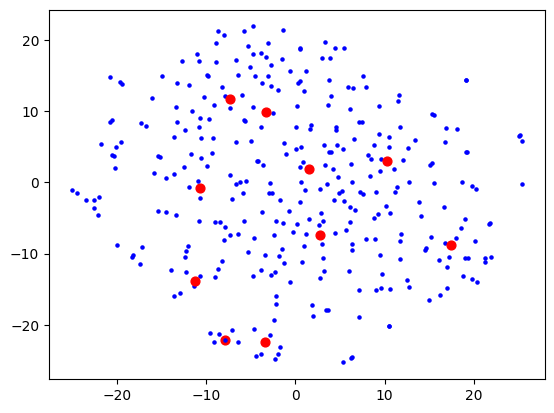

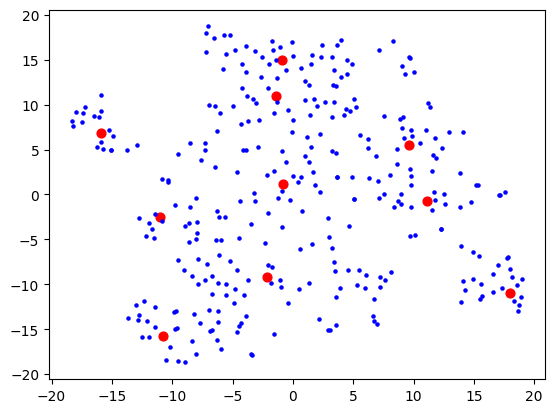

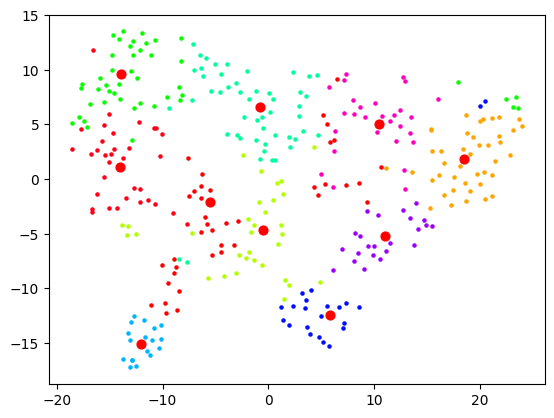

Anyway, I then proceeded to tinker with t-SNE and some basic clustering (using sklearn which is perfect for this sort of experimentation). I wanted to keep my data small until I found something that sort of works, as sometimes processing takes a while which isn't conducive to iterative experimentation! My first attempt was relatively disappointing:

Here, each dot is a bookmark. The red dots are not centroids like you would get from e.g. k-means clustering, but rather can be described as "the bookmark most representative of that cluster". I used BanditPAM for this, after reading about it via this HackerNews link, and thinking that it would be more beneficial for this use case.
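To make the centroid/medoid distinction concrete, here is a minimal sketch using plain NumPy. It is a naive all-pairs computation on made-up 2D points, not BanditPAM and not the code behind the plots; the function name `medoid_index` is purely illustrative.

```python
import numpy as np


def medoid_index(points):
    """Return the index of the point with the smallest total distance to all others."""
    # Pairwise Euclidean distances, shape (n, n).
    diffs = points[:, None, :] - points[None, :, :]
    dists = np.sqrt((diffs ** 2).sum(axis=-1))
    return int(dists.sum(axis=1).argmin())


# Made-up cluster: four points close together plus one outlier.
cluster = np.array([[0.0, 0.0], [1.0, 0.0], [2.0, 0.0], [3.0, 0.0], [20.0, 0.0]])

centroid = cluster.mean(axis=0)          # an average position, not an actual bookmark
medoid = cluster[medoid_index(cluster)]  # always one of the real points
print("centroid:", centroid, "medoid:", medoid)
```

The centroid gets dragged towards the outlier and lands on no real point, whereas the medoid is always an existing item, which is what makes it usable as "the most representative bookmark".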

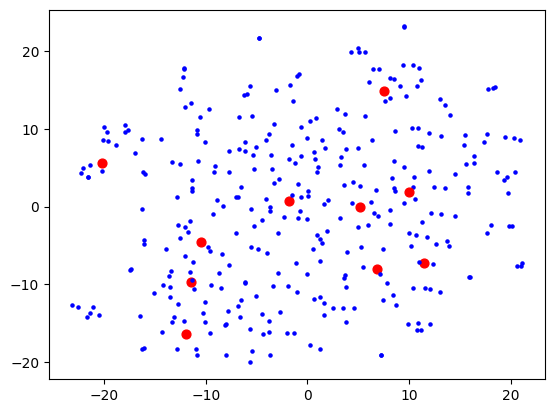

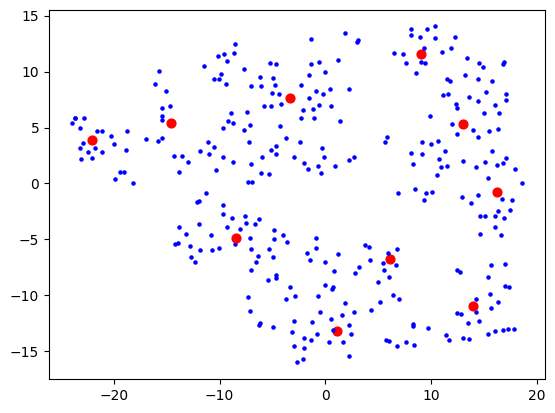

I was using OpenAI's Ada-2 for embeddings, which outputs vectors with 1536 dimensions, and I figured the step from 1536 to 2 is too much for t-SNE to give anything useful. I thought that maybe I needed a more clever dimensionality reduction technique (e.g. PCA) to get rid of the more useless dimensions first, before trying to visualise. This would also speed up processing as t-SNE does not scale well with the number of dimensions. Reduced to 50, I started seeing some clusters form:

Then 10:

Then 5:

5 wasn't much better than 10, so I stuck with 10. I figured my bookmarks weren't that varied anyway, so 10 dimensions are probably good enough to capture their variance. Probably the strongest component will be "how related to AI is this bookmark" and I expect to see a big AI cluster.
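The two-stage reduction described above can be sketched with sklearn roughly as follows. This is an illustration under assumptions, not the original notebook: the embeddings are random stand-ins, and the function name `reduce_then_embed` and the parameter values other than the 10 PCA components are made up.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.manifold import TSNE


def reduce_then_embed(embeddings, n_pca=10, seed=0):
    """Shrink embeddings with PCA, then lay them out in 2D with t-SNE."""
    # Step 1: keep only the n_pca directions with the most variance.
    reduced = PCA(n_components=n_pca, random_state=seed).fit_transform(embeddings)
    # Step 2: run t-SNE on the smaller vectors. Perplexity must stay below
    # the number of points, so it is capped for small samples.
    perplexity = min(30, len(reduced) - 1)
    coords = TSNE(
        n_components=2, perplexity=perplexity, init="pca", random_state=seed
    ).fit_transform(reduced)
    return reduced, coords


# Synthetic stand-in data: 120 fake "bookmarks" with 1536 dimensions each.
rng = np.random.default_rng(0)
fake_embeddings = rng.normal(size=(120, 1536))
reduced, coords = reduce_then_embed(fake_embeddings)
print(reduced.shape, coords.shape)
```

Random vectors will not form meaningful clusters, so this only shows the shape of the pipeline. Each row of `coords` is one dot in a scatter plot.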

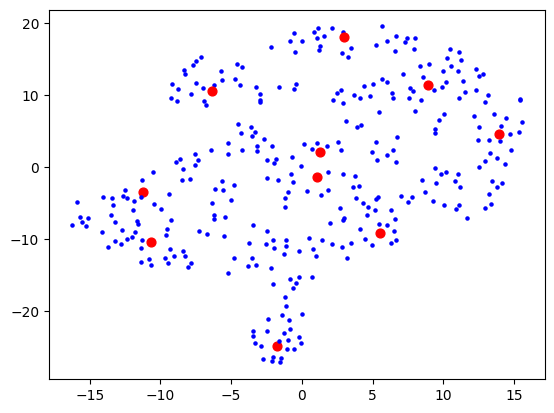

I then had a thought that maybe I should use truncated SVD instead of PCA, as that's better for sparse data, and I was picturing this space in my mind to really be quite sparse. The results looked a bit cleaner:

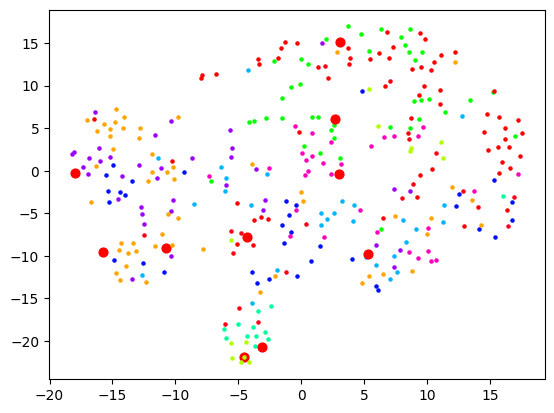

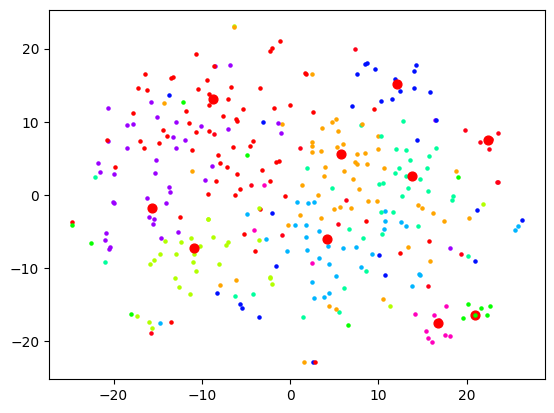

Now let's actually look at colouring these dots based on the cluster they're in. Remember that clustering and visualising are two separate things. So you can cluster and label before reducing dimensions for visualising. When I do the clustering over 1500+ dimensions, and colour them based on label, the visualisation is quite pointless:

When I reduce the dimensions first, then we get some clear segments, but the actual quality of the labelling is likely not as good:

And as expected, no dimension reduction at all gives complete chaos:

I started looking at the actual content of the clusters and came to a stark realisation: this is not how I would organise these bookmarks at all. Sure, the clusters were semantically related in some sense, but I did not want an AI learning resource to be grouped with an AI tool. In fact, did I want a top-level category to be "learning resources" and then that to be broken down by topic? Or did I want the topic "AI" to be top-level and then broken down into "learning resources", "tools", etc.

I realised I hadn't actually thought that much about what I wanted out of this (and this is also the main reason why I limited the scope of Machete to just bookmarks of products/tools). I realised that I would first need to define that, then probably look at other forms of clustering.

I started a fresh notebook, and ignored the page summaries. Instead, I took the page descriptions (from alt tags or title tags) which seemed in my case to be much more likely to say what the link is and not just what the content is about. This time I used SentenceTransformer (all-MiniLM-L6-v2), as Ada-2 would not have been a good choice here and, frankly, was probably a bad choice before too.

I knew that any given leaf category (say, /products/tools/development/frontend/) shouldn't have more than 10 bookmarks or so. If it passes that threshold, maybe it's time to go another level deeper and further split up those leaves. This means that my hierarchy "tree" would not be very balanced, as I didn't want directories full of hundreds of bookmarks.
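One way to read the "no more than 10 per leaf" rule is as a recursive split: keep bisecting any group that is too large. The sketch below does this with sklearn's `AgglomerativeClustering` on random stand-in vectors (384 dimensions, matching all-MiniLM-L6-v2's output size). It is an assumption about how such a rule could be implemented, not the approach from the notebook, and `split_until_small` and `leaves` are hypothetical helper names.

```python
import numpy as np
from sklearn.cluster import AgglomerativeClustering


def split_until_small(vectors, indices, max_leaf=10):
    """Recursively bisect a group of bookmarks until each leaf is small enough."""
    if len(indices) <= max_leaf:
        return {"leaf": list(indices)}
    # Two-way agglomerative split of just this group's vectors.
    labels = AgglomerativeClustering(n_clusters=2).fit_predict(vectors[indices])
    children = []
    for side in (0, 1):
        subset = [i for i, label in zip(indices, labels) if label == side]
        children.append(split_until_small(vectors, subset, max_leaf))
    return {"children": children}


def leaves(node):
    """Flatten the tree into a list of leaf groups."""
    if "leaf" in node:
        return [node["leaf"]]
    return [group for child in node["children"] for group in leaves(child)]


# Synthetic stand-in data: 60 fake bookmark embeddings.
rng = np.random.default_rng(1)
fake_vectors = rng.normal(size=(60, 384))
tree = split_until_small(fake_vectors, list(range(60)))
print([len(group) for group in leaves(tree)])
```

The resulting tree is unbalanced by design: dense regions get split more often, so they end up deeper than sparse ones.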

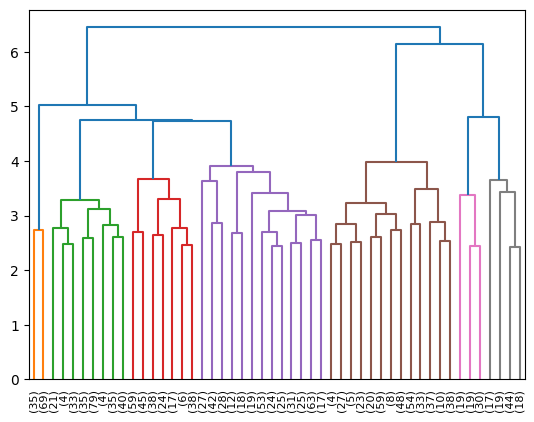

I started experimenting with Agglomerative Clustering, and visualising the results of that with a dendrogram:

Looking at where the bookmarks ended up, I still wasn't quite satisfied. Not to mention, there would need to be maybe some LLM passes to actually decide what the "directories" should be called. It was at this point that I thought that maybe I need to re-evaluate my approach. I was inadvertently conflating two separate problems:

- Figuring out a taxonomy tree of categories

- Actually sending bookmarks down the correct branches of that tree

There's a hidden third problem as well: potentially adjusting the tree every time you add a new bookmark. E.g. what if I suddenly started a fishing hobby? My historical bookmarks won't have that as a category.

I thought that perhaps (1) isn't strictly something I need to automate. I could just go through the one-time pain of skimming through my bookmarks and trying to come up with a relatively ok categorisation schema (that I could always readjust later) maybe based on some existing system like Johnny•Decimal. I could also ask GPT to come up with a sane structure given a sample of files.

As time went on, I also started to spot some auto-categorisers in the wild for messy filesystems that do the GPT prompting thing, and then also ask GPT where the files should go, then move them there. Most notably, this.

That seems to me so much easier and more reliable! So my next approach is probably going to be having each bookmark use GPT as a sort of "travel guide" as it propagates down the tree. "I'm a bookmark about X, which one of these folders should I move myself into next?" over and over until it reaches the final level. And when the directory gets too big, we ask GPT to divide it into two.
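The "travel guide" loop might look something like the sketch below. Everything here is hypothetical: the folder tree is made up, and `keyword_chooser` is a trivial stand-in for the step where an LLM would be asked which folder to pick next (no API call is shown).

```python
from typing import Callable, Dict, List


def route(description: str, tree: Dict, choose: Callable[[str, List[str]], str]) -> List[str]:
    """Walk a bookmark down the folder tree, asking `choose` at every level."""
    path = []
    node = tree
    while node:  # an empty dict marks a final-level folder
        options = sorted(node)
        picked = choose(description, options)
        if picked not in node:
            raise ValueError(f"chooser returned unknown folder: {picked!r}")
        path.append(picked)
        node = node[picked]
    return path


def keyword_chooser(description: str, options: List[str]) -> str:
    """Stand-in for the LLM: pick the first folder named in the description."""
    words = set(description.lower().split())
    for option in options:
        if option in words:
            return option
    return options[0]


# Made-up taxonomy: folder name -> subfolders.
taxonomy = {
    "products": {"tools": {"development": {"frontend": {}, "backend": {}}}},
    "learning": {"ai": {}, "fishing": {}},
}

print(route("frontend development tools products", taxonomy, keyword_chooser))
```

Validating the chooser's answer against the actual options matters more with a real LLM, which can return a folder name that does not exist.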

The LLM hammer seems to maybe win out here -- subject to further experimentation!

Sentinel gets a brain and listens to the internet

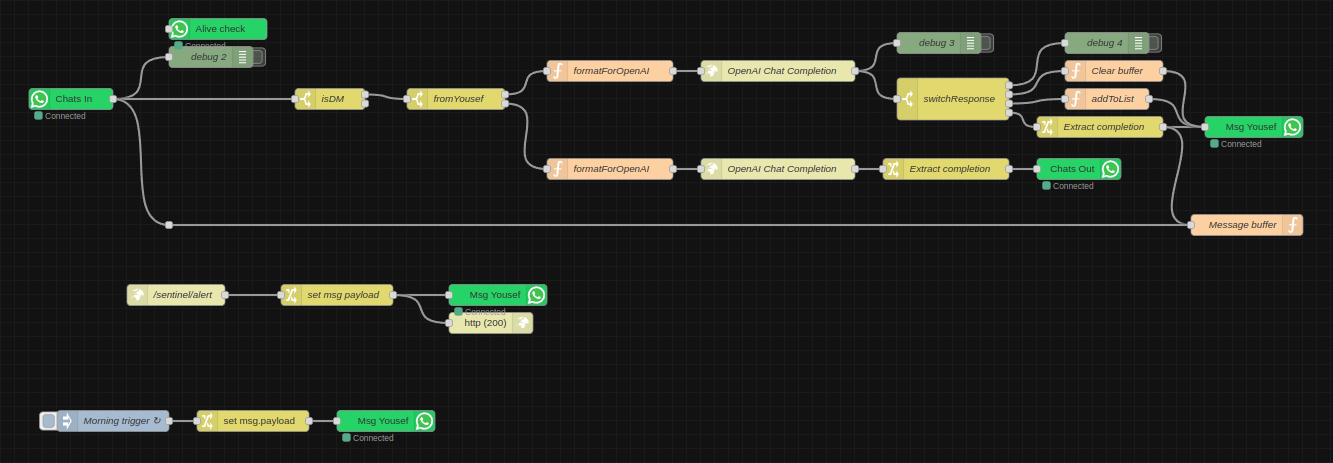

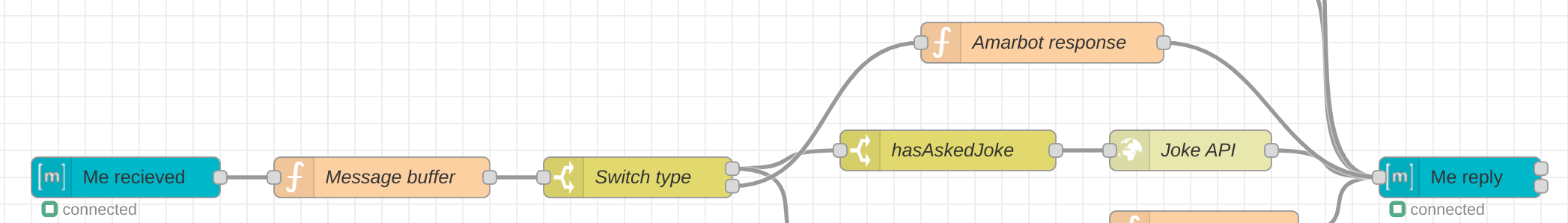

Sentinel, my AI personal assistant, has evolved a bit since I last wrote about him. I realised I hadn't written about that project in a while when it came up in conversation and the latest reference I had was from ages ago. The Node-RED logic looks like this now:

- Every morning he sends me a message (in the future this will be a status report summary). The goal of this was to mainly make sure the WhatsApp integration still works, since at the time it would crap out every once in a while and I wouldn't realise.

- I have an endpoint for arbitrary messages, which is simply a URL with a text GET parameter. I've sprinkled these around various projects, as it helps to have certain kinds of monitoring straight to my chats.

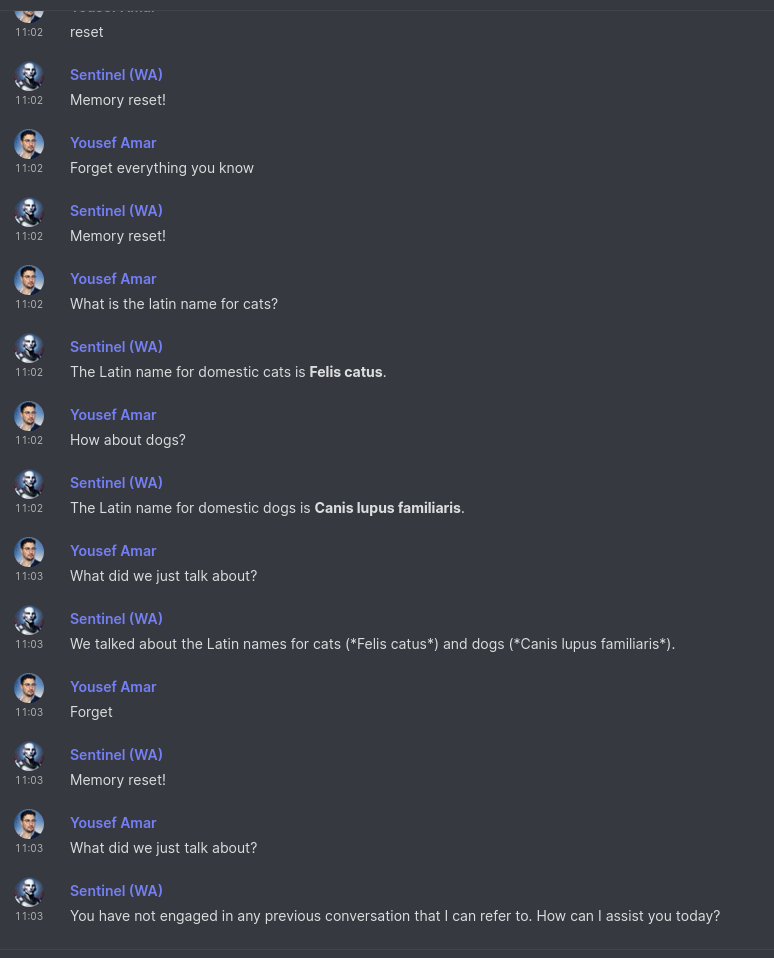

- He's plugged in to GPT-4-turbo now, so I usually just ask him questions instead of going all the way to ChatGPT. He can remember previous messages, until I explicitly ask him to forget. This is the equivalent of "New Chat" on ChatGPT and is controlled with the functions API via natural language, like the list-adder function which I already had before ("add Harry Potter to my movies list").
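As an illustration of the arbitrary-message endpoint from the list above, a project could ping it with a few lines of standard-library Python. The base URL here is a placeholder and the helper names are made up; only the `text` GET parameter comes from the description.

```python
from urllib.parse import urlencode
from urllib.request import urlopen


def build_notify_url(base_url, message):
    """Append the message as a URL-encoded `text` query parameter."""
    return base_url + "?" + urlencode({"text": message})


def notify(base_url, message, timeout=5):
    """Fire the GET request and return the HTTP status code."""
    with urlopen(build_notify_url(base_url, message), timeout=timeout) as response:
        return response.status


# Placeholder endpoint; the real URL is not given in the post.
print(build_notify_url("https://sentinel.example/message", "backup finished & verified"))
```

Encoding the message matters because spaces and characters like `&` would otherwise break the query string.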

As he's diverged from simply being an interface to my smart home stuff, as well as amarbot which is meant to replace me, I decided to start a new project log just for Sentinel-related posts.

Edit: this post inspired me to write more at length about chat as an interface here.

Ficus in a glass egg

I was recently offered condolences when someone found out about the unfortunate fate that befell my Bonsai plant. There's a small update!

A little over a month ago, Veronica and I went to a terrarium workshop and put together a little home for a little ficus. They're very hardy.

I picked everything out down to the colours of the sand layers and we carefully placed and aligned everything. It's not just pretty, but everything has a function. For example, the moss tells you when it's time to add (distilled!) water, and the large rocks can provide a surface for that water to evaporate (rather than get absorbed by the moss). We also added two dinosaurs, one patting the head of the other one.

As hardy as the ficus is, unfortunately the fittonia in the back was a lot more temperamental. I thought it maybe couldn't handle the humidity (the guides said you shouldn't let too much condensation build up on the glass) or it needed more light. It unfortunately started getting these strands of mould and eventually became a gloop.

I called the workshop instructor and he said there may be a number of reasons for this, asked for photos to troubleshoot, and he offered to replace it if I came by, but Shoreditch is kind of a trek from here. He said I should take it out so that it doesn't damage the ficus. In the meantime, I also got some grow lights so I can have a bit more control over the environment, rather than be at the mercy of UK winter weather!

Machete: a tool to organise my bookmarks

I've had a problem for a while around organising articles and bookmarks that I collect. I try not to bookmark things arbitrarily, and instead be mindful of the purpose of doing so, but I still have thousands of bookmarks, and they grow faster than I can process them.

I've tried automated approaches (for example summarising the text of these webpages and clustering vector embeddings of these) with limited success so far. I realised that maybe I should simply eat the frog and work my way through these, then develop a system for automatically categorising any new/inbound bookmark on the spot so they stay organised in the future.

A new problem was born: how can I efficiently manually organise my bookmarks? The hardest step in my opinion is having a good enough overview of the kinds of things I bookmark such that I can holistically create a hierarchy of categories, rather than the greedy approach where I tag things on the fly.

I decided to first focus on bookmarks that I would categorise as "tools", which are products or services that I currently use, may use in the future, want to look at to see if they're worth using, or may want to recommend to others in the future if they express a particular need. These are a bit more manageable as they're a small subset; the bigger part of my bookmarks are general knowledge resources (articles etc).

At the moment, I rely on my memory for the above use cases. Often I don't remember the name of a tool, but I can usually find it with a substring search of the summaries. Often I don't remember tools in the first place, and am surprised to find that I bookmarked something that I wish I would have remembered existed.

Eventually, I landed on a small script to convert all my notes into files, and then using different file browsers to drag and drop files into the right place. This was still very cumbersome.

On the front page of my public notes I have two different visualisations for browsing these notes. I find them quite useful for getting an overview. I thought it might be quite useful to use the circles view for organisation too. So I thought I should make a minimal file browser that displays files in this way, for easy organisation.

Originally, I took this as an excuse to try Tauri (a lighter Electron equivalent built on Rust that uses native WebViews instead of bundled Chromium), and last month I did get an MVP working, but then I realised that I'm making things hard on myself, especially since the development workflow for Tauri apps wasn't very smooth with my setup.

So instead, I decided to write this as an Obsidian plugin, since Obsidian is my main PKM tool. Below is a video demo of how far I got.

You can:

- Visualise files and directories in your vault

- Pan with middle-mouse

- Zoom with the scroll wheel

- Drag and drop nodes (files or directories) to move them

Unlike the visualisation on my front page, which uses word count for node size, this version uses file size. So far, it helps with organisation, although I would like to work on a few quality-of-life things to make this properly useful.

- A way to peek at the contents in a file without opening it

- Better transitions when the visualisation updates (e.g. things moving or changing size) so you don't get lost

- Mobile support (mainly alternative controls, like long-press to drag)

Disaster has struck

Towards the end of August, I went to visit my mum in Germany. Veronica would take care of my bonsai plant in my absence. One day she woke up to a gruesome murder scene (warning, graphic images to follow).

Veronica thought it got too top-heavy and broke, but Black Pine saplings don't just explode like that. I knew it was our cat Jinn. In a bunch of further images, I noticed what look like nibble marks too. I know she likes to nibble on grass too. I insisted that she immediately tell the cat off and show her what she did wrong. Cats are not that smart and won't be able to connect consequences to their actions unless they're tightly associated. In my opinion, Veronica is consistently too soft on the cat.

Veronica felt bad about the death of bonsai buddy (although she insists it can still be saved) but it seemed to me a 10 year journey was cut prematurely short. There's not much that can be done in my opinion except starting over. Since I've been back, I've been seeing if it can be saved, with not much success yet. This is what it looks like today:

When I came back, Jinn was very misbehaved for a bit. She knows she's not allowed to jump on my desk -- twice I caught her doing so and sniffing the bonsai corpse while she thought I was asleep. She knows that certain other areas are out of bounds for her, and she was pushing those boundaries. It took her a week or two of me being back for her to go back to normal and for us to be friends again.

R.I.P. bonsai buddy

Blast-off into Geminispace

Today was the "Build a Website in an Hour" IndieWeb event (more info here). I went in not quite knowing what I wanted to do. Then, right as we began, I remembered learning about Gemini and Astrobotany from Jo. I thought this would be the perfect opportunity to explore Gemini, and build a Gemini website!

Gemini is a simple protocol somewhere between HTTP and Gopher. It runs on top of TLS and is deliberately quite minimal. You normally need a Gemini client/browser in order to view gemini:// pages, but there's an HTTP proxy here.

I spent the first chunk of the hour trying to compile Gemini clients on my weird setup. Unfortunately, this proved to be quite tricky on arm64 (I also can't use snap or flatpak because of reasons that aren't important now). I eventually managed to install a terminal client called Amfora and could browse the Geminispace!

Then, I tried to get a server running. I started in Python because I thought this was going to be hard as-is, and I didn't want to take more risks than needed, but then I found that it's actually kind of easy (you only need socket and ssl). Once I had a server working in Python, I thought that I actually would prefer if I could run this off of the same server that this website (yousefamar.com) uses. Most of this website is static, but there's a small Node server that helps with rebuilding, wiki pages, and testimonial submission.

So for the next chunk of time, I implemented the server in Node. You can find the code for that here. I used the tls library to start a server and read/write text directly from/to a socket.

Everything worked fine on localhost with self-signed certificates that I generated with openssl, but for yousefamar.com I needed to piggyback off of the certificates I already have for that domain (LetsEncrypt over Caddy). I struggled with this for most of the rest of the time. I also had an issue where I forgot to end the socket after writing, causing requests to time out.
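For a sense of how little a Gemini server needs, here is a minimal Python sketch using only `socket` and `ssl`. It is not the code from the event: the page content and function names are made up, and the certificate paths are left to the caller. It relies on the Gemini basics (default port 1965, a single URL line as the request, and a `<status> <meta>` header line in the response).

```python
import socket
import ssl
from urllib.parse import urlparse

# Hypothetical in-memory pages, keyed by path.
PAGES = {"/": "# Hello from Geminispace\n"}


def build_response(request, pages):
    """Turn one raw Gemini request line into a full response."""
    try:
        url = urlparse(request.decode("utf-8").strip())
    except ValueError:  # covers undecodable bytes and malformed URLs
        return b"59 Bad request\r\n"
    if url.scheme != "gemini":
        return b"59 Bad request\r\n"
    body = pages.get(url.path or "/")
    if body is None:
        return b"51 Not found\r\n"
    return b"20 text/gemini\r\n" + body.encode("utf-8")


def serve(certfile, keyfile, host="0.0.0.0", port=1965):
    """Accept TLS connections forever, answering one request per connection."""
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    context.load_cert_chain(certfile, keyfile)
    with socket.create_server((host, port)) as server:
        with context.wrap_socket(server, server_side=True) as tls_server:
            while True:
                try:
                    conn, _addr = tls_server.accept()
                except OSError:
                    continue  # failed handshake; wait for the next client
                try:
                    request = b""
                    # A request is a URL of at most 1024 bytes plus CRLF.
                    while not request.endswith(b"\r\n") and len(request) < 1026:
                        chunk = conn.recv(1026 - len(request))
                        if not chunk:
                            break
                        request += chunk
                    conn.sendall(build_response(request, PAGES))
                except OSError:
                    pass  # client went away mid-request
                finally:
                    # Closing the connection tells the client the response is done.
                    conn.close()
```

Calling `serve("cert.pem", "key.pem")` with a self-signed pair from openssl would be enough for a localhost test. The `finally` block is the Python counterpart of ending the socket after writing: Gemini has no content-length header, so a client keeps waiting until the connection closes.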

I thought I might have to throw in the towel, but I fixed it just as the call was about to end, after everyone had shown their websites. My Gemini page now lives at gemini://yousefamar.com/ and you can visit it through the HTTP proxy here!

I found some Markdown to Gemini converters, and I considered having all my public pages as a capsule in Geminispace, but I think many of them wouldn't quite work under those constraints. So instead, in the future I might simply have a gemini/ directory in the root of my notes or similar, and have a little capsule there separate from my normal web stuff.

I'm quite pleased with this. It's not a big deal, but feels a bit like playing with the internet when it was really new (not that I'm old enough to have done that, but I imagine this is what it must have felt like).

Forgotten Conquest port

A while ago I wrote about discovering a long-forgotten project from 2014 I had worked on in the past called Mini Conquest. As the kind of person who likes to try a lot of different things all the time, over my short 30 years on this earth I have forgotten about most of the things I've tried. It can therefore be quite fun to forensically try and piece together what my past self was doing. I thought I had gotten to the bottom of this project and figured it out: an old Java gamedev project that allowed me to play around with the 2.5D mechanic.

Well, it turns out that's not where it ended. Apparently, I had ported the whole thing to Javascript shortly after, meaning it actually runs in the browser, even today somehow! I had renamed it to "Conquest" by then. As was my style back then, I had 0 dependencies and wanted to write everything from scratch.

If you've read what I wrote about the last one, you might be wondering why the little Link character is no longer there, and what the deal with that house and the stick figure is. Well, turns out I decided to change genres too! It was no longer a MOBA/RTS but more like a civilisation simulator / god game.

The player can place buildings, but the units have their own AI. The house, when placed, can automatically spawn a "Settler". I imagine that I probably envisioned these settlers mining and gathering resources on their own, with which you can decide what things to build next, and eventually fight other players with combat units. To be totally honest though, I no longer remember what my vision was. This forgetfulness is why I write everything down now!

The way I found out about this evolution of Mini Conquest was also kind of weird. On the 24th of January 2023, a GitHub user called markeetox forked my repo, and added continuous deployment to it via Vercel. The only evidence I have of this today is the notification email from Vercel's bot; all traces of this repo/deployment disappeared shortly after. Maybe he was just curious what this is.

I frankly don't quite understand how this works. The notification came from his repo on a thread related to a commit or something, that is apparently authored by me (since I authored the commit?) and I've been automatically subscribed in his fork? Odd!

Homebound: an old game jam project revived

Around two months ago, I was talking to a friend about these games that involve programming in some form (mainly RTSs where you program your units). Some examples of these are:

- https://leekwars.com

- https://codingame.com

- https://spacetraders.io

- https://screeps.com

- The various normal games that let you program AI players

Needless to say, I'm a fan of these games. However, during that conversation, I suddenly remembered: I made a game like this once! I had completely forgotten that for a game jam, I had made a simple game called Homebound. You can learn more about it at that link!

At the time, you could host static websites off of Dropbox by simply putting your files in the Public folder. That no longer worked, so the link was broken. I dug everywhere for the project files, but just couldn't find them. I was trying to think if I was using git or mercurial back then and where I could have put them. I think it's likely I didn't bother because it was something small to put out in a couple of hours.

Eventually, in the depths of an old hard drive, I found a backup of my old Dropbox folder, and in that, the Homebound source code! Surprisingly, it still worked perfectly in modern browsers (except for a small CSS tweak) and it now lives on GitHub pages.

Then, I forgot about this again (like this project is the Silence), until I saw the VisionScript project by James, which reminded me of making programming languages! So I decided to create a project page for Homebound here.

I doubt I will revisit this project in the future, but I might play with this mechanic again in the context of other projects. In that case, I might add to this devlog to reference that. I figured I should put it out there for posterity regardless!

Miniverse open source

I made some small changes to the Miniverse project. It still feels a bit boring, but I'm trying different experiments, and I think I want to try a different strategy, similar to Voyager for Minecraft. Instead of putting all the responsibility on the LLM to decide what to do each step of the simulation, I want to instead allow it to modify its own imperative code to change its behaviour when need be. Unlike the evolutionary algos of old, this would be like intelligent design, except the intelligence is the LLM, rather than the LLM controlling the agents directly.

Before I do this however, I decided to clean the codebase up a little, and make the GitHub repo public, as multiple people have asked me for the code. It could use a bit more cleanup and documentation, but at least there's a separation into files now, rather than my original approach of letting the code flow through me into a single file:

I also added some more UI to the front end so you can see when someone's talking and what they're saying, and some quality of life changes, like loading spinners when things are loading.

There's still a lot that I can try here, and the code will probably shift drastically as I do, but feel free to use any of it. You need to set the OPENAI_KEY environment variable and the fly.io config is available too if you want to deploy there (which I'm doing). The main area of interest is probably NPC.js which is where the NPC prompt is built up.

Collecting testimonials for mentorship/advisory

(Skip to the end for the conclusion on how this has affected my website, or continue reading for the backstory).

For as long as I can remember, I've been almost consistently engaged in some form of education or mentorship. Going back to my grandparents, and potentially great-grandparents, my family on both sides has all been teachers, professors, and even a headmaster, so perhaps it's something in my blood. I started off teaching in university as a TA (and teaching at least one module outright where the lecturer couldn't be bothered). Later, I taught part-time in a pretty rough school (which was quite exhausting) and even later at a much fancier private school (which wasn't as exhausting, but much less fulfilling) and finally I went into tutoring and also ran a related company. I wound this business up when covid started.

Over the years I found that, naturally, the smaller the class, the more disproportionate the impact you can have when teaching. I also found that this personal impact goes up exponentially not when I teach directly, but when I zoom out, find out what it is the student actually needs (especially adult students), and help them unblock those problems for themselves. As the proverb goes,

"Give a man a fish, and you feed him for a day. Teach a man to fish, and you feed him for a lifetime."

There's also a law of diminishing returns at play here. By far the biggest impact you can have when guiding someone to reach their goals (academic or otherwise) comes at the very start. This immediate impact has gotten bigger and bigger over time as I've learned more and more myself. Sometimes it's a case of simply reorienting a person, and sending them on their way, rather than holding their hand throughout their whole journey.

This is how I got into mentoring. I focused mainly on supporting budding entrepreneurs and developers from underprivileged groups, mainly organically through real-life communities, but also through platforms like Underdog Devs, ADPList, Muslamic Makers and a handful of others. If you do this for free (which I was), you can only really do it on the side, with a limited amount of time. I wasn't very interested in helping people who could actually afford paying me for my time, paradoxically enough...

I decided recently that there ought to be an optimal middle ground that maximises impact. 1:1 mentoring just doesn't scale, and large workshop series aren't effective. I wanted to test a pipeline of smaller cohorts and mix peer-based support with standard coaching. I have friends who I've worked with before who are willing to help with this, and I think I can set up a system that would be very, very cheap and economically accessible to the people I care about helping.

Anyway, I've started planning a funnel, and building a landing page. Of course, any landing page worth its salt ought to have social proof. So I took to LinkedIn. I never post to LinkedIn (in fact, this might actually have been my very first post in the ~15 years I've been on there). I found a great tool for collecting testimonials in my toolbox called Famewall, set up the form/page, and asked my LinkedIn network to leave me testimonials.

There were a handful of people that I thought would probably respond, but I was surprised that instead other people I completely hadn't expected were responding. In some cases, people that I genuinely didn't remember, and in other cases people where I didn't realise just how much of an impact I had on them. This was definitely an enlightening experience!

I immediately hit the free tier limit of Famewall and had to upgrade to a premium tier to access newer testimonials that were rolling in. It's not cheap, and I'm only using a tiny fraction of the features, but the founder is a fellow indie hacker building it as a solo project and doing a great job, and we chatted a bit, so I figured I should support him.

I cancelled my subscription a few days later when I got around to re-implementing the part that I needed on my own site. That's why this post is under the Website project; the review link (https://amar.io/review) now redirects to a bog standard form for capturing testimonials (with a nice Lottie success animation at the end, similar to Famewall) and in the back end it simply writes the data to disk, and notifies me that there's a new testimonial to review. If it's ok, I tweak the testimonial JSON and trigger an eleventy rebuild (this is a static site). In the future, I might delegate this task to Sentinel!

The testimonials then show up on this page, or any other page onto which I include testimonials.njk (like the future mentoring landing page). For the layout, I use a library called Colcade which is a lighter alternative to Masonry recommended to me by ChatGPT when I asked for alternatives, after Masonry was giving me some grief. It works beautifully!

Amarbot merges into my cyborg self

Amarbot no longer has a WhatsApp number. This number now belongs to Sentinel, the custodian of Sanctum.

This number was originally wired up directly to Sanctum functions, as well as Amarbot's brain; a fine-tuned GPT-J model trained on my chat history. Since this wiring was through Matrix, it became cumbersome to have to use multiple Matrix bridges for various WhatsApp instances. I eventually decided to use that model on my actual personal number instead, which left Amarbot's WhatsApp number free.

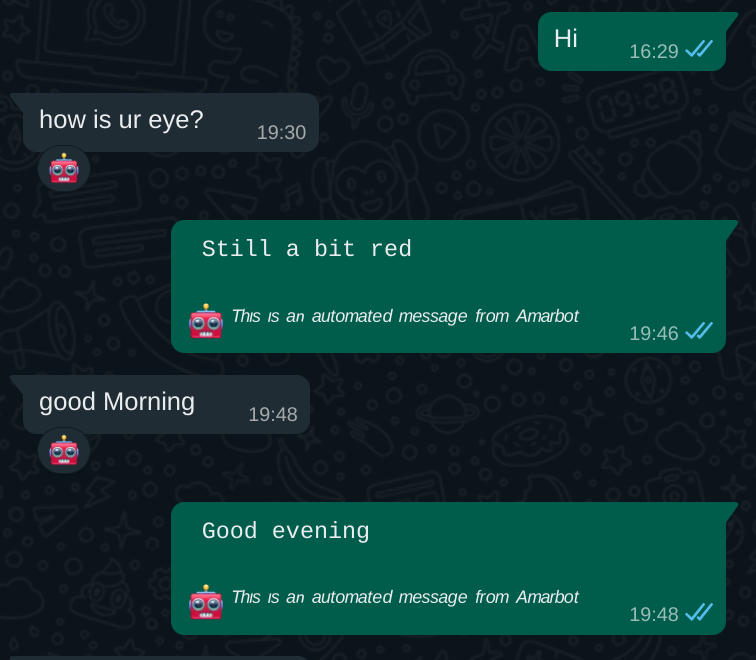

Whenever Amarbot responds on my behalf, there's a small disclaimer. This is to make it obvious to other people whether it's actually me responding or not, but also so when I retrain, I can filter out artificial messages from the training data.
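The disclaimer idea can be sketched roughly as below. This is only an illustration: the disclaimer text, the message shape (`{ sender, body }`) and the function names are assumptions, not the actual Amarbot code.

```javascript
// Hypothetical marker; the real disclaimer wording is not given in the post.
const BOT_DISCLAIMER = "\n\n🤖 This reply was generated by Amarbot.";

// Append the disclaimer to an outgoing bot reply.
function tagBotReply(text) {
  return text + BOT_DISCLAIMER;
}

// True if a logged message carries the disclaimer, i.e. was written by the bot.
function isBotMessage(message) {
  return message.body.endsWith(BOT_DISCLAIMER);
}

// Keep only human-written messages when preparing data for retraining.
function filterTrainingData(messages) {
  return messages.filter((m) => !isBotMessage(m));
}

const log = [
  { sender: "me", body: "See you at 6?" },
  { sender: "me", body: tagBotReply("Sure, sounds good!") },
];
const humanOnly = filterTrainingData(log);
```

Because the marker is a fixed suffix, the same string serves both the reader (it is visible in the chat) and the retraining filter.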

Sentinel: my AI right hand

I mentioned recently that I've been using OpenAI's new functions API in the context of personal automation, which is something I've explored before without the API. The idea is that this tech can short-circuit going from a natural language command, to an actuation, with nothing else needed in the middle.

The natural language command can come from speech, or text chat, but almost universally, we're using conversation as an interface, which is probably the most natural medium for complex human interaction. I decided to use chat in the first instance.

Introducing: Sentinel, the custodian of Sanctum.

No longer does Sanctum process commands directly, but rather is under the purview of Sentinel. If I get early access to Lakera (the creators of Gandalf), he would also certainly make my setup far more secure than it currently is.

I repurposed the WhatsApp number that originally belonged to Amarbot. Why WhatsApp rather than Matrix? So others can more easily message him -- he's not just my direct assistant, but like a personal secretary too, so e.g. people can ask him for info if/when I'm busy. The downside is that he can't hang out with the other Matrix bots in my Neurodrome channel.

A set of WhatsApp nodes for Node-RED were recently published that behave similarly to the main Matrix bridge for WhatsApp, without all the extra Matrix stuff in the way, so I used that to connect Sentinel to my existing setup directly. The flow so far looks like this:

The two main branches are for messages that are either from me, or from others. When they're from others, their name and relationship to me are injected into the prompt (this is currently just a huge array that I hard-coded manually into the function node). When it's me, the prompt is given a set of functions that it can invoke.

If it decides that a function should be invoked, the switchResponse node redirects the message to the right place. So far, there are only three possible outcomes: (1) doing nothing, (2) adding information to a list, and (3) responding normally like ChatGPT. I therefore sometimes use Sentinel as a quicker way to ask ChatGPT one-shot questions.
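As a rough sketch, the routing step could look something like the function below. It assumes the message shape returned by OpenAI's function-calling chat completions (a `function_call` object with a `name` and a JSON-encoded `arguments` string); the `doNothing` function name and the returned `route` labels are hypothetical, and the real switchResponse node may work differently.

```javascript
// Decide which of the three outcomes a model message maps to.
// `message` is assumed to look like:
// { content: string | null, function_call?: { name: string, arguments: string } }
function routeResponse(message) {
  const call = message.function_call;
  if (!call) {
    // No function requested: respond normally, like ChatGPT.
    return { route: "respond", text: message.content };
  }

  let args;
  try {
    // The arguments arrive as a JSON string and may be malformed.
    args = JSON.parse(call.arguments || "{}");
  } catch (err) {
    return { route: "nothing" };
  }

  switch (call.name) {
    case "addToList":
      return { route: "addToList", args };
    case "doNothing": // hypothetical name for the "do nothing" outcome
    default:
      // Unknown functions are ignored rather than guessed at.
      return { route: "nothing" };
  }
}
```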

The addToList function is defined like this:

    {
      name: "addToList",
      description: "Adds a string to a list",
      parameters: {
        type: "object",
        properties: {
          text: {
            type: "string",
            description: "The item to add to a list",
          },
          listName: {
            type: "string",
            description: "The name of the list to which the item should be added",
            enum: [
              "movies",
              "books",
              "groceries",
            ]
          },
        },
        required: ["text", "listName"],
      },
    }

I don't actually have a groceries list, but for the other two (movies and books), my current workflow for noting down a movie to watch or a book to read is usually opening the Obsidian app on my phone and actually adding a bullet point to a text file note. This is hardly as smooth as texting Sentinel "Add Succession to my movies list". Of course, Sentinel is quite smart, so I could also say "I want to watch the first Harry Potter movie" and he responds "Added "Harry Potter and the Sorcerer's Stone" to the movies list!".

The actual code for adding these items to my lists is by literally appending a bullet point to their respective files (I have endpoints for this) which are synced to all my devices via the excellent Syncthing. In the future, I could probably make this fancier, e.g. query information about the movie/book and include a poster/cover and metadata, and also potentially publish these lists.
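Appending a bullet point to a list file could be sketched like this. The post routes this through endpoints; this sketch writes to the file directly, and the `notesDir` parameter and the `<listName>.md` file naming are assumptions for illustration only.

```javascript
const fs = require("fs");
const path = require("path");

// List names accepted by the addToList function definition.
const ALLOWED_LISTS = ["movies", "books", "groceries"];

// Hypothetical mapping from a list name to a note file inside the synced folder.
function listFile(notesDir, listName) {
  if (!ALLOWED_LISTS.includes(listName)) {
    throw new Error(`Unknown list: ${listName}`);
  }
  return path.join(notesDir, `${listName}.md`);
}

// Append the item as a Markdown bullet and return a confirmation message.
function addToList(notesDir, { text, listName }) {
  const file = listFile(notesDir, listName);
  fs.appendFileSync(file, `- ${text}\n`, "utf8"); // creates the file if missing
  return `Added "${text}" to the ${listName} list!`;
}
```

Validating `listName` against the same enum as the function definition means a hallucinated list name fails loudly instead of creating a stray file.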

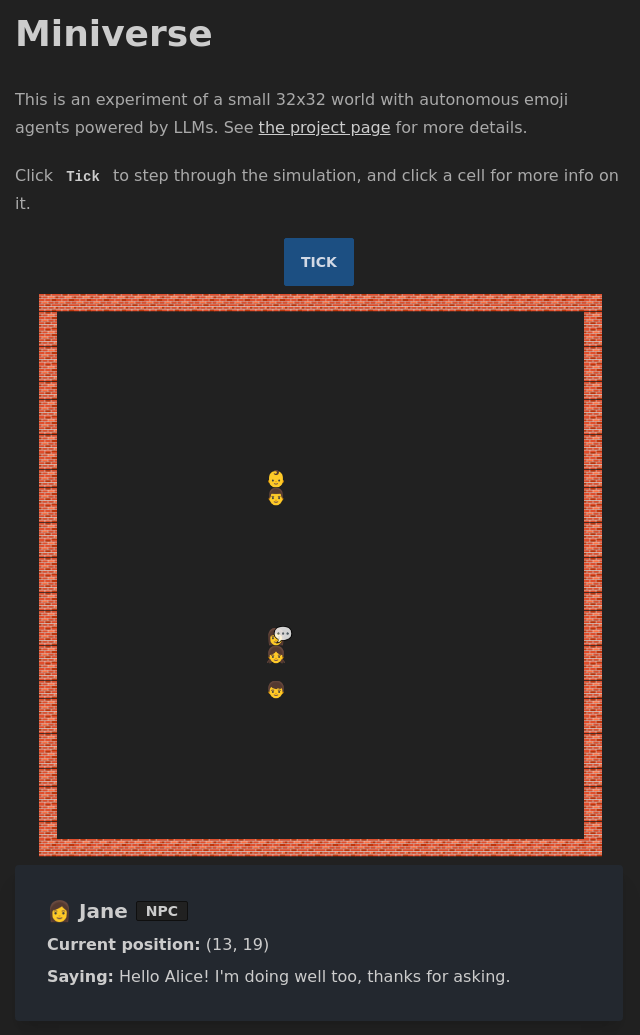

Building the Miniverse

I've been experimenting with OpenAI's new functions API recently, mostly in the context of personal automation, which is something I've explored before without the API (more on that in the future). However, something else I thought might be interesting would be to give NPCs in a virtual world a more robust brain, like the recent Stanford paper. This came in part from the thinking from yesterday's post.

The Stanford approach had many layers of complexity and they were attempting to create something that is close to real human behaviour. I'm less interested in that, and would instead like to design an environment with much higher constraints based on simple rules. I think finding the right balance there leads to the most interesting emergent results.

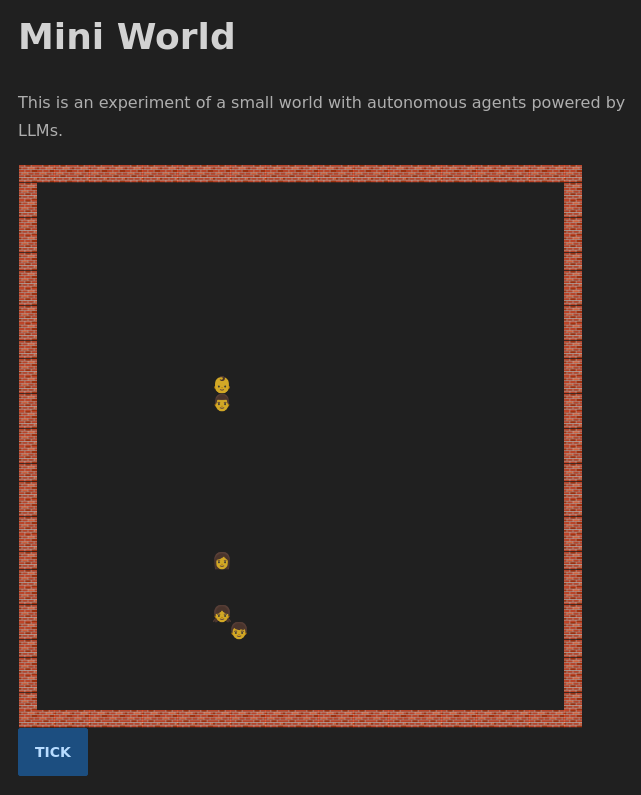

So my first goal was to create a very tightly scoped environment. I decided to start with a 32x32 grid, made of emojis, with 5 agents randomly spawned. The edge of the grid is made of walls so they don't fall off.
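A minimal sketch of that starting world, assuming particular wall and floor emojis and an `{ id, x, y }` agent shape (neither is specified above):

```javascript
const SIZE = 32;
const WALL = "🧱"; // assumed emoji for walls
const FLOOR = "⬜"; // assumed emoji for empty cells

// Build a SIZE x SIZE grid with walls on the edge and spawn agents
// on random, distinct interior cells.
function createWorld(agentCount = 5, random = Math.random) {
  const grid = [];
  for (let y = 0; y < SIZE; y++) {
    const row = [];
    for (let x = 0; x < SIZE; x++) {
      const isEdge = x === 0 || y === 0 || x === SIZE - 1 || y === SIZE - 1;
      row.push(isEdge ? WALL : FLOOR);
    }
    grid.push(row);
  }

  const agents = [];
  while (agents.length < agentCount) {
    // Interior coordinates run from 1 to SIZE - 2 inclusive.
    const x = 1 + Math.floor(random() * (SIZE - 2));
    const y = 1 + Math.floor(random() * (SIZE - 2));
    const taken = agents.some((a) => a.x === x && a.y === y);
    if (!taken) agents.push({ id: agents.length, x, y });
  }
  return { grid, agents };
}

const world = createWorld();
```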

Agents after they had walked towards each other for a chat

When I was originally scoping this out, I thought I would add mechanisms for interacting with items too. These items could be summoned in some way perhaps. I built a small API for getting the nearest emoji to item text as well, which is still up at e.g. https://gen.amar.io/emoji/green apple (replace "green apple" with whatever). It also caches the emojis so overall not expensive to run.

I also explored various models for generating emoji-like images, for the more fantastical items, and landed on emoji diffusion. It was at this point that I quickly realised I'm losing control of the scope, and decided to focus on NPCs only, and no items.

Each simulation step (tick) would iterate over all agents and compute their actions. I planned for these possible actions:

- Do nothing

- Step forward

- Step back

- Step left

- Step right

- Say X

- Remember X (doesn’t end round)

I wanted the response from OpenAI to only be function calls, which unfortunately you can't control, so I had to add "You MUST perform a single function in response to the above information" to the prompt. If I get a bad response, we either retry or fall back to "do nothing", depending.
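The retry-or-fall-back logic might be sketched as follows. `askModel` is a hypothetical async function standing in for the actual OpenAI request, and the retry count and the `doNothing` name are assumptions.

```javascript
// Ask the model for an action, retrying on bad responses and
// falling back to "do nothing" when the retries run out.
// `askModel` is a hypothetical async function that resolves to a message like
// { content, function_call?: { name, arguments: "<JSON string>" } }.
async function decideAction(askModel, allowedNames, maxRetries = 2) {
  for (let attempt = 0; attempt <= maxRetries; attempt++) {
    const message = await askModel();
    const call = message && message.function_call;
    if (call && allowedNames.includes(call.name)) {
      try {
        return { name: call.name, args: JSON.parse(call.arguments || "{}") };
      } catch (err) {
        // Malformed arguments count as a bad response; try again.
      }
    }
  }
  return { name: "doNothing", args: {} };
}
```

A plain-text reply, a function that is not currently allowed, and unparseable arguments are all treated the same way here: as a bad response.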

The prompt contained some basic info, a goal, the agent's surroundings, the agent's memory (a truncated list of "facts"), and information on events of the past round. I found that I couldn't quite rely on OpenAI to make good choices, so I selectively build the list of an agent's capabilities on the fly each tick. E.g. if there's nobody in speaking distance, we don't even give the agent the ability to speak. If there's a wall ahead of the agent, we don't even give the agent the chance to step forward. And if the agent just spoke, they lose the ability to speak again for the following round or else they speak over each other.
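Building the capability list per tick could look roughly like this. The context field names and action names are hypothetical; only the three rules (nobody nearby, wall ahead, just spoke) come from the description above.

```javascript
// Build the list of functions offered to one agent for this tick.
// `ctx` is a hypothetical summary of the agent's situation:
// { wallAhead, someoneInEarshot, spokeLastRound }
function availableActions(ctx) {
  const actions = ["doNothing", "stepBack", "stepLeft", "stepRight"];

  // Don't offer a step forward into a wall.
  if (!ctx.wallAhead) actions.push("stepForward");

  // Only offer speech when someone can hear it, and not two rounds in a row,
  // so agents don't talk over each other.
  if (ctx.someoneInEarshot && !ctx.spokeLastRound) actions.push("say");

  return actions;
}
```

Removing an option from the function list is more reliable than asking the model not to use it in the prompt, since the model can only call what it is given.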

I had a lot of little problems like that. Overall, the more complicated the prompt, the more off the rails it goes. Originally, I tried 'The message from God is: "Make friends"' as I envisioned interaction from the user coming in the form of divine intervention. But then some of the agents tried speaking to God and such, so I replaced that with 'Your goal is: "Make friends"', and later 'Your goal is: "Walk to someone and have interesting conversations"' so they don't just walk randomly forever.

They would also feel compelled to try and remember a lot. Often the facts they remembered were quite useless, like the goal, or their current position. The memory was small, so I tried prompt engineering to force them to treat memory as more precious, but it didn't quite work. Similarly, they would sometimes go on endless loops remembering the same useless fact over and over. I originally had all information in their memory (like their name) but I didn't want them to forget their name, so put the permanent facts outside.

Eventually, I removed the remember action, because it really wasn't helping. They could have good conversations, but everything else seemed a bit stupid, like I might as well program it procedurally instead of with LLMs.

I did however focus a lot on having a very robust architecture for this project, and made all the different parts easy to build on. The server does the simulation (in the future, asynchronously, but today, through the "tick" button) and stores world state in a big JSON object that I write to disk so I can rewind through past states. There is no DB, we simply read/write from/to JSON files as the world state changes. The structure of the data is flexible enough that I don't need to modify the schemas, and it can remain pretty forwards-compatible as I make additions, so I can run the server off of older states and it picks up those states gracefully.
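One way the JSON-on-disk approach could be sketched is below. The one-file-per-tick naming and the default fields are assumptions for illustration; the actual Miniverse layout may differ.

```javascript
const fs = require("fs");
const path = require("path");

// Hypothetical file naming: one JSON file per tick, zero-padded so they sort.
function stateFile(dir, tick) {
  return path.join(dir, `tick-${String(tick).padStart(6, "0")}.json`);
}

// Write the world state for a tick to disk so it can be rewound to later.
function saveState(dir, state) {
  fs.mkdirSync(dir, { recursive: true });
  fs.writeFileSync(stateFile(dir, state.tick), JSON.stringify(state, null, 2));
}

// Load a past state. Fields added in newer versions get defaults,
// so files written by older versions still load gracefully.
function loadState(dir, tick) {
  const saved = JSON.parse(fs.readFileSync(stateFile(dir, tick), "utf8"));
  return { tick: 0, agents: [], events: [], ...saved };
}
```

Spreading the saved object over a set of defaults is what keeps old snapshots usable: a file written before `events` existed simply loads with an empty list.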

Anyway, I'll be experimenting some more and writing up more details on the different parts as they develop!

I can finally sing

Ever since I discovered the Discord server for AI music generation, I knew I needed to train a model to make my voice a great singer. It took some figuring out, but now I'm having a lot of fun making myself sing every kind of song. I've tried dozens now, but here are some ones that are particularly notable or fun (I find it funniest when things glitch out especially around the high notes):

Project grid

When people visit my website, it's not very clear to them what it is I actually do. It used to be that my website doubled as my CV, but after a while that became sort of useless as I no longer needed to apply to jobs, and I stopped maintaining it.

Recently, I began revamping the landing page of my website (yet again) and started off by cleaning up the hero section. I had a bunch of icons that represented links, and thought I was being clever and language-agnostic by using icons instead of text, but then realised that even I was forgetting what icon meant what, so I couldn't begin to hope that others would know what they meant. So I added text. And I made the WebGL avatar have an image fallback in case the browser doesn't support WebGL. Similarly, I froze the parallax effects under the same conditions as those rely on hardware acceleration.

Then, I decided to create a project grid right under the hero. I wanted to treat this as a showcase of the things I am involved in, or have been involved in, loosely in order of importance. This has been inspired by:

- Philipp Lenssen, from whom I originally got the idea, and realised the tiles don't even really need to strictly be "projects" but can be whatever you want

- Alyssa X, from whom I got the idea for video preview banners, although I opted to make them autoplay, instead of on mouseover, for mobile-friendliness

- Art Chaidarun, from whom I got the idea of masonry-style tiling, and I might potentially also do those cool stack filters

I began by adding the most important stuff for now, and might add some more over time. I keep pretty good notes of everything I do, so this list could become very very long, as I've worked on a lot of things over time. I realised that a few, cool, recent things are more meaningful than many, old, arbitrary things, so I'll try not to make this grid too large, and link to the full directory of projects (at least the ones that have made their way online) in the last tile.

It would have also been quite boring I think if I listed all my publications, as they're largely related. The same holds true for every weekend project, hackathon, game jam, utility script, game mod, etc. The projects I worked on during university I think are similarly just too old now. I also left out my volunteering work because it felt a bit too vain to include, and I'm not sure it would actually spur on any meaningful conversation. I also left out the things where I don't have significant enough involvement.

Please let me know your thoughts on the above and/or on how to improve this!

Bonsai diversification

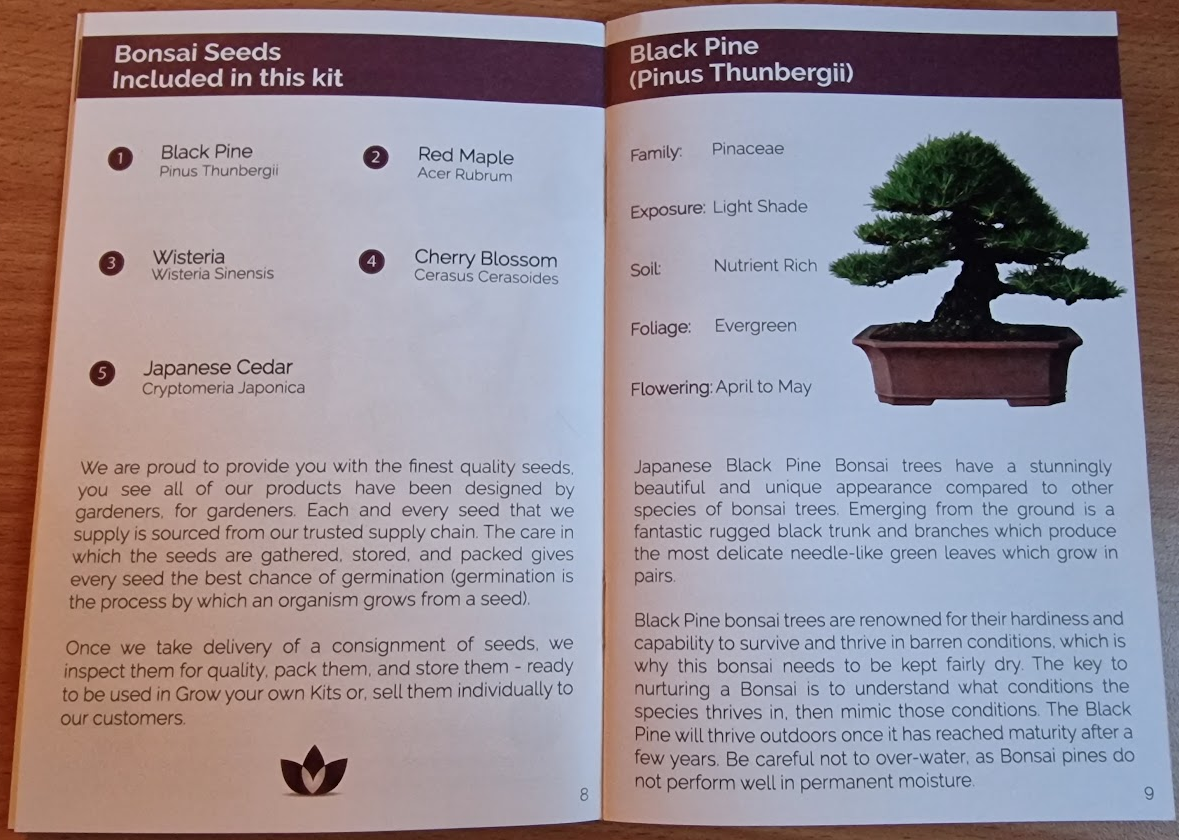

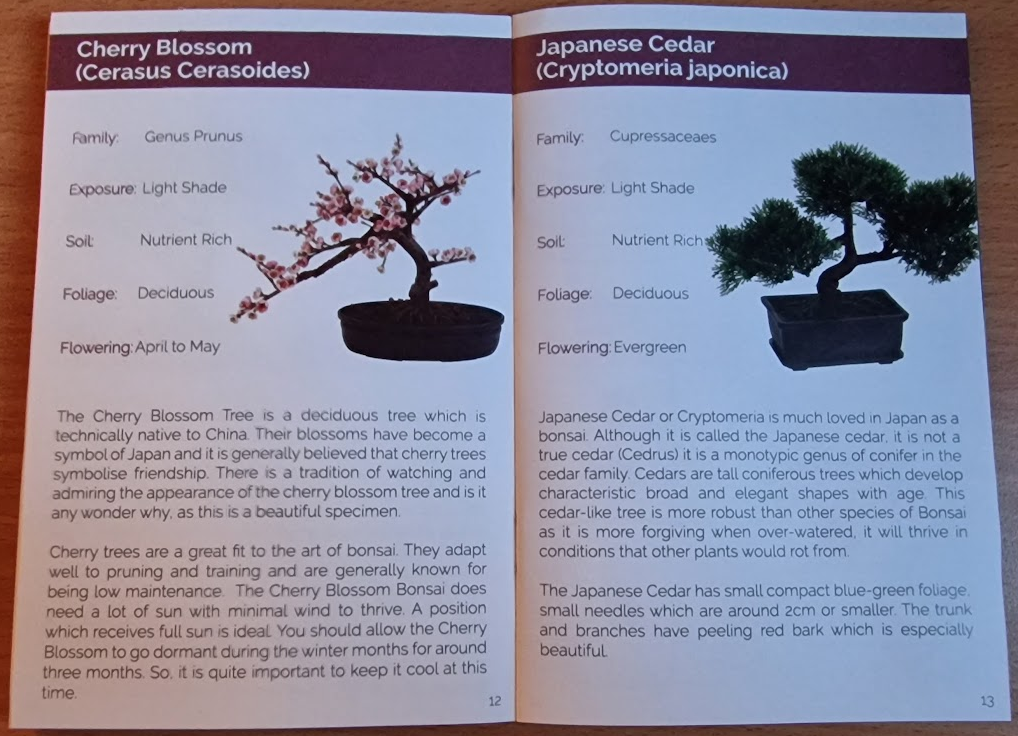

My Red Maple and Wisteria seeds haven't sprouted yet, but I was left with all this extra soil! So I decided that I ought to plant the other species too. The remaining seeds I have are for Black Pine, Cherry Blossom, and Japanese Cedar. This is what they look like respectively:

I only had three Cherry Blossom seeds, and unlike the Red Maple, I decided to only plant one seed in that pot. Besides that, I've largely only used half of the seeds I have of each species so far, and I'm thinking that even that is unnecessary, but let's see!

As I was soaking them for 48 hours, they kind of got mixed up a bit, and I had a bit of a challenge separating the Black Pine from the Cherry Blossom, but I think I got there in the end. To better keep track of everything, as I was really starting to forget which is which, I put in some little wooden sticks:

The soil had dried quite a bit, so I made it wetter, maybe even a little too wet, as it was soaking the cotton on the bottom and created some condensation on the plastic. I also used tap water, which I didn't do for the first two, as it's pretty hard / rich in calcium. For my tomato plant, the effects of this were soon obvious as calcium residue was visible on the top of the soil and edges of the soil where it meets the pot. I didn't want to have the same for these plants, but it should hopefully all be OK.

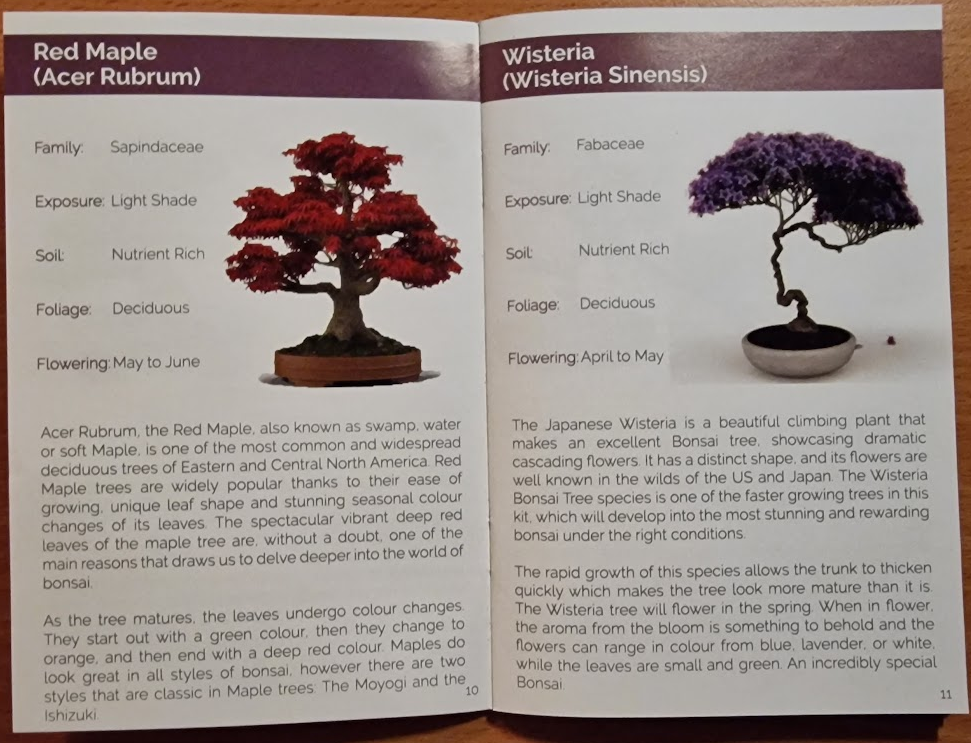

If you'd like to learn some more about each species, here are their sections in my little book:

So now we have 5 different pots stratifying -- let's see which sprout first!

Bonsai sowing and stratification

My bonsai seeds have soaked for 48 hours and I'm ready to move on to the next step! The reveal: I picked Wisteria and Red Maple. They ticked all the right boxes for me as my first try.

The Wisteria seeds are the small ones and the Red Maple are the two big ones. I used half of the seeds that I had of each species.

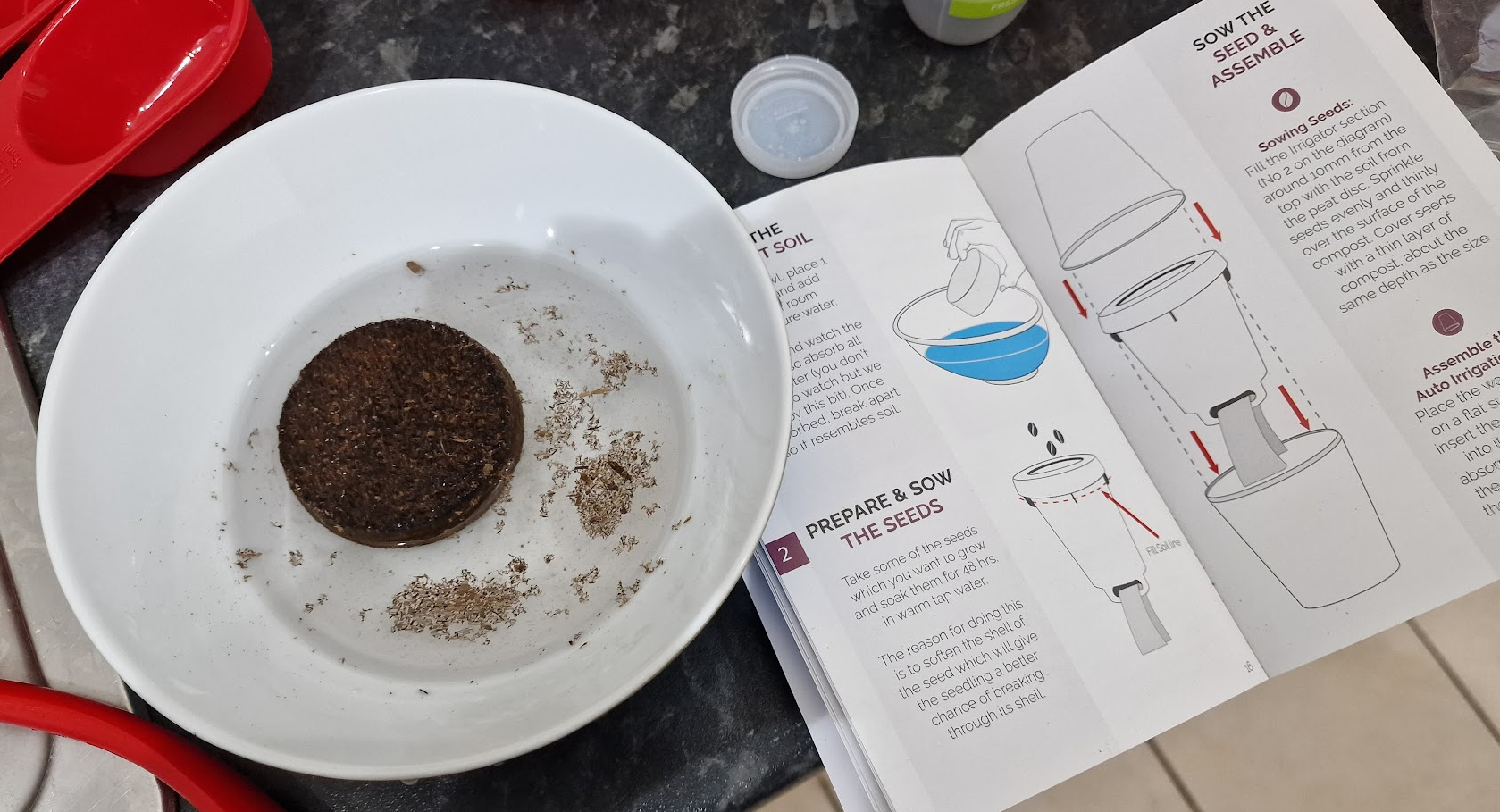

I assembled the "Auto Irrigation Growing Pot" and tried to ignore the conflicting instructions. I think you're not meant to fill the reservoir with any water at all until after the Stratification step (which I'll explain in a sec), and it's ambiguous how deep the seeds should go beyond "same depth as the size" (the size of what, the seeds?), so I just used my best judgement.

It turns out that I actually have a lot of soil. I didn't even use up a full peat disc so far. I have three more pots, so I'm considering getting some more seedlings started in the meantime and increase the chances of success...

At any rate, I sowed the current seeds and sprinkled a tiny bit of water into the soil to keep it moist, as it had dried out a bit in the meantime. I don't think the instructions should have the soil bit as step 1 if you're then going to soak the seeds for 48 hours after that; it should really be the second step.

And now that they're sown, I put them in the fridge. In the fridge, the one on the left is the Red Maple (this is more of a note to myself -- I should label them really; there are little wooden sticks for that in the kit). Putting them in the fridge is the first part of the Stratification step, which is meant to simulate winter conditions, then spring, so that they can germinate as they would in nature.

I'll be checking on them every few days and keeping the soil damp. Hopefully in two or three weeks they will start sprouting and I can remove them from the fridge. I set some calendar events. So now we wait!

Banzai, bonsai!

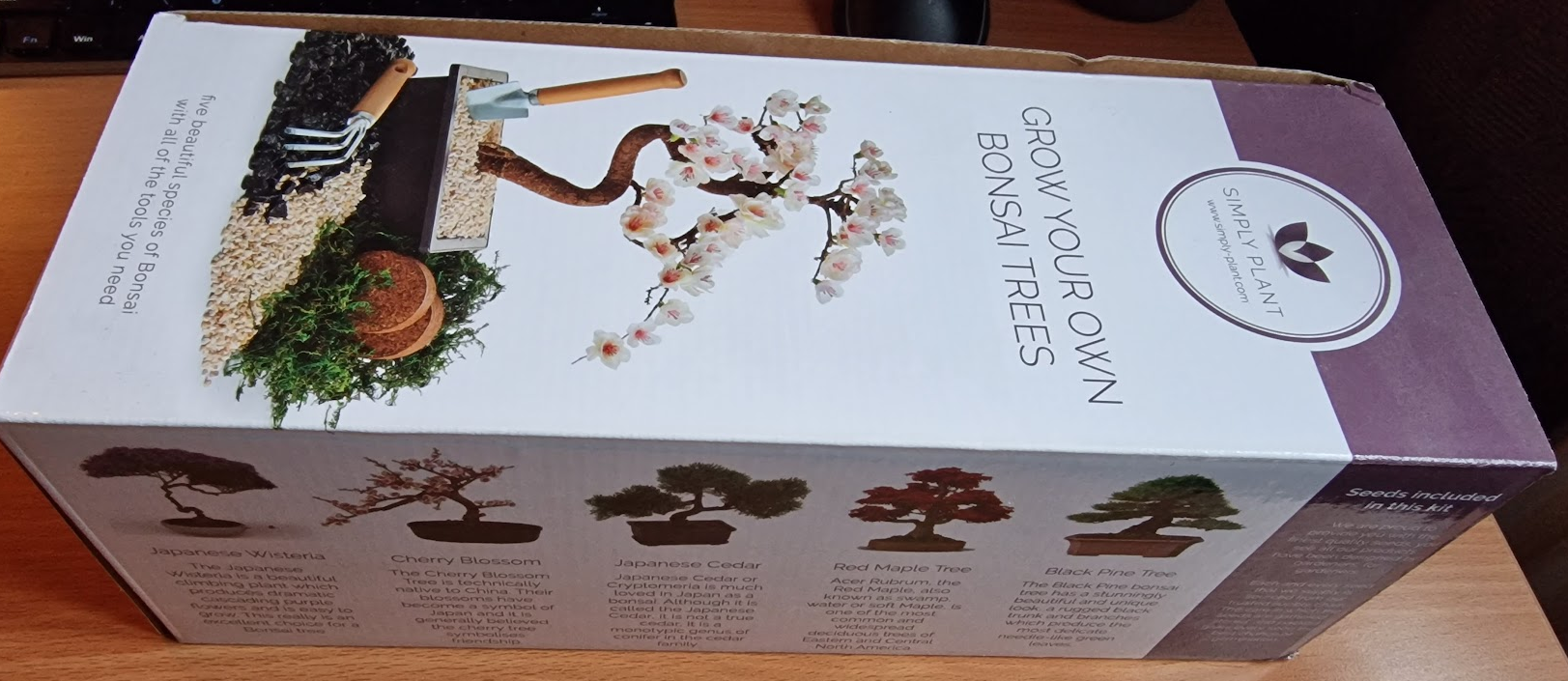

I finally decided to start on my bonsai project. To read more about what this is all about, check out the project page. I haven't written anything about the tomato project, or any of the other (failed) horticulture projects, but I will eventually, since documenting failures is important too! This is the first log of what is probably going to be rather perennial chronicles.

The kit that I'm using to get a start with bonsai is really quite neat. It comes with 5 different species of seeds: Japanese Wisteria, Cherry Blossom, Japanese Cedar, Red Maple Tree, and Black Pine Tree.

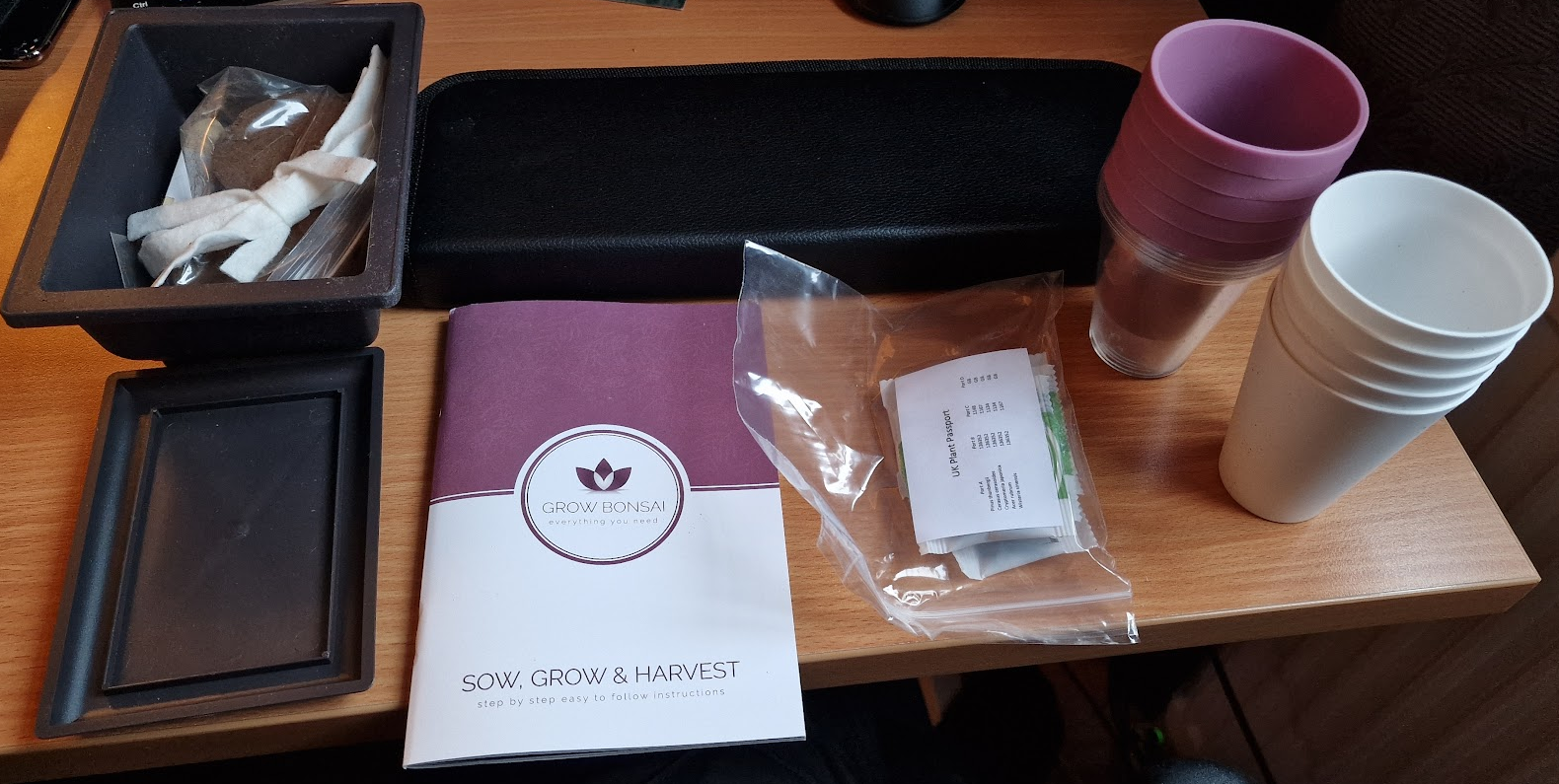

This is a great set of tools in such a small package and I'm quite excited! The instruction booklet goes into a decent amount of detail, though I already know a bunch from YouTube and other places as I had a general interest in bonsai before deciding to try myself.

It came with two peat pucks that you put in some water and watch as they slowly grow while they absorb the water.

I decided to do both of them, as I wanted to try multiple species at the same time, and they grow to about 3x their original size! It's actually quite a lot of soil.

I then decided on two species that I wanted to grow. The next step was to put some of the seeds in warm water for 48 hours, such that they can soften, which makes it easier for the seedling to break through the shell. The two that I picked had seeds that looked very distinct from each other!

If you would like to know what species I picked, check back in 48 hours when I document the sowing process! I'll give you a hint: I didn't pick the mainstream choice (Black Pine).

Why certain GPT use cases can't work (yet)

Some weeks ago I built the "Muslim ChatGPT". From user feedback, I very quickly realised that this is one use case that absolutely won't work with generative AI. Thinking about it some more, I came to a soft conclusion that at the moment there are a set of use cases that are overall not well suited.

Verifiability

There's a class of computational problems known as NP. The details aren't important here, except that the hardest of these problems are believed to be hard to solve, while any proposed solution is easy to verify. For example, it's hard to solve a Sudoku puzzle, but easy to check that a completed one is correct.
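To make the "easy to verify" half concrete, here is a small sketch of a Sudoku checker. It only verifies a completed 9x9 grid (27 simple checks); finding the solution in the first place is the part that needs search. The function names and the example grid generator are illustrative.

```javascript
// A group (row, column or box) is valid if it holds the digits 1..9 exactly once.
function isValidGroup(cells) {
  if (cells.length !== 9) return false;
  if (!cells.every((n) => Number.isInteger(n) && n >= 1 && n <= 9)) return false;
  return new Set(cells).size === 9;
}

// Check every row, column and 3x3 box of a completed grid.
function isSolvedSudoku(grid) {
  for (let i = 0; i < 9; i++) {
    const row = grid[i];
    const col = grid.map((r) => r[i]);
    const box = [];
    const top = Math.floor(i / 3) * 3;
    const left = (i % 3) * 3;
    for (let y = top; y < top + 3; y++) {
      for (let x = left; x < left + 3; x++) box.push(grid[y][x]);
    }
    if (!isValidGroup(row) || !isValidGroup(col) || !isValidGroup(box)) return false;
  }
  return true;
}

// Build a known-valid grid from a shifting pattern, to have something to check.
function exampleSolvedGrid() {
  const grid = [];
  for (let r = 0; r < 9; r++) {
    const row = [];
    for (let c = 0; c < 9; c++) {
      row.push(((3 * (r % 3) + Math.floor(r / 3) + c) % 9) + 1);
    }
    grid.push(row);
  }
  return grid;
}
```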

Similarly, I think that there's a space of GPT use cases where the results can be verified with variable difficulty, and where having correct results is of variable importance. Here's an attempt to illustrate what some of these could be:

The top right here (high difficulty to verify, but important that the results are correct) is a "danger zone", and also where deen.ai lives. I think that as large language models become more reliable, the risks will be mitigated somewhat, but in general not enough, as they can still be confidently wrong.

In the bottom, the use cases are much less risky, because you can easily check them, but the product might still be pretty useless if the answers are consistently wrong. For example, we know that ChatGPT still tends to be pretty bad at maths and things that require multiple steps of thought, but crucially: we can tell.

The top left is kind of a weird area. I can't really think of use cases where the results are difficult to verify, but also you don't really care if they're super correct or not. The closest use case I could think of was just doing some exploratory research about a field you know nothing about, to make different parts of it more concrete, such that you can then go and google the right words to find out more from sources with high verifiability.

I think most viable use cases today live in the bottom and towards the left, but the most exciting use cases live in the top right.

Recall vs synthesis

Another important spectrum is whether your use case relies more on recall or on synthesis. Asking for the capital of France is recall, while generating a poem is synthesis. Generating a poem using the names of all cities in France is somewhere in between.

At the moment, LLMs are clearly better at synthesis than recall, and it makes sense when you consider how they work. Indeed, most of the downfalls come from when they're a bit too loose with making stuff up.

Personally, I think that recall use cases are very under-explored at the moment, and have a lot of potential. This contrast is painted quite well when comparing two recent posts on HN. The first is about someone who trained nanoGPT on their personal journal here and the output was not great. Similarly, Amarbot used GPT-J fine-tuning and the results were also hit and miss.

The second uses GPT-3 Embeddings for searching a knowledge base, combined with completion to have a conversational interface with it here. This is brilliant! It solves the issues around needing the results to be as correct as possible, while still assisting you with them (e.g. if you wanted to ask for the nearest restaurants, they better actually exist)!
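The retrieval half of that architecture can be sketched in a few lines. This is a toy illustration, not the linked project's code: the embeddings here are made-up three-dimensional vectors (real ones would come from an embeddings API and have far more dimensions), and the function names and prompt wording are assumptions.

```javascript
// Cosine similarity between two equal-length vectors.
function cosineSimilarity(a, b) {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Return the k documents whose embeddings are closest to the query embedding.
function topMatches(queryEmbedding, documents, k = 2) {
  return documents
    .map((doc) => ({ ...doc, score: cosineSimilarity(queryEmbedding, doc.embedding) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k);
}

// Build a completion prompt that restricts the answer to the retrieved text.
function buildPrompt(question, matches) {
  const context = matches.map((m) => `- ${m.text}`).join("\n");
  return `Answer using only the context below.\n\nContext:\n${context}\n\nQuestion: ${question}`;
}

// Toy knowledge base with made-up embeddings.
const notes = [
  { text: "Note about restaurants", embedding: [0.9, 0.1, 0.0] },
  { text: "Note about books", embedding: [0.0, 0.2, 0.9] },
  { text: "Note about cafes", embedding: [0.7, 0.3, 0.1] },
];
const matches = topMatches([1, 0, 0], notes, 2);
const prompt = buildPrompt("Where can I eat nearby?", matches);
```

The recall comes from the search over stored text, and the model is only asked to phrase an answer from what was retrieved, which is why this setup is less prone to making things up.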

Somebody in the comments linked gpt_index so you can do this yourself, and I really think that this kind of architecture is the real magic dust that will revolutionise both search and discovery, and give search engines a run for their money.

Sanctum project and Christmas Giveaway

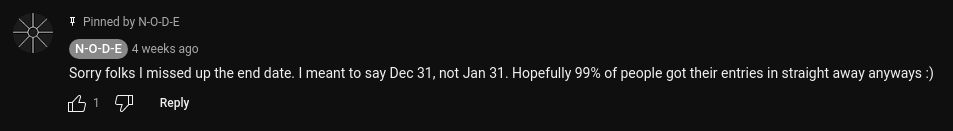

Welp, looks like I'm a month late for the N-O-D-E Christmas Giveaway. You might be thinking "duh, Christmas is long gone", and I also found it weird that the deadline was the 31st of January, but it turns out that that was a mistake in the video and he corrected it in the comments.

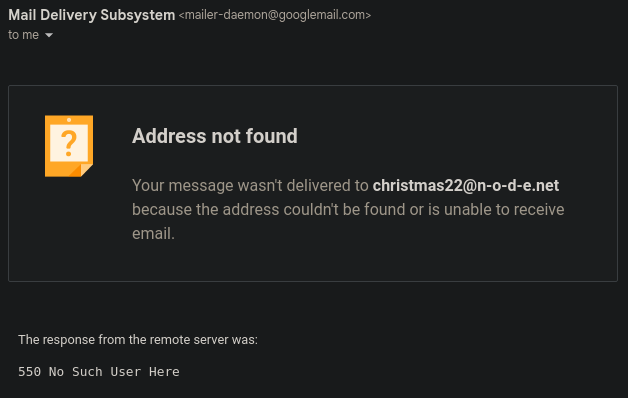

Since I keep up with YouTube via RSS, I didn't see that comment until it was too late. I only thought to check again when my submission email bounced.

Oh well! At least it gave me a reason to finally write up my smart home setup! This also wasn't the first time that participating in N-O-D-E events really didn't work out for me -- in 2018 I participated in the N-O-D-E Secret Santa and sent some goodies over to the US, and really put some effort into it I remember. Unfortunately I never got anything back which was a little disappointing, but hey, maybe next time!

Building a real-life Star Trek communicator

I've been planning to start this project for a while, as well as document the journey, but never really got around to it. I had a calendar reminder that tomorrow the N-O-D-E Christmas Giveaway closes, which finally gave me the kick in the butt needed to start this one! I also want to use this as an opportunity to create short-form videos on TikTok to learn more about it (in this case, documenting the journey). The project page is here.

ChatGPT as an Islamic scholar

Last weekend I built a small AI product: https://deen.ai. Over the course of the week I've been gathering feedback from friends and family (Muslim and non-Muslim). In the process I learned a bunch and made things that will be quite useful for future projects too. More info here!

Fine-tuning GPT-J online without spending a lot of money

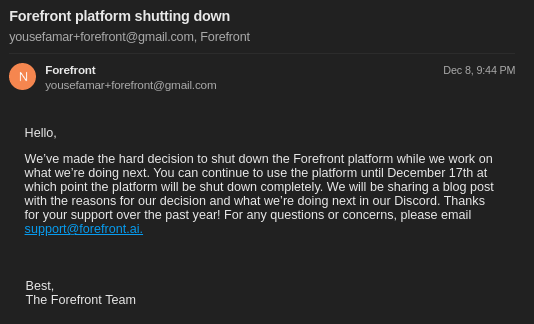

Amarbot was using GPT-J (fine-tuned on my chat history) in order to talk like me. It's not easy to do this if you follow the instructions in the main repo, plus you need a beefy GPU. I managed to do my training in the cloud for quite cheap using Forefront. I had a few issues (some billing-related, some privacy-related) but it seems to be a small startup, and the founder himself helped me resolve these issues on Discord. As far as I could see, this was the cheapest and easiest way out there to train GPT-J models.

Unfortunately, they're shutting down.

As of today, their APIs are still running, but the founder says they're winding down as soon as they send all customers their requested checkpoints (still waiting for mine). This means Amarbot might not have AI responses for a while soon, until I find a different way to run the model.

As for fine-tuning, there no longer seems to be an easy way to do this (unless Forefront open sources their code, which they might, but even then someone has to host it). maybe#6742 on Discord has made a colab notebook that fine-tunes GPT-J in 8-bit and kindly sent it to me.

I've always thought that serverless GPUs would be the holy grail of the whole microservices paradigm, and we might be getting close. Hopefully that would make fine-tuning easy and accessible again.

Amarbot now has his own number

It's official — Amarbot has his own number. I did this because I was using him to send some monitoring messages to WhatsApp group chats, but since it was through my personal account, it would mark everything before those messages as read, even though I hadn't actually read them.

My phone allows me to have several separate instances of WhatsApp out of the box, so all I needed was another number. I went for Fanytel to get a virtual number and set up a second WhatsApp bridge for Matrix. Then I also put my profile picture through Stable Diffusion a few times to make him his own profile picture, and presto: Amarbot now has his own number!

In case the profile picture is not clear enough, the status message also says that he's not real. I have notifications turned off for this number, so if you interact with him, don't expect a human to ever reply!

Amarbot connected to WhatsApp

As of today, if you react to a message you send me on WhatsApp with a robot emoji (🤖), Amarbot will respond instead of me. As people in the past have complained about not knowing when I'm me and when I'm a bot, I added a very clear disclaimer to the bottom of all bot messages. This is also so I can filter them out later if/when I want to retrain the model (similar to how DALL-E 2 has the little rainbow watermark).

The reason I was able to get this to work quite easily is thanks to my existing Node-RED setup. I'll talk more about this in the future, but essentially I have my WhatsApp connected to Matrix, and Node-RED also connected to Matrix. I watch for message reactions, but because those events only give you the ID of the message that was reacted to, not its actual text, I store a small window of past messages to check against. Then I query the Amarbot worker with the body of that message, format the reply, and respond with it.
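The window of past messages could be sketched as a small bounded map keyed by event ID. The class name, the window size and the simplified reaction shape (`{ key, eventId }`) are assumptions; real Matrix reaction events carry this information in a nested structure.

```javascript
// Keep the most recent messages by event ID so a reaction,
// which only carries the ID, can be resolved back to the message text.
class MessageWindow {
  constructor(limit = 100) {
    this.limit = limit;
    this.messages = new Map(); // Map preserves insertion order: oldest first
  }

  remember(eventId, body) {
    this.messages.delete(eventId); // re-inserting moves it to the newest slot
    this.messages.set(eventId, body);
    if (this.messages.size > this.limit) {
      const oldest = this.messages.keys().next().value;
      this.messages.delete(oldest);
    }
  }

  lookup(eventId) {
    return this.messages.get(eventId);
  }
}

// Return the text to send to the bot, or null if this reaction should be ignored.
// `reaction` is a simplified, hypothetical shape: { key, eventId }.
function onReaction(recent, reaction) {
  if (reaction.key !== "🤖") return null;
  return recent.lookup(reaction.eventId) ?? null;
}
```

If the reacted-to message has already fallen out of the window, the lookup returns nothing and the reaction is simply ignored.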

This integrates quite seamlessly with other existing logic I had, like what happens if you ask me to tell you a joke!

Amarbot trained on WhatsApp logs

Amarbot has been trained on the entirety of my WhatsApp chat logs since the beginning of 2016, which I think is when I first installed it. There are a handful of days of logs missing here and there as I've had mishaps with backing up and moving to new phones. It was challenging to extract my chat logs from my phone, so I wrote an article about this.