Log #all

This page is a feed of all my posts in reverse chronological order. You can subscribe to this feed in your favourite feed reader through the icon above.

Yousef Amar

Thoughts on the future of software

The way I like to speculate about the future of software is by imagining that you have infinite engineering resources. The other day, someone mentioned that they don't want to try PicoClaw (or any of the other spin-offs) because they'll miss out on cool features of the biggest project with the most contributions.

My uncontroversial prediction is that there will be a lot more hyper-personalised software, even products for single users, because that problem will go away. Agents will watch other projects for updates, or the internet for cool ideas, and instantly implement them. Commercial software in competitive spaces will quickly reach feature-parity and stay there, and it'll be harder to differentiate.

A lot of open source software today struggles because the commercial models around it don't work. People can donate money to the developers sometimes (very little in practice) or their time through contributions. A lot of open source software stalls and dies.

With infinite engineering resource, even if it's not completely free, there may be a commercial model where people share the token costs, instead of paying a subscription for a product. Then as long as people keep contributing, features keep getting developed. The more users a product has, the cheaper it becomes to develop.

The downside is there will be a pressure not to fork that software, because the userbase gets reset to 1. It's open source, but instead of donating to devs, or donating your time, you're donating tokens. But if you fork because the maintainers don't like your feature suggestion, and you want it anyway, suddenly you (or rather your agents) have to maintain that fork.

However, I suspect that this will become extremely cheap, in the same way that storage has become cheap. Cost will not be the bottleneck -- you'll be able to clear your product backlog faster than you can fill it. So the asymptote here is that there just won't be any maintainers anymore except your agents, making you your own personal software suite.

My hope is that there will be better interoperation between all this sprawl of software. It's hard to predict as I think the interfaces will change drastically (especially agent-to-agent communication). Collaborative software or social media may be the last to go, as they still have reasons for being unified (technical, or because of network effects and intentional walled-garden-ness).

Data is the only real moat left for SaaS founders. Speaking of data, I think data brokers are in big trouble if everyone starts building their own consumer apps. I would say this is overall a good thing.

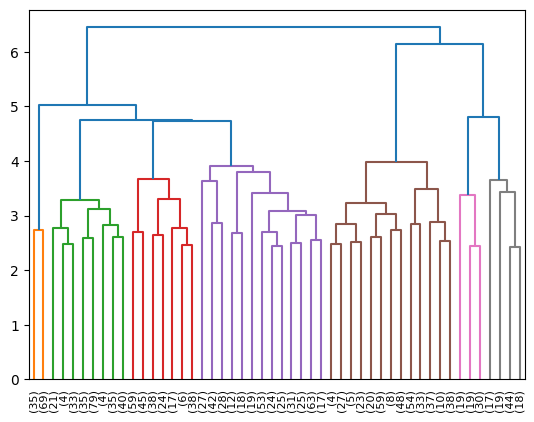

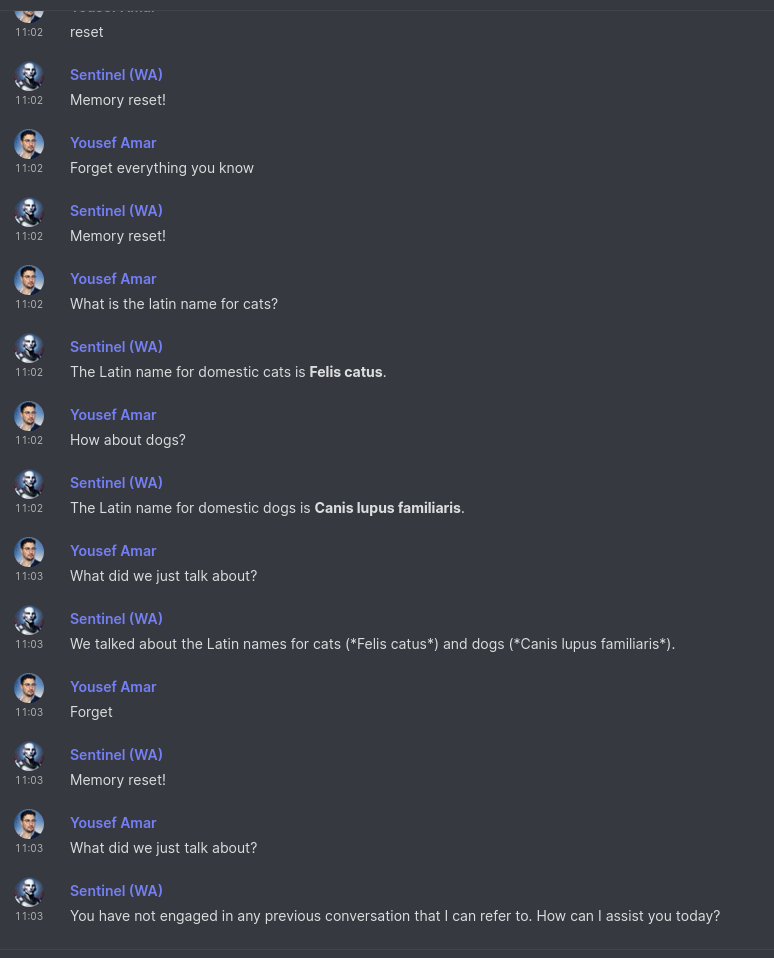

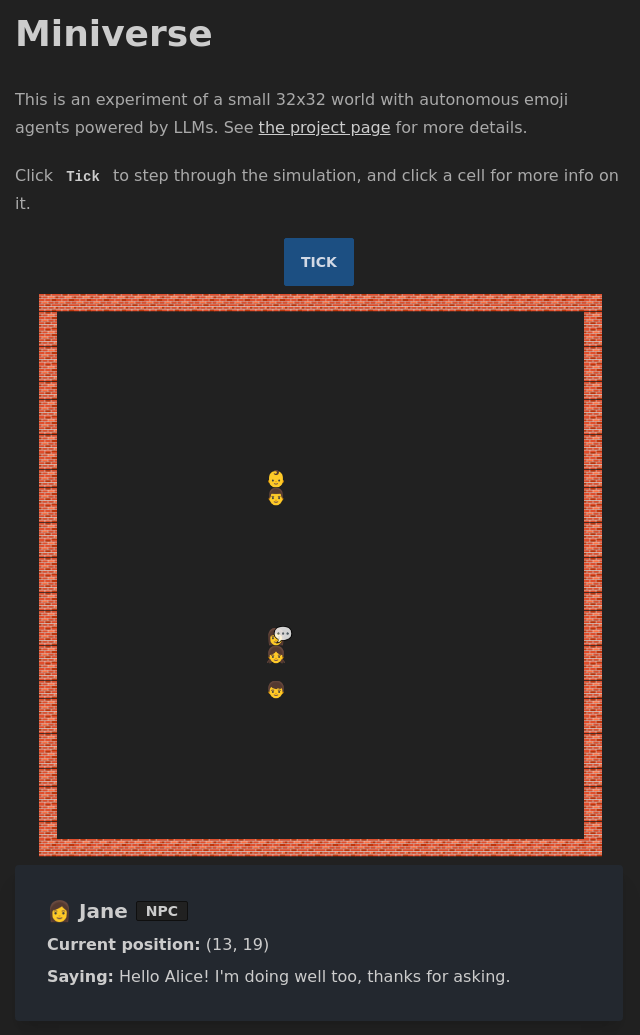

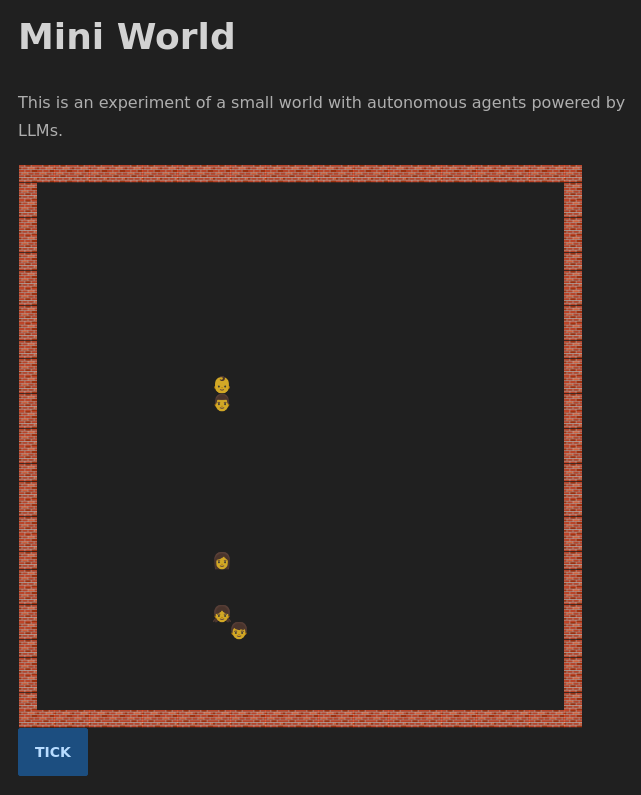

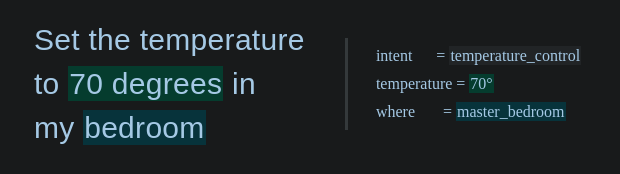

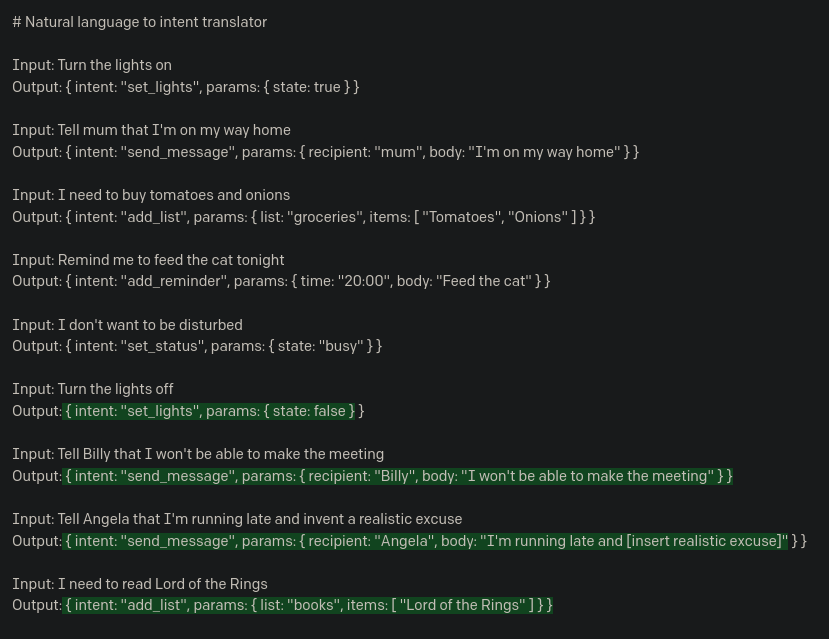

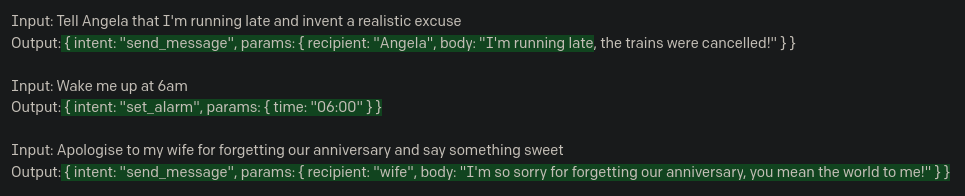

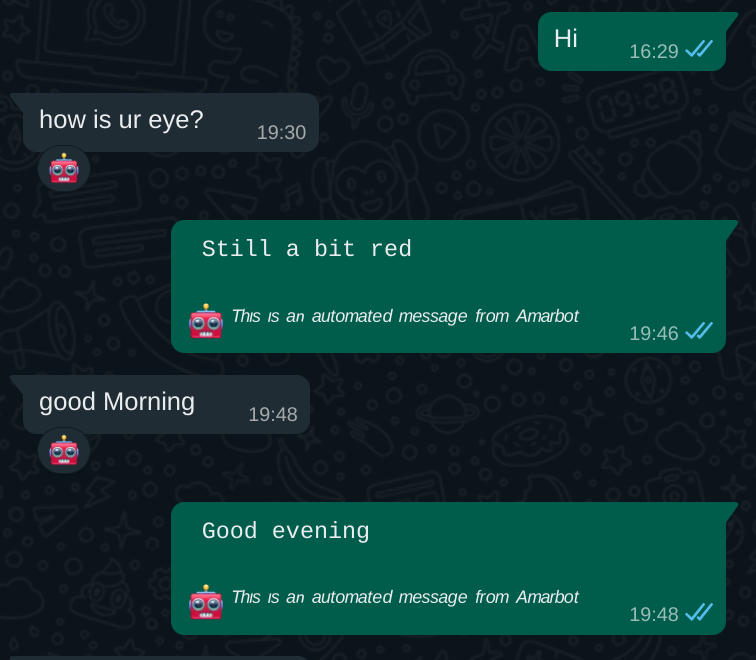

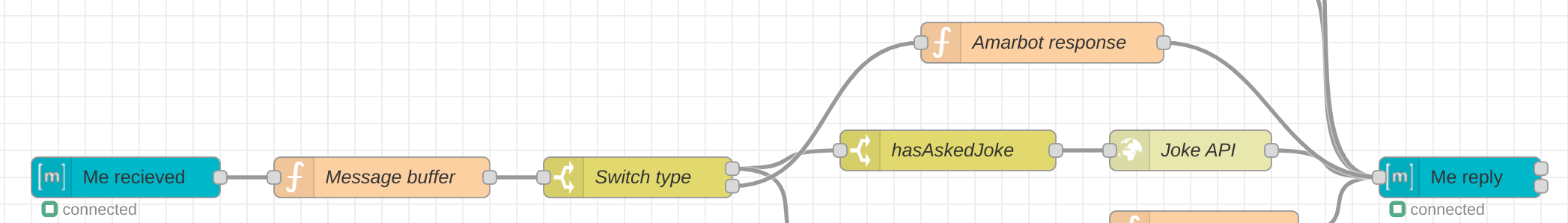

Sentinel is now Al

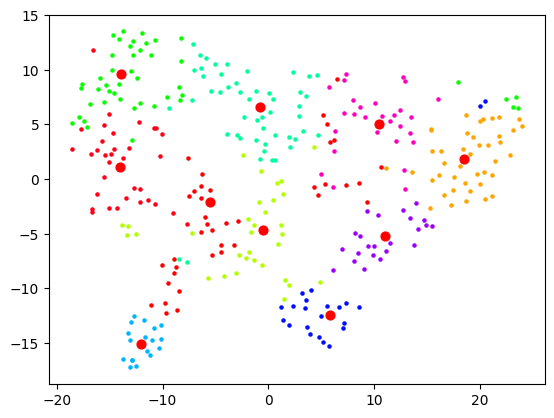

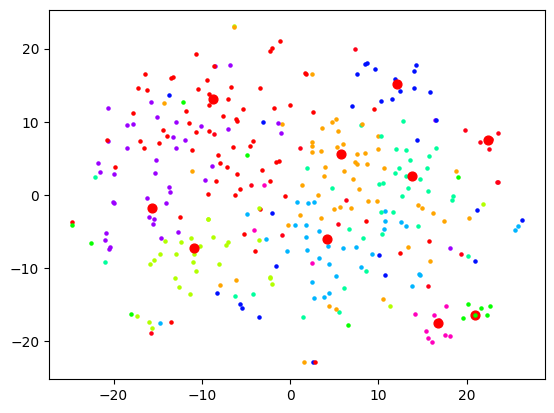

It's been a while since I've written about the bot formerly known as Sentinel. It has continued to evolve, with the most significant change being a switch from Node-RED to n8n. Over the past couple weeks, there has been an even more fundamental shift however: a brain transplant. One that has prompted me to rename Sentinel to Al (that's Al with an L).

The fact that it looks like AI with an i is a coincidence -- it comes from the Arabic word for "machine", and I also decided to start referring to Al as "him" rather than "it" for convenience. Rather than an "assistant", I position Al as a digital extension of myself and the orchestrator of my exocortex[1], while I'm his meatspace extension.

The big shift is that I've jumped on the OpenClaw bandwagon! People have mixed views on OpenClaw, but I can say that overall I find it quite exciting. I, like many people, have fully embraced the use of Claude Code, and have been trying to retrofit it to do more than simply build software. OpenClaw looks like the beginning of an ecosystem that allows us all to do just that without all rolling our own disparate versions.

OpenClaw embraces a concept that I find so fundamental as to be laughably obvious: the interface to chat bots should be existing chat apps. This is why Sentinel and Amarbot had their own phone numbers. While I have used Happy (and later HAPI, self-hosted on https://hapi.amar.io) in order to access my Claude Code sessions from mobile without the insanity of a mobile terminal, even those felt like anti-patterns. You can read more on why I think this is in my post on why we should interact with agents using the same tools we use for humans, as well as my post on agent chat interfaces in general.

For the record, I think these modes of interaction are inevitable, not a preference. While AI will interface with things via APIs, raw text, or whatever else, the bridge between AI and human will be the same tools as between human and human. This is also why I think the UIs purporting to be the "next phase" of agentic work where you manage parallel agents (opencode, conductor, etc) are the wrong path. The people who got this absolutely bang-on correct are Linear, with Linear for Agents, and I don't just say that because I love Linear (I do). I should assign tickets to agents the same way I would a human, and have discussions with them on Linear or Slack, the same way I would a human.

So, that brings me to what I think OpenClaw is currently bad at. First, cron should not be used as a trigger. Calendars should. Duh. One of the first things I did was give Al his own calendar and set up appropriate hooks. We need to be using the same tools, and I never use cron. This gives me a lot of visibility over what's going on that is much more natural, and I can move around and modify these events in the way they're supposed to be: through a calendar UI, not through natural language conversation.
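
To make the calendar-as-trigger idea concrete, here is a minimal sketch in Python. It is not how OpenClaw's hooks actually work: `CalendarEvent`, `due_events`, the one-minute polling window, and the event titles are all hypothetical, and fetching events from a real calendar is assumed to happen elsewhere.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class CalendarEvent:
    """Hypothetical, minimal view of an event on the agent's calendar."""
    title: str
    start: datetime


def due_events(events, now, window=timedelta(minutes=1)):
    """Return events starting in [now, now + window), earliest first."""
    upcoming = [e for e in events if now <= e.start < now + window]
    return sorted(upcoming, key=lambda e: e.start)


if __name__ == "__main__":
    # Made-up example data; a real setup would read these from the calendar.
    now = datetime(2026, 1, 5, 9, 0, tzinfo=timezone.utc)
    events = [
        CalendarEvent("Weekly review", now + timedelta(seconds=30)),
        CalendarEvent("Coaching session", now + timedelta(hours=3)),
    ]
    for event in due_events(events, now):
        # A real hook would hand the event to the agent as its task.
        print(f"trigger: {event.title}")
```

The point of the design is that moving or editing an event in a calendar UI changes what fires and when, without touching a crontab or asking the agent to reschedule itself.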

On the topic of visibility, workspace files need to be easily viewable and editable. By this I mean all the various markdown files that form the agent's memory and instructions. While I may not need to edit these and Al can do that on his own, if we do want to explicitly edit, it should be easy to do so, and natural language conversation is not the way (this is not code)! To solve this, I've put Al's brain into my Obsidian vault (yes, the very vault from which these posts are published!) and symlinked it into the actual OpenClaw directory. So now I can browse these files with a markdown editor heavily optimised for me! An added bonus of this is that Al's brain is now replicated across all my devices and backups for free, using Syncthing which already covers my vault.
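
The symlink trick can be sketched as follows. This is an illustration under assumptions, not the exact setup: `link_workspace` is a hypothetical helper, the file name `MEMORY.md` is made up, and the demo uses placeholder paths in a temporary directory rather than real Obsidian or OpenClaw locations.

```python
from pathlib import Path
import tempfile


def link_workspace(vault_dir: Path, workspace_link: Path) -> Path:
    """Make `workspace_link` a symlink pointing at `vault_dir`.

    Refuses to overwrite a real file or directory at the link path.
    """
    if workspace_link.is_symlink():
        workspace_link.unlink()  # replace a stale link
    elif workspace_link.exists():
        raise FileExistsError(f"{workspace_link} exists and is not a symlink")
    workspace_link.parent.mkdir(parents=True, exist_ok=True)
    workspace_link.symlink_to(vault_dir, target_is_directory=True)
    return workspace_link


if __name__ == "__main__":
    # Placeholder paths only; substitute the real vault folder and agent directory.
    with tempfile.TemporaryDirectory() as tmp:
        vault = Path(tmp) / "vault" / "al-brain"
        vault.mkdir(parents=True)
        (vault / "MEMORY.md").write_text("# Memory\n")
        link = link_workspace(vault, Path(tmp) / "agent" / "workspace")
        print(sorted(p.name for p in link.iterdir()))
```

Keeping the real files in the vault and the link in the agent's directory means the sync tool only ever sees ordinary files. Note that creating symlinks may need extra privileges on some platforms.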

It's still early days, so I'm still finding out the best ways to collaborate with Al. There are still a lot of issues, mainly related to Al forgetting things, that I'm working through, but it's been great! He can do everything that Sentinel already could, including talk to other people independently and update my lists. The timing is perfect, as I pre-ordered a Pebble Index 01[2] and this will very likely be the primary way that I communicate with Al in the future when it comes to those one-way commands. For two-way, I still use my Even G1 smart glasses, but I suspect that I may go audio-only in the future (i.e. my Shokz OpenComm2 as I don't like having stuff in my ears).

I've scheduled some sessions with Al where we try and push each other to grow. For him, this means new capabilities and access to new things and various improvements. For me, this means learning about topics that Al has broken down into a guided course or literal coaching. I'll post more as I go along! In the meantime, you can also chat with him.

[1] This is a term I took from Charles Stross' novel Accelerando which refers to the cloud of agents that support a human, and I was delighted to see the lobster theme in OpenClaw. I don't know if they were inspired by Accelerando, but sentient lobsters play a role!

[2] This device is controversial in its own right because of the battery that cannot be charged/replaced. When I watched the founder's video though, I was sold, as everything he said resonated. I suspect I won't use it for longer than 2 years anyway as I'll have probably moved on to something else by then. Incidentally, the founder is also the founder of Beeper, which I use for messaging, and which Al has access to through its built-in MCP server.

AI can indeed do our jobs

Cory Doctorow, the famous sci-fi author who coined Enshittification, recently wrote an article about a future where AI serves us versus where we serve it. In the first case, AI helps us and catches our mistakes, for example as a second opinion on a radiologist's work. In the second, it does the bulk of the work, jobs get lost, and the remaining juniors check its work, but mostly act as a scapegoat when both AI radiologist and junior doctor miss the tumour.

I think there are problems with this view. First off, in many cases, AI is simply better than humans. I don't mean that it's more productive / doesn't get tired etc, but rather that when it comes to spotting tumours, for a narrow use case, it has a lower rate of false positives and false negatives than humans. So I was surprised that he used that example. You can also just replace AI with "software" in many cases (or hardware: a 4-row harvester might not get all potatoes, but it's still better than a human with a tiller). If you add in fatigue as well, then it would be crazy to let humans operate heavy machinery or even drive a car, if statistically the roads are safer with AI at the wheel. To add to that, it kind of says the opposite of his first point: shouldn't AI overlords checking your work be the dystopian future, while humans wrangling fleets of AI be the future that puts us in control?

The second issue is around the idea that juniors keep their jobs and expensive, mouthy seniors are the ones getting fired. I can't speak for the medical profession, but at least in software engineering (which he touches on), that is certainly not the case, according to a 2025 Stanford study. Anecdotally, I see this too -- because these models are not (yet) that good, they're equivalent to a highly productive junior to mid-level engineer, and they need a senior to supervise them, just as you would need to supervise junior humans. And when a junior human messes up, you're accountable as their manager, which is as it should be.

I must say though, especially in the past few months, they've gotten better than most humans. They don't really make the kinds of mistakes Cory talks about anymore, so long as you use them properly (e.g. have them run a linter to catch their own syntax errors, make them write and run their own unit tests, etc). The same way you would help a junior developer not make mistakes. It's possible Cory's thinking about the code agents of ~6 months ago, which shouldn't feel like an eternity, but it is in this case! People have already adapted.

The consequence of this is that entry-level jobs are disappearing, and the demand for seniors has actually gone up. I don't say that out of denial (disclaimer: I'm as senior as it gets and the CTO of an AI company). This is because the path to become a senior is suddenly very narrow and there are fewer and fewer future seniors. The only way out is if AI gets good enough, fast enough, to also take the senior role. But for now, companies will fire (or more accurately, not hire) 10 juniors in favour of 1 senior with a Claude Max subscription. I suspect that we will see a lot more solo-founder startups appear as a result of this.

The true risk here is knowledge collapse, where if it doesn't get good enough (or if one day all AI disappeared for some reason), suddenly there's nobody left who can fix the machines that build machines, or the final machines. This happens in less dramatic ways all the time with technology and automation, and sometimes there are specialists left that still know the Old Ways and we don't need to build the knowledge again from first principles.

I agree that of course there's an AI bubble, and it will pop despite the fact that AI is genuinely useful (the same way that the internet is useful, and the dotcom bubble had to pop). However, I don't think we need to do anything to help it along -- it will pop no matter what. In the final two paragraphs Cory tries to explain what it is we need to do to pop the bubble in a way that minimises harm to people. He says we should become aware of the fact that AI can't actually do our jobs. But it can and it is!

SSH client on my G1 smart glasses

This is a demo of how I run an SSH client on my Even Realities G1 smart glasses, as multiple people have asked me. It's a weird look when you're sitting somewhere in public typing on a wireless split keyboard while staring off into space!

I connect my keyboard and my glasses to my phone, and have a small web app that sits in the middle. It can send text and images to the glasses (although the images are a bit janky), and it can capture recorded audio from the glasses, although I'm still on the lookout for a good local speech to text model. Via SSH, I can do a ton of stuff without any extra work needed!

Just a quick disclaimer: I actually regret buying these, as I have many issues with the product and, more importantly, the company. But it was an expensive purchase, so I'm making the best of it!

How I almost worked at Google

As I was sorting through some old bookmarks, I remembered a fun thing that happened over a decade ago now. I was doing some programming and searching some technical things online as I was debugging something, and suddenly the results page of Google tipped back and in the opening there was some text on a dark background that said "You're speaking our language. Up for a challenge?".

I clicked it and it took me to a terminal where I had to solve a series of leetcode-style puzzles. While I'm not a fan of those sorts of questions, I really enjoy Alternate Reality Games (ARGs) and Capture the Flag (CTFs), so this was really up my alley. As you progressed through the levels, the puzzles got harder and harder, eventually having time limits in days rather than minutes or hours. I remember feeling quite proud of figuring out "ah, they want me to use dynamic programming here", etc.

After level 5 if I remember correctly, it asks you for your contact details. I put those in and carried on. Eventually, I got to maybe level 8, and the problem was quite long, and I had a life to get back to, so I let it lapse. I eventually found out that this was also a promotion for the new (at the time) movie called The Imitation Game.

Not long after, a headhunter from Google reached out to me. Anyone who knows me knows that I would never in a million years work at Google (or any big tech company for that matter). However, at the time (approx 2014), I didn't really know what I wanted to do. So I ended up interviewing for an SE role at Google.

I remember being surprised at how low-tech their process was -- the tech interview was me on the phone with the interviewer (actual phone, not Skype) and typing code into a Google Doc. I remember it took me a while to figure out the answer to another leetcode-like question about normalising strings and searching through them or something. I called a friend afterwards who actually studied CS (unlike me) and told him about it, and he got the answer instantly.

Eventually, I had a choice between either that or pursuing a PhD. I picked doing a PhD. Sometimes I wonder where my life would be had I started working in industry instead. I know now that I would not have enjoyed working at Google, but then again, I really did not enjoy my PhD! So that's the story of how I almost started a career in big tech.

The link (https://foobar.withgoogle.com/) is no longer active and redirects to Google's jobs page, however something similar seems to live at https://h4ck1ng.google/, though that looks very different to what I remember. It still has a similar vibe though!

Building my first keyboard

NB: I wrote most of this post in March 2024, but felt like I had a lot more to say, so kept it in my drafts until now. I don't actually remember what more I wanted to say, and a lot of the things I learned in the process are now redundant! So did a bit of editing and published it now, almost 2 years later. Everything after "The Build" is recent.

There's something quite different about this post. I'm typing much slower than normal. The reason for that is that I'm not used to the keyboard that I'm typing on. That's right folks, I finally did it. I built my first keyboard! And what a journey it's been!

There's a large and active community of keyboard enthusiasts, and I could not have learned as much as I have without them (especially the ZMK Discord server), so part of why I'm writing this is to document my learnings. I could write several posts to dig a bit deeper into individual aspects, but for now I'll just summarise my journey up to now while getting some typing practice (frankly, programming is going to be a bit harder compared to writing English prose). This has all been over the course of many weeks, on and off, so bear that in mind.

Keyboards

For a while, I had been using a cheap bluetooth keyboard off of Amazon. This served me well with my setup (I'll write more about that separately, since it's unusual and people have asked me about it). I then switched to a cheap folding BT keyboard with a trackpad and multiple BT profiles, and frankly it was a bit of a downgrade. I wanted something that was clean, portable, and efficient.

I had come across futuristic takes on keyboards and fancy ergonomic keyboards, via friends and research, all with large price tags. Some examples are:

- The Tap Strap 2

- The CharaChorder

- The Twiddler 4

- The Moonlander

- The Ergodox

- The Ultimate Hacking Keyboard

- and quite a few more

Most of these are programmable, and you can usually flash the keyboard controllers with QMK or ZMK firmware. Both are open source. There are some differences between the two, most notably that QMK is easier to use and has better support for mouse emulation, while ZMK is more permissively licensed (MIT rather than GPL) and has better support for wireless (especially split wireless) keyboards.

What does it mean to build one?

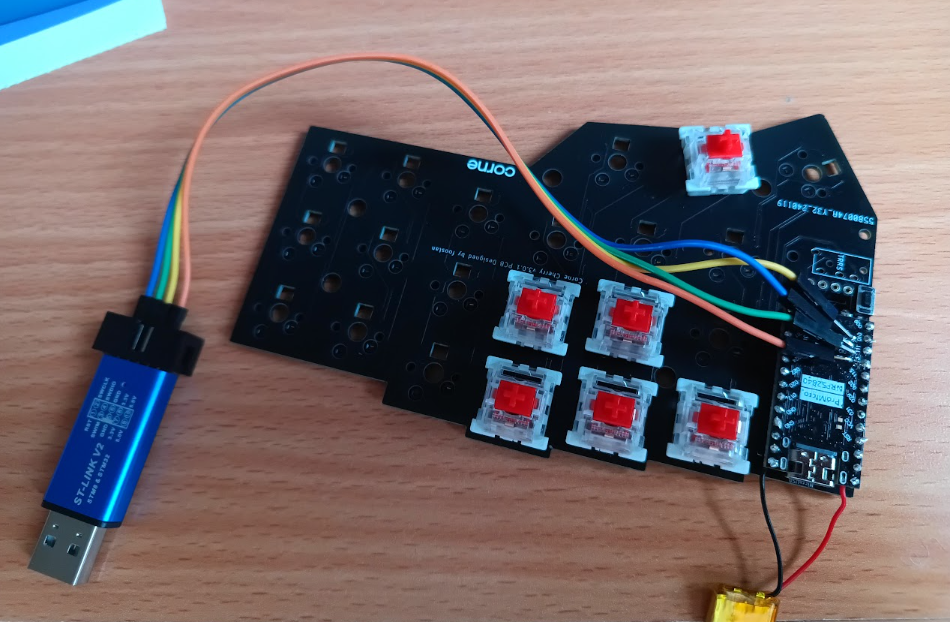

You're probably not going to design your own PCB, although I'd like to do this one day, as the PCB I'm using is open source. The rest is quite doable with basic electronics and soldering skills. It looked a bit intimidating to me from the outside since I didn't understand how the different components worked together, but it's possible to get kits. Since I didn't want to spend more than £100 to break into this hobby, I made things a bit harder on myself than they needed to be (and ended up spending more than that anyway, but it was worth it for the learning).

I wanted to build a wireless split keyboard, so some of this is specific to that, but it's not hugely different for normal keyboards. You need:

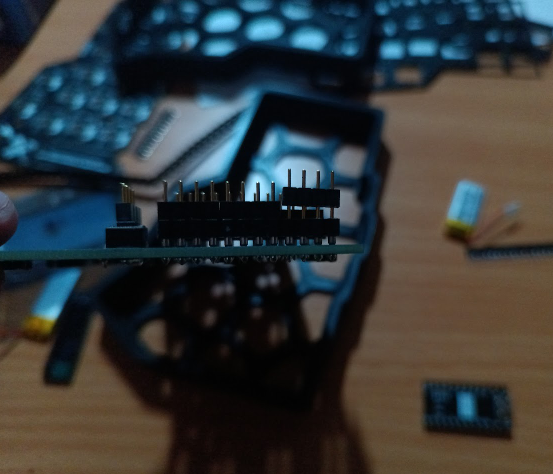

- A PCB

- Sockets and diodes

  - Make sure that the kind of sockets you get fit into the board and match your switches

- Switches

  - MX switches -- most common and easiest to find keycaps for, but chunkier

  - Choc switches -- lower profile but newer and less common, so harder to find keycaps for, although Choc v2 is MX-compatible at the cost of footprint

- Key caps

  - There is a whole industry of wild and wonderful styles and colours of caps (especially MX caps as mentioned) and I often see Etsy artists making custom resin key caps. For my first build however, I harvested these off of an older, normal mechanical keyboard.

- Controllers

  - The most typical controller for split wireless keyboards is the nice!nano. I'm using a cheap clone of this off of AliExpress called the SuperMini NRF52840. This did cause some problems.

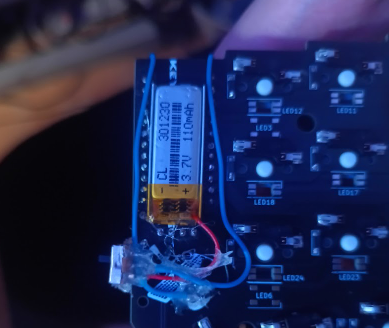

- Battery

  - Cheap AliExpress batteries will do. I also had a box of on/off switches and I glue-gunned one to each side so I can turn everything off when I want to.

- Display (optional)

  - This is optional, but common and useful. The nice!nano has a compatible display called the nice!view, but you can also use generic OLEDs (which is what I did). It could be that my build is not as battery-efficient as the brand one.

- Case

  - I opted for 3D printing from a case someone else made, but you can also use laser-cut acrylic for a thinner profile.

The build

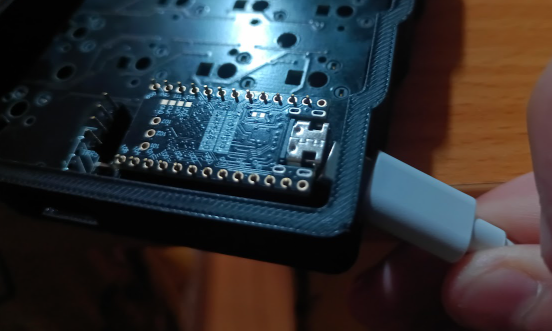

I considered documenting every step of the way here, but let's fast forward! All soldered and assembled, this is what I use:

For the most part, the building was easier than I thought. I think I only really had two main problems. The first one, and biggest slowdown, was that my controllers came broken. This was a whole big rabbit hole of troubleshooting, and I even bought a special in-circuit debugger/programmer, but long story short, the controllers just wouldn't pair with each other.

Normally, the left side is the master (and connects to your actual PC or phone) while the right side is the slave; the correct terms are "central" and "peripheral". Pairing problems between the two halves are not uncommon, and there are troubleshooting instructions, but eventually I narrowed it down to a hardware issue. The moment my replacements arrived, I flashed them and they worked right out of the box.

My second problem was soldering things on and off, because of the above situation and also mistakes I made. I wasn't really following a guide since I didn't order a kit, just the parts, so I was winging it somewhat. My controllers were at an odd height if I soldered them to removable pins, not aligning with the holes in the 3D-printed case, so I soldered them straight onto the board in the end, which was too permanent!

This is the point at which I finally upgraded my soldering gear and got more tools. I'd always been able to get away with the simplest iron (plug it straight in and nothing else), but now I had a reason to get solder remover, a sucker, an iron with adjustable temperature, etc, all in a nice set off AliExpress again. This was one of the budget killers in theory!

Key maps

So the hardware is done, now it's time to program it! At the time, I thought it was so convenient how you flash firmware onto it. No need for special hardware for flashing, or worrying about accidentally bricking it. It just shows up as a USB drive on your PC and you drag the .uf2 files into it. That's it!

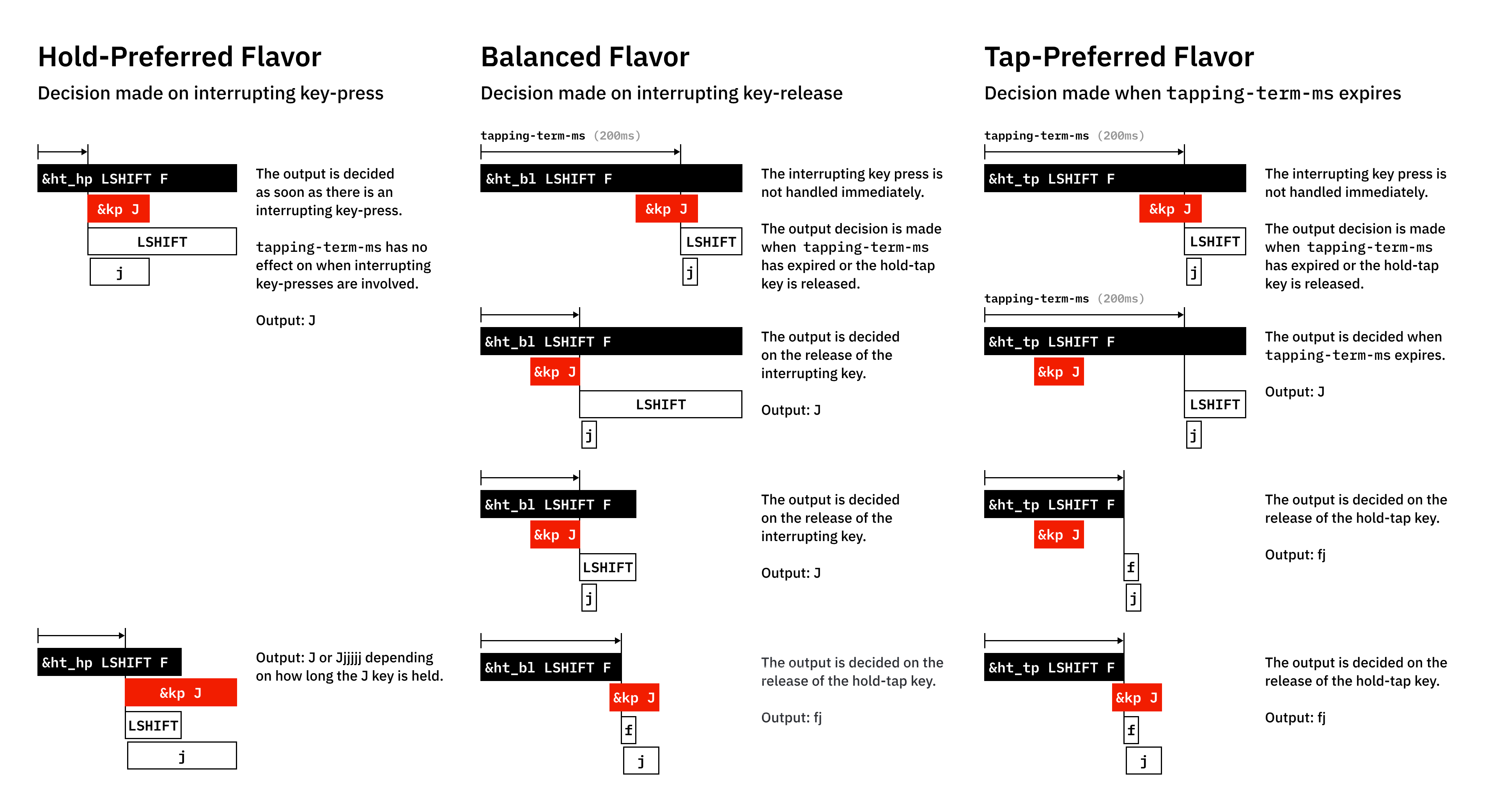

But now it's become even easier as you can use https://zmk.studio/, which I haven't gotten around to trying yet. I instead used a tool called ZMK Configurator which has a nice UI and builds in GitHub Actions. ZMK also has a notion of "layers" that are a bit like pressing shift, except you can change your whole layout. You can also have keys that switch between the devices paired to the same keyboard via Bluetooth. It's surprisingly robust!

A friend pointed out that these days (unlike 2 years ago when I was doing this), you can just ask Claude Code to edit your config for you. You can find my full config here on GitHub. This is especially useful as ZMK can do some pretty crazy things! E.g. look at all the variations on just one aspect of hold-tap behaviour!

Here are some customisations I've made to my key map over time that I really like. I'm a big fan of mod-morph:

- Workspace switching via mod-morph: The top row (Q-P) becomes numbers 1-0 when holding GUI/Super, sending Super+N directly. This avoids the awkward Super+layer+number combination since numbers are normally on a different layer.

- Top-left key is Esc, Ctrl+Tab, or Super+Q: This is done with chained mod-morphs. It's Esc by default, but when you press it with Ctrl, it acts like Ctrl+Tab (e.g. for cycling browser tabs, and you can do Shift+Ctrl+that key to go backwards). When you press it with Super, it sends Super+Q (e.g. for quitting windows). I needed this because I can no longer actually press Super+Q the normal way because of the point above.

- Ctrl/Tab hold-tap: The key below that is Ctrl when held with another key, but Tab when tapped alone (200ms tapping term).

- Backspace/Delete mod-morph: my top right key is Backspace normally, but Delete when holding GUI.

- Enter on bottom-right: The default enter position in the thumb cluster is crazy to me, and I kept sending messages before I was done typing them. So I moved it to the bottom right at pinky position, similar to a normal keyboard.

- HJKL arrow keys: I'm a vim user, so this was a no-brainer. When I switch to the lower layer, my HJKL keys become arrow keys.

- Media controls: Volume, mute, and playback controls on the lower layer's bottom row.

- Bluetooth device switching: Lower layer includes BT_CLR and BT_SEL 0-4 for managing up to 5 paired devices. I really never need to have more than 5 devices paired to my keyboard at the same time!
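
For readers new to these behaviours, the logic behind the mod-morph and hold-tap entries in the list above can be modelled in a few lines of Python. This is a toy model and not ZMK's implementation or config syntax: `ModMorph`, `hold_tap`, and the key-name strings are hypothetical, and the hold-tap here decides purely on press duration, whereas real firmware also reacts to other keys being pressed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModMorph:
    """Sends `morphed` while any trigger modifier is held, else `default`."""
    default: object          # a key name or another ModMorph
    morphed: object
    triggers: frozenset

    def resolve(self, held_mods):
        choice = self.morphed if self.triggers & set(held_mods) else self.default
        # Chaining: either branch may itself be a mod-morph.
        return choice.resolve(held_mods) if isinstance(choice, ModMorph) else choice


def hold_tap(held_ms, tapping_term_ms=200, hold="CTRL", tap="TAB"):
    """Simplified hold-tap: a press at or beyond the tapping term counts as a hold."""
    return hold if held_ms >= tapping_term_ms else tap


# Top-left key: Esc by default, Ctrl+Tab with Ctrl held, Super+Q with Super held.
top_left = ModMorph(
    default=ModMorph("ESC", "CTRL+TAB", frozenset({"CTRL"})),
    morphed="SUPER+Q",
    triggers=frozenset({"GUI"}),
)
# Top-right key: Backspace normally, Delete with Super held.
backspace = ModMorph("BACKSPACE", "DELETE", frozenset({"GUI"}))

if __name__ == "__main__":
    print(top_left.resolve(set()))
    print(top_left.resolve({"CTRL"}))
    print(top_left.resolve({"GUI"}))
    print(hold_tap(held_ms=80), hold_tap(held_ms=350))
```

Chaining works because the default branch of the outer mod-morph is itself a mod-morph, so the Super check happens first and the Ctrl check only applies when Super is not held.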

One thing I've alluded to but not mentioned is my old "Work from Phone" setup. I'll write about this in more detail soon, but the important bit here is that Android likes to steal your Super key for Android-y things like taking you to the home screen. To get around this, at the time, I used a tool called ExKeyMo to remap my keys Android-side. But nowadays, you can actually configure Android to send Left-Alt instead of Super via native settings. What this means is that I can keep my ZMK mappings normal, and simply run this on my PC to make it treat Left-Alt like Super:

xmodmap -e "remove mod1 = Alt_L"

xmodmap -e "add mod4 = Alt_L"

Now, when I switch desktop workspaces via a remote desktop app, it doesn't do weird things like switching Android apps!

Two years later

Spoiler alert: I've built a second Corne keyboard in the meantime! More on that soon. I've also become very fast at using this keyboard since then, and I carry it around everywhere. I now actually struggle to use a normal keyboard, but that's fine by me.

Life of a pigeon

These holes inside the giant nests,

I try to fly right through,

and then I'm on the ground...

oh no, what did I do?

I huddle in the corner,

the giants find me there.

They take me inside into their nest,

much warmer is the air.

Three times sleeping later,

still dazed in my new coop.

I don't know how to fly away,

and yet I eat and poop!

Vets and RSPCA,

they want to put me down.

"No!" the giants yell,

"you can't!", they say and frown.

Oxfordshire Wildlife Rescue;

to them I'm not a pest!

A lady comes and takes me,

to health I can now rest.

3D printer enjoyer club

I have so many things to write about, and I might do it over this holiday period, but the biggest news right now is that a very powerful tool has just joined my arsenal! I'm now the proud owner of a Creality Ender-3 V3 SE! That mouthful is a 3D printer. This bad boy sits behind me beneath my shelves.

As is the traditional rite of passage, I joined the club with the Hello World of 3D models: my very own 3DBenchy!

No longer do I need to go all the way to the hackspace on the other side of town. Watch this space!

Neigh-bours

You often need supports for plants to grow along, which is sometimes a bamboo stick. Well, how about we grow our own?

Bamboo is the fastest growing plant in the world I believe, so it will be interesting to document this. But don't be mistaken -- this is not bamboo! It's Rough Horsetail and I got it from a car boot sale, along with other neat things. However, it's quite similar and, like bamboo, it's an invasive species, can aggressively spread into neighbouring gardens, and be quite difficult to permanently remove. So I'll be growing it in pots either way for my permanent supply of sticks!

A pot

Tree distraught.

Not feeling so hot.

Wind tipped it, not caught.

Tree needs a real plot.

A pot!

A pot I sought.

A pot I got.

Filled with soil I'd bought.

Lo! Size I forgot.

The space is a lot,

but stay small it will not.

"Tree will thrive", so I thought.

Breaking ground

Every morning, I eat a green Granny Smith apple. This is not only my favourite apple, but probably the only apple I actually like. It would be amazing if I could do this self-sufficiently, 365 apples a year!

I'm delighted to announce that, as of a couple days ago, I'm the proud owner of a Granny Smith apple tree from an orchard in Norfolk.

My variety is a "mini" tree that grows to 180cm (so as tall as me) from the M27 stock, which is ideal for a small garden like mine and can be grown in a pot too. I also got a bunch of pots and soil for this and other exciting things I'll write about soon!

Greenhouse effect

I moved houses recently. The new place has a conservatory and an outside space. I wouldn't quite call it a garden, as it's paved, however I do intend to get some flower beds for it. I think this will be great for my horticultural endeavours.

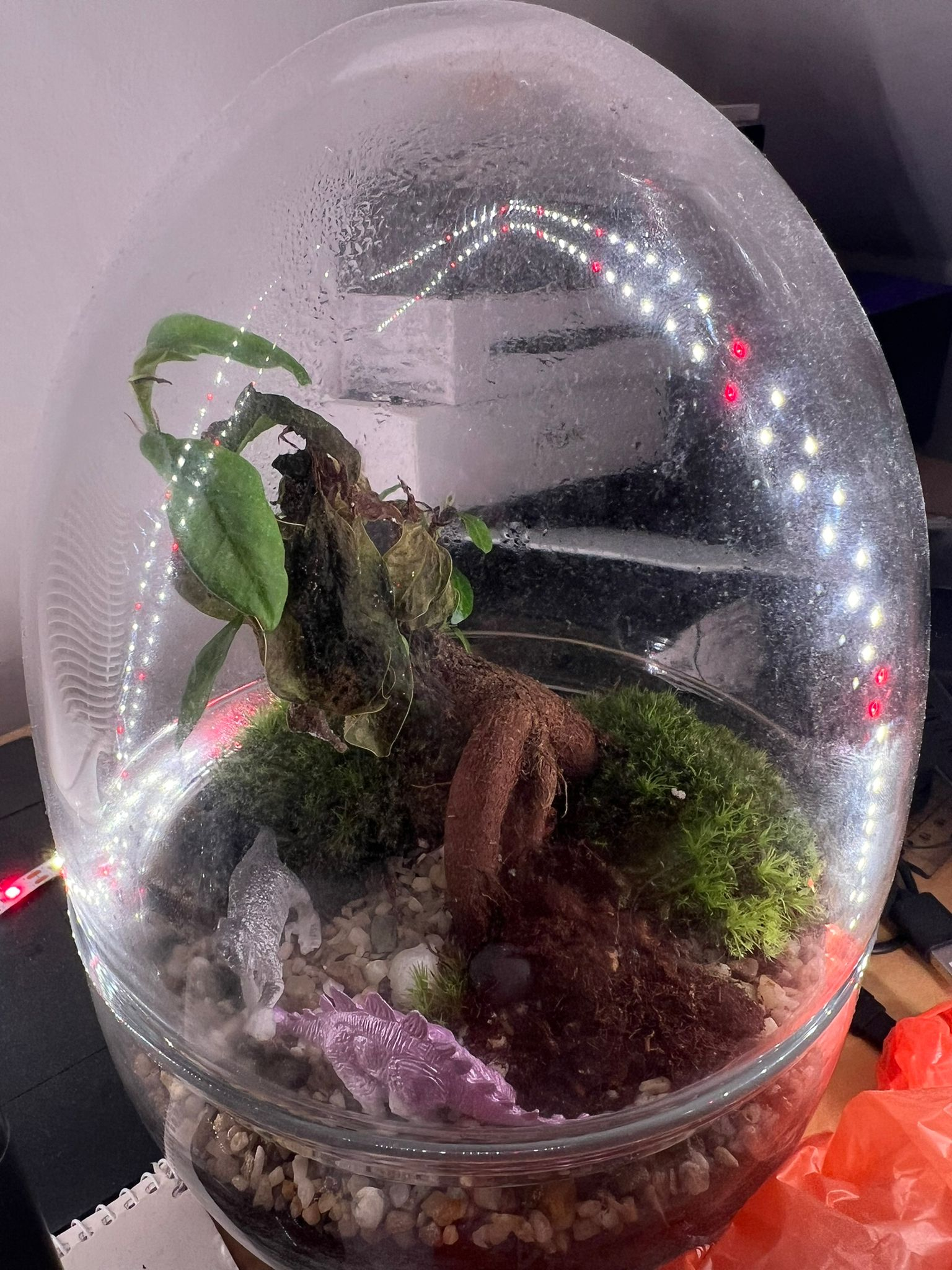

The most sensitive items I carried over to the new place in my arms. The first time was with the Luton van drivers in the front, where I carried my bonsai plant. The second time was the final trip over, where I carried Jinn and the ant colony.

My bonsai plant had made it in one piece, and I was glad. However, I then made the mistake of putting it in the conservatory. I did not realise just how hot it got in there. The next day, I found that the leaves on my bonsai plant had gone completely brown and shrivelled.

The glass was extremely hot to the touch -- almost scalding. I realised I'd essentially cooked the plant. The moss had turned white. I immediately moved it to the cooler kitchen and rehydrated it by adding water.

I think it can bounce back over time, but this definitely is a setback. I'm very glad I didn't move the ants there.

The only printer in Gatwick Airport

If you ask anyone who works at Gatwick Airport, including the information desk, they'll tell you there's nowhere where you can get something printed there. I'm writing this post to inform the internet that that's not true. If you want to know where the printer is, skip to the end. Otherwise, here's my story.

I normally travel to Egypt without a visa, as I'm able to convince the border control that I was once Egyptian. People of Egyptian origin don't need a visa in theory, although sometimes an officer will insist that you still do, and you need to talk your way out of it. They like to power trip, so you need to be quite friendly and smooth so they can let you through "just this once, but get the visa next time". This can be quite stressful actually, but I'm just too cheap to pay for a visa.

The way I can convince the border control is:

- I have loads of Egypt stamps in my passport, and no visa anywhere in it, so there's precedent that I got in before without one

- I speak Egyptian Arabic and drop in some local slang (though if I talk too much it becomes obvious it's not my first language, so gotta balance it)

- I have a printed copy of a photo of my birth certificate. This is quite old (hand-written) and there are a lot of bureaucratic blockers to getting it renewed to the computerised one, but it helps to show

Without that third bit of evidence, my case is quite hard to prove. I also can't just show it on my phone -- having it printed adds to the legitimacy. Not too long ago, I found myself at Gatwick airport going to Egypt, and realised I had forgotten that physical copy. I only had the WhatsApp image my mother had sent me long ago. I needed a printer.

I was early for my flight so I went and asked the information desk, to no avail. They said there's nowhere that could have a printer that travellers could use. I didn't believe them, so I first went and checked the Boots and the WH Smith etc. You never know what store might have a printer and charitable staff, especially a pharmacy. But unfortunately no dice.

Then I thought that the most obvious next place must be the airport lounges; surely they must have a business centre with a printer? I was flying economy, and not about to pay for lounge access, but I thought I'd try asking staff again to just let me in to print one thing and leave. Unfortunately, it seemed like literally none of the lounges had that!

As I was about to give up, I approached the final lounge in the upstairs of the lounge area. I asked the man at the counter if they have printers, and he said that they offer a printing service, for exactly one pound. Music to my ears! I left the queue and emailed him the image, and he printed it right there and then. It was all stretched and cut off, so I asked him to do it again and helped him get the print settings right (he didn't charge me the second time).

With my document in hand, I went back to the info desk and when they saw me approach with that sheet of paper in hand they were in shock! They said so many people ask them this and they always say that there's nowhere to print, so they were very grateful that I could update their information on this. Never give up until you've turned over every stone!

So where is the printer in Gatwick Airport? It's at the counter of Plaza Premium Lounge. I even found a photo online -- it's behind the counter where I put the red rectangle!

Build smaller agents that ask for help

Disclaimer: I originally wrote this post a few months ago on a flight, and decided not to publish it at the time. I wrote it because I have a rule that when 3 or more people ask me the same question, I write my answer down. I've now changed my mind and decided to publish, but things move fast in this space, so bear that in mind.

More and more the topic of Agents with unlimited tools comes up. It came up again as Anthropic is trying to set a standard for interfaces to tools through MCP. It has come up enough times now that I thought I'd write down my thoughts, so I can send this to people the next time it comes up. I actually think MCP is quite a badly designed protocol, but this post will not be about that. Instead, we'll go a bit more high-level.

What is an agent?

In the context of LLMs, it's a prompt or loop of prompts with access to tools (e.g. a function that checks the weather). Usually this looping is to give it a way to reason about what tools to use and continue acting based on the results of these tools.

I would therefore break down the things an agent needs to be able to do into 3+1 steps:

- Reason about which tools to use given a task

- Reason about what parameters to pass to those tools

- Call the tools with those parameters and optionally splice the results into the prompt or follow-up prompt

- Rinse and repeat for as many times as are necessary

For example, an agent might have access to a weather function, a contact list function, and an email function. I ask "send today's weather to Bob". It reasons that it must first query the weather and the contact list, then using the results from those, it calls the email function with a generated message.
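As a rough sketch of the steps above, here is a toy version of that loop in Python. Everything in it is hypothetical: the three tool functions are stubs, the city is made up, and `plan` stands in for the LLM's reasoning (steps 1 and 2) with a hard-coded plan that a real agent would ask the model to produce.

```python
from __future__ import annotations

from typing import Callable

# Hypothetical stub tools; a real agent would call actual services here.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"

def find_contact(name: str) -> str:
    return f"{name.lower()}@example.com"

def send_email(to: str, body: str) -> str:
    return f"Sent to {to}: {body}"

TOOLS: dict[str, Callable[..., str]] = {
    "get_weather": get_weather,
    "find_contact": find_contact,
    "send_email": send_email,
}

def plan(task: str, results: dict[str, str]) -> tuple[str, dict[str, str]] | None:
    """Stand-in for the LLM: choose the next tool and its parameters."""
    if "get_weather" not in results:
        return "get_weather", {"city": "London"}  # assumed city
    if "find_contact" not in results:
        return "find_contact", {"name": "Bob"}
    if "send_email" not in results:
        return "send_email", {
            "to": results["find_contact"],
            "body": results["get_weather"],
        }
    return None  # nothing left to do

def run_agent(task: str) -> dict[str, str]:
    results: dict[str, str] = {}
    # Step 4: rinse and repeat until the planner has nothing left to call.
    while (step := plan(task, results)) is not None:
        tool_name, params = step
        # Step 3: call the tool and make its result available to the next round.
        results[tool_name] = TOOLS[tool_name](**params)
    return results

results = run_agent("send today's weather to Bob")
print(results["send_email"])
```

The interesting (and hard) part is entirely inside `plan`; the loop around it is trivial.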

Why can't this scale?

The short answer is: step #1. A human analogue might be analysis paralysis. If you have one tool, you only need to decide if you should use it or not (let's ignore repeat usage of the same tool or else the possible combinations are infinite). If you have two, that decision is A, B, AB, BA. The combinations explode factorially as the number of tools increases.
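To put numbers on that, here is a small sketch that counts the ordered sequences of distinct tools an agent could pick, under the same simplification (no tool used twice, and "use nothing" not counted). The function name is my own.

```python
from math import perm

def tool_sequences(n_tools: int) -> int:
    """Count ordered sequences of 1..n distinct tools (no repeats)."""
    return sum(perm(n_tools, k) for k in range(1, n_tools + 1))

for n in (1, 2, 3, 5, 10):
    print(n, tool_sequences(n))
```

With 2 tools this gives 4 (A, B, AB, BA). With 10 tools it is already 9,864,100.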

Another analogue is Chekhov's gun. This is sort of like the reverse of the saying "if all you have is a hammer, everything looks like a nail". Agents have a proclivity to use certain tools by virtue of their mere existence. Have you ever had to ask GPT to do something but "do not search the internet"? Then you'll know what I mean.

Not to mention the huge attack surface you create with big tool libraries and data sources through indirect prompt injection and other means. These attacks are impossible to stop except with the brute force of very large eval datasets.

A common approach to mitigate these issues is to first have a step where you filter for only the relevant tools for a task (this can be an embedding search) and then give the agent access to only a subset of those tools. It's like composing a specialist agent on the fly.
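Here is a minimal sketch of that filtering step. To keep it self-contained, a crude bag-of-words vector stands in for a real embedding model, and the tool names and descriptions are invented for illustration.

```python
import math
import re
from collections import Counter

# Hypothetical tool library: name -> description used for the search.
TOOL_DESCRIPTIONS = {
    "get_weather": "look up today's weather forecast for a city",
    "find_contact": "look up a person's email address in the contact list",
    "send_email": "send an email message to an address",
    "book_flight": "book a flight between two airports",
}

def embed(text: str) -> Counter:
    """Stand-in for an embedding model: plain word counts."""
    return Counter(re.findall(r"[a-z']+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0

def select_tools(task: str, k: int = 2) -> list:
    """Return the k tools whose descriptions look most similar to the task."""
    query = embed(task)
    ranked = sorted(
        TOOL_DESCRIPTIONS,
        key=lambda name: cosine(query, embed(TOOL_DESCRIPTIONS[name])),
        reverse=True,
    )
    return ranked[:k]

selected = select_tools("send today's weather to Bob", k=2)
print(selected)
```

In this toy setup the search picks the weather and email tools, but the contact list tool scores zero because the task never mentions contacts. Real embeddings are smarter than word overlap, but the agent can still only use what the search hands it.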

This is not how humans think. Humans break tasks down into more and more granular nested pieces. Their functions unfold to include even more reasoning and even more functions; they don't start at the "leaf" of the reasoning tree. You don't assemble your final sculpture from individual Legos, you perform a functional decomposition first.

Not just that, but you're constraining any creativity in the reasoning of how to handle a task. There's also more that can go wrong -- how do I know that my tool search pass for the above query will bring back the contacts list tool? I would need to annotate my tools with information on possible use cases that I might not yet know about, or generate hypothetical tools (cf HyDE) to try and improve the search.

How can I possibly know that my weather querying tool could be part of not just a bigger orchestra, but potentially completely different symphonies? It could be part of a shipment planning task (itself part of a bigger task) or making a sales email start with "Hope you're enjoying the nice weather in Brighton!". Perhaps both of these are simultaneously used in an "increase revenue" task.

Let's take a step back...

Why would you even want these agents with unlimited tools in the first place? People will say it's so that their agents can be smarter and do more. But what do they actually want them to do?

The crux of the issue is in the generality of the task, not the reasoning of which tools help you achieve that task. Agents that perform better (not that any are that good mind you) will have more prompting to break down the problem into simpler ones (incl CoT) and create a high level strategy.

Agents that perform the best are specialised to a very narrow task. Just like in a business, a generalist solo founder might be able to do ok at most business functions, but really you want a team that can specialise and complement each other.

What then?

Agents with access to infinite tools are hard to evaluate and therefore hard to improve. Specialist agents are not. In many cases, these agents could be mostly traditional software, with the LLM merely deciding if a single function should be called or not, and with what parameters.

There's a much more intuitive affordance here that we can use: the hierarchical organisation! What does a CEO do when they want a product built? They communicate with their Head of Product and Head of Engineering perhaps. They do not need to think about any fundamental functions like writing some code. Even the Head of Engineering will delegate to someone else who decomposes the problem down further and further.

The number of decisions that one person needs to make is constrained quite naturally, just as it is for agents. You can't have a completely flat company with thousands of employees that you pick a few out ad-hoc for any given task.

Why is this easier?

The hardest part about building complicated automation with AI is defining what right and wrong behaviour is. With LLMs, this can sometimes come down to the right prompt. Writing the playbook for how a Head of Engineering should behave is much easier if you ask a human Head of Engineering, than it is to define the behaviour of this omniscient octopus agent with access to every tool. Who're you going to ask, God?

The administration or routing job should be separate from the executor job. It's a different task so should have its own agent. This is kind of similar to a level down with Mixture of Experts models -- there's a gate network or router that is responsible for figuring out which tokens go to which expert. So why don't we do something similar at the agent level? Why go for a single omniscient expert?
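A minimal sketch of that separation, with made-up specialists: `route` only decides who should handle a task, and the specialists only execute. The keyword matching is a stand-in for what would really be an LLM call, and the default choice is arbitrary.

```python
from __future__ import annotations

from typing import Callable

Agent = Callable[[str], str]  # plain text in, plain text out

# Hypothetical specialist executors.
def engineering_agent(task: str) -> str:
    return f"[engineering] built: {task}"

def product_agent(task: str) -> str:
    return f"[product] specified: {task}"

SPECIALISTS: dict[str, Agent] = {
    "engineering": engineering_agent,
    "product": product_agent,
}

def route(task: str) -> str:
    """Routing job only: pick a specialist, do none of the work."""
    keywords = {
        "engineering": ("build", "fix", "deploy"),
        "product": ("spec", "roadmap", "research"),
    }
    lowered = task.lower()
    for name, words in keywords.items():
        if any(word in lowered for word in words):
            return name
    return "product"  # arbitrary default for this sketch

def handle(task: str) -> str:
    return SPECIALISTS[route(task)](task)

print(handle("Build the login page"))
```

Because the router and the executors are separate, each one can be evaluated (and improved) on its own narrow job.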

What if you're building a fusion reactor?

You cannot build a particle physicist agent without an actual particle physicist to align it. So what do you do? Why would a particle physicist come to you, help you build this agent, and make themselves redundant?

They don't need to! Just as when you hire someone, you don't suddenly own their brain, similarly the physicist can encode their knowledge into an agent (or system of agents) and your organisation can hire them. They own their IP.

At an architectural level, these agents are inserted at the right layer of your organisation and speak the same language on this inter-agent message bus.

The language: plain text

We've established why Anthropic has got it wrong. A Zapier/Fivetran for tools is useless. That's not the hard part. Interface standards should not be at the function level, but at the communication level. And what better standard than plain text? That's what the LLMs are good at, natural language! What does this remind you of? The Unix Philosophy!

Write programs that do one thing and do it well. Write programs to work together. Write programs that handle text streams, because that is a universal interface.

How about we replace "programs" with "agents"? And guess what: humans are compatible with plain text too! An agent can send a plain text chat message, email, or dashboard notification to a human just as it can another agent. So that physicist could totally be a human in an AI world. Or perhaps the AI wants to hire a captcha solver? Let's leave that decision to our Recruiter agent -- it was aligned by a human recruiter so it knows what it's doing.
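As a sketch of what "plain text as the universal interface" could look like, here every worker is just a function from text to text, so an agent and a human are interchangeable in the chain. All names are hypothetical, and the human is simulated by an inbox list; in practice their reply would arrive asynchronously.

```python
from __future__ import annotations

from functools import reduce
from typing import Callable

Worker = Callable[[str], str]  # the whole interface: text in, text out

human_inbox: list[str] = []  # stand-in for email, chat, or a dashboard

def research_agent(message: str) -> str:
    return message + "\nResearch notes: (stubbed)"

def human_physicist(message: str) -> str:
    # A human receives the same plain text an agent would.
    human_inbox.append(message)
    return message + "\nPhysicist: reviewed"

def pipeline(*workers: Worker) -> Worker:
    """Chain workers like a Unix pipe: each one's output feeds the next."""
    return lambda message: reduce(lambda text, worker: worker(text), workers, message)

output = pipeline(research_agent, human_physicist)("Design a reactor")
print(output)
```

Swapping `human_physicist` for an agent later changes nothing about the rest of the chain.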

A society of agents

I'm not about to suggest that we take the hierarchy all the way up to President. Rather, I think there's an interesting space, similar to the "labour pool" above, for autonomous B2B commerce between agents. I haven't been able to get this idea out of my head ever since I read Accelerando, one of the few fiction books I've read several times. In it, you have autonomous corporations performing complicated manoeuvres at the speed of light.

I can see a glimpse of this at the global "message bus" layer of interaction above the top-level agent, with other top-level agents. If you blur the lines between employment and professional services, you can imagine a supplier sitting in your org chart, or you can imagine them external to your org, with a contact person communicating with them (again, not by API but by e.g. email).

Conclusion

For this space of general tasks like "build me a profitable business", the biggest problem an agent faces is the "how". The quality of the reasoning degrades as the tasks become broader. By allowing as many agents as needed to break the problem down for us, we limit the amount of reasoning an agent needs to do at any given point. This is why we should anthropomorphise AI agents more -- they're like us!

Bus Factor 2

When I was in uni, one of my engineering lecturers would grade us based on the quality of our lab books. He would insist that it is vital you write down everything you do such that if you were hit by a bus, a competent engineer could inherit your lab book and seamlessly pick up where you left off. He taught us the term "Bus Factor", which is the number of engineers that would have to disappear for a project to stall. When you work on a project solo, you're a single point of failure, so your lab book should act as the vehicle for knowledge transfer. The higher the bus factor, the better!

Later, in my journey as a founder, I came across Reed Hastings' thoughts on team building at Netflix. To maintain a high talent density, the average talent should only go up with each addition to your team, which in turn attracts even more talented people who want to be surrounded by the best. Reed coined the "Keeper Test" where you ask yourself if you would fight to keep a team member leaving to go to another job. I instead consider this loosely equivalent to imagining how screwed you'd be if a member of your team got hit by a bus. If it would be a disaster, they pass the bar and should be retained. If not, they should be working elsewhere; you're a professional sports team, not a family.

I agree with both philosophies, but you can see how they would be difficult to reconcile. Do you build a resilient team, or one with zero redundancy? Surely a team of irreplaceable players is a fragile one?

As always the optimum lies somewhere in the middle. You do not want to build a culture where nobody can take a holiday without the company grinding to a halt. And similarly, you do not want to have fungible members on your team warming the bench.

I believe the way out of this conundrum is to have overlapping secondary skills. If your startup is small (<10 people) the magic number is Bus Factor 2. At any given point in time, two people should be able to perform the same role in your business. This doesn't mean hire like you're Noah, but rather make sure that every person on your team has a backup (in skill if not capacity). It's a continuous process; keep others filled in on what you're doing and write clear documentation that can fill them in more in your absence. If possible, whenever you name a project owner, also name their backup or shadow.

The backup might not be as good (e.g. maybe a back-end dev can do a bit of front-end if needed) but this at least means that tasks under a certain role won't be hard blocked the day the primary team member is unavailable. It's ok if it hurts to have your defender play goalie, that means the goalie passes the keeper test (I swear no pun intended!).
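If you want to sanity-check your own team against this rule, a tiny sketch like the following would do. It treats the bus factor as the smallest number of people covering any single role, since losing all of them stalls that role. The team and roles are invented.

```python
from __future__ import annotations

def bus_factor(coverage: dict[str, set[str]]) -> int:
    """Smallest number of people who can cover any one role."""
    return min(len(people) for people in coverage.values())

def roles_needing_backup(coverage: dict[str, set[str]], target: int = 2) -> list[str]:
    """Roles covered by fewer than `target` people (primary or backup)."""
    return sorted(role for role, people in coverage.items() if len(people) < target)

# Hypothetical team: role -> everyone who could do it in a pinch.
team = {
    "back-end": {"Ana", "Ben"},
    "front-end": {"Cara", "Ben"},
    "sales": {"Dev"},
}

print(bus_factor(team))
print(roles_needing_backup(team))
```

Here Ben is the back-end dev who can do a bit of front-end, and sales is the role that would be hard blocked the day Dev is unavailable.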

Hire T-shaped people in a startup as their generalism will allow your business to still run smoothly without a full complement temporarily, like a melted RAID array before you save it. But nobody should ever be twiddling their thumbs because they're focusing on their spike and not a spare wheel -- never have two goalies! Or worse: a goalie that's worse at being a goalie than your defender.

With that out of the way, time for some pontification! How does this relate to making customers deeply dependent on your product or service? Superficially, this sounds like a good thing: job security and building something people need! Notice I said "need" and not "want". On reflection, taken at face value, this leads to perverse incentives.

On a macro scale, this is how you get monopolies and highly inelastic goods/services. In the long term, this leads to technological stagnation and inefficiency, and in the short term businesses can engage in price gouging and other unethical practices. No competition, no innovation.

On a micro scale, I want to provide goods/services that people buy because it's truly their best option, not because they're forced to. Just as I wouldn't want to sell something because I had no other option and needed the money. I also want to have the option to cut a bad-fit client/customer loose without the moral weight of condemning them to bankruptcy. The power balance between buyer and seller should be such that a genuinely fair arrangement/price can be reached. The market usually does a good job of making things fair.

You can see how this relates to employees; you're still just exchanging money for services. If you cannot afford to lose your job and have no union, your employer can exploit you. If your company cannot survive without a key person, the power dynamic is reversed. Even if the relationship is amicable, imagine not being able to leave a struggling startup for greener pastures because you know that doing so would send it to the grave along with the livelihoods of the rest of the team. Or creating busywork to avoid firing your friends. Not a nice spot to be in, so try and keep the power balanced!

By giving customers an easy way to migrate off of your product/service, not only will they trust you more, but you'll know that they're staying with you for the right reasons and you're actually giving them something they want. If you try and lock them in with dirty tactics (e.g. a Walled Garden ecosystem, predatory contracts, high migration or knowledge transfer cost, etc) you will fail in the long term, because they'll jump ship the moment they can elope with a less toxic spouse. This is how incumbents get killed.

It's not enough to avoid these tactics, you also need to take steps to allow them to stop working with you if they need to. E.g. the ability to export their data in a standard format, or well-written documentation on what you've built for them.

As always, independence is a virtue not just for yourself, but to grant to others as well.

Advice on applying for UK citizenship

Towards the end of October 2024, my application for UK citizenship was approved. I first moved to the UK 14 years before that, in October 2010. I didn't stay in the UK permanently since that date, but overall I've probably spent more time in the UK than anywhere else.

Me at the ceremony with the Honorary Alderman

Arguably I didn't really need UK citizenship. I already had Settled Status under the EU Settlement Scheme, having lived in the UK before Brexit, which meant I already had most of the privileges of being a UK citizen (besides voting in parliamentary elections) provided I don't leave the UK for too long.

In fact, once I was actually able to apply by UK law, I was no longer able to apply by German law. German law stipulated that you cannot have dual nationality with a non-EU country (barring exceptional circumstances), and I didn't qualify pre-Brexit. This is why I had to give up my Egyptian citizenship to get the German one. But in June 2024, that law changed.

Do it as soon as you can

The first thing you'll find is that it's expensive!! Put aside £2k for this whole exercise. On paper, the most expensive part is the actual application, which was just over £1.6k when I applied, up from £1.2k the year before, and it seems to be rapidly increasing every year. You can get away with not paying much more for the remaining parts:

- £50 for the test attempt that you pass the first time

- £10 for passport photos

- £95 for passport application

- £5 for postage-related stuff

- £10 to commute to different places

- £0 if you pick a bad biometrics and ceremony slot (but you may need to take time off work, so there's an opportunity cost)

You probably don't need to buy the Life in the UK books. If you're an immigrant like I am, you probably have immigrant friends, and it's extremely likely that one of them already has the books from a previous year. I got mine for free that way and then gave them to the next person for free.

You're not allowed to take photos at the citizenship ceremony (though we snuck a few), but they have a professional photographer you can pay. In my opinion, these photos are nice and you should pay for them. I think you want to put another £100 or so aside for that.

Do not pay for a private ceremony, it's an absolute farce, just do the public group ceremonies like the rest of us. Feel like one of the people!

So the sooner you do this, the less things will cost. In my opinion, it's worth it, as a passport is a powerful thing, and you ideally want to have your eggs in many baskets and to not be beholden to a single government.

Study enough for the test

People get really hung up about the Life in the UK test. I know it's stupid, but it's a necessary medicine you need to swallow, so just do it and stop complaining. It's really not that big of a deal. It's more inconvenient to have to find the time to commute to a testing centre in the middle of nowhere.

Remember that you only need to get 75%, this is not a university entrance exam, so don't over-egg it. You don't need the latest editions of the books and study guide, use a set from the year before. The changes are minimal (in mine, the only major difference was that the queen had died since it was published).

Read the books once back-to-back, then do practice questions. I used this app (unofficial, free) to practice questions. There are many like it. I already do Duolingo daily, so I habit chained doing at least one test per day with these. Very quickly you start remembering the dates and the tricky questions.

Do not try to memorise all the dates and kings and poets etc. For me this was the most difficult part, as I don't have good rote memorisation skills, and the questions are often misleading (e.g. 1814 or 1815 or 1915 or 1951) so you would have to rely on accurate mnemonics.

The trick is that you only need 75%, so just memorise the easy stuff. You get 45 minutes in the exam, but I was out in 4 minutes. The reason is that multiple-choice tests lend themselves well to exam strategies. What I did was first answer all the questions I was confident about (you can skip questions and come back to them later). These were the ones asking if forced marriage is allowed etc. I skipped the ones that I struggle to remember, like how many seats there are in the Scottish parliament.

Then, I counted the ones I answered, and divided it by the total number of questions. It was over 75%. So I guessed the rest and walked out. 10 minutes later, I got the email saying I passed.
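The arithmetic behind that strategy fits in a few lines. This sketch assumes a 24-question test (that number is my assumption here, not something stated above) and, crucially, that the answers you felt confident about are actually right.

```python
import math

def marks_needed(total_questions: int, pass_mark: float = 0.75) -> int:
    """Minimum number of correct answers required to pass."""
    return math.ceil(total_questions * pass_mark)

def can_guess_the_rest(confident_answers: int, total_questions: int,
                       pass_mark: float = 0.75) -> bool:
    """True if the confident answers alone already clear the pass mark."""
    return confident_answers >= marks_needed(total_questions, pass_mark)

print(marks_needed(24))
print(can_guess_the_rest(19, 24))
```

Any lucky guesses on the remaining questions are then just a buffer against the odd confident answer being wrong.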

I was also prepared to fail this exam, as spending another £50 a few weeks later to retry was less hassle for me than to study harder with diminishing returns, but that may be different for you if £50 is hard to come by.

Have British friends

You need 2 people who have known you for 3 years to agree to be your referees. I found this quite awkward as I don't like asking people to do me a favour like this. While they both need to be UK residents, I think only one of them needs to be British (I got 2 Brits just in case). It also made me realise just how many of my long-term friends are not British. Make sure you have British friends.

The instructions they gave for the form are extremely silly. I needed to print and bring the physical form to my friends to have them sign the underside of the passport photo before I glue it etc. It doesn't make sense. Yes it's just more hurdles. But I found that it's actually ok to half-ass this.

When you get to the biometrics appointment, they don't care about any of that. They'll scan the form and not check anything. You might as well do the whole thing digitally and print it.

If you can, pick friends who haven't moved a lot, to make things easier for them, as you need a lot of personal information from them (e.g. their address history). Go through the whole form first and ask them for everything all at once, once they've agreed, as it's embarrassing to have to go back and be like "sorry, I also need X".

My friends have said they have not received any phone calls regarding me. So it's likely just yet another hoop to jump through.

Application shortcuts

I've heard that applications get rejected if your travel history is not accurate. I don't know if this is true, but it hasn't happened to me. I got a bit concerned after mine took longer than I expected, so I called to ask about it, but they said that there can be a lot of variance in the turnaround time for applications.

However, if you travel as much as I do, it would be a nightmare to have to figure out all the times you left the country for more than 2 days. There's a shortcut! Request the data from the Home Office here. Give them all your passport numbers, and in a few weeks (sometimes longer, but I was lucky), they'll send you a PDF with every single flight you took in and out of the country.

All I had to do then was go through the list and copy it into the form. You need to put a reason -- I think it's enough granularity to say "business" or "vacation", but I wrote things like "visiting family". It was fortunately quite easy for me to remember what the reason was based on the country.

This is how you shorten the most time-consuming part of the application. After that, just answer the remaining questions sensibly.

Passport application

This one can be a bit annoying, as you have to send them physical original copies of everything. They will send it back with Royal Mail who absolutely do not care about your stuff and will wreck it. Since I wanted them to include my Dr title in the passport, I had to ask my mum to send me my original degree certificate from Germany. Spoiler alert: they don't put it as a title like in German passports, they simply type on the opposite page (in an amateurish way) "The holder of this passport has the title of doctor" or something like that.

Anyway, get your padded envelope and send everything off with first class delivery. They'll send it back in a flimsy envelope. In mine, they didn't send back the cardboard I had put in, nor the original passport photos, nor some extra bits (e.g. I had included the original envelope of the naturalisation certificate which contained some welcome docs. Gone). I didn't mind as I got all the important stuff back undamaged.

Veronica wasn't so lucky and they crumpled her naturalisation certificate when she did it. That document is quite expensive to replace, and easy to void, so she complained and fortunately eventually they agreed to replace it for her. It's really quite a hassle though, so if you can, put a cardboard envelope inside the padded envelope you send, and if you're lucky they'll send it back with it. I even put instructions on post-it notes inside, but I think they're probably just very careless at the passport office.

If they damage your naturalisation certificate in any way, complain loudly. Call them and tell them that you need it replaced. Channel your inner Karen.

For the passport photos, there are booths everywhere in tube stations, just go to one with a curtain and good lighting. Some of them have an adjustable stool -- those are the best so you don't have to contort yourself.

Alien invader prongs

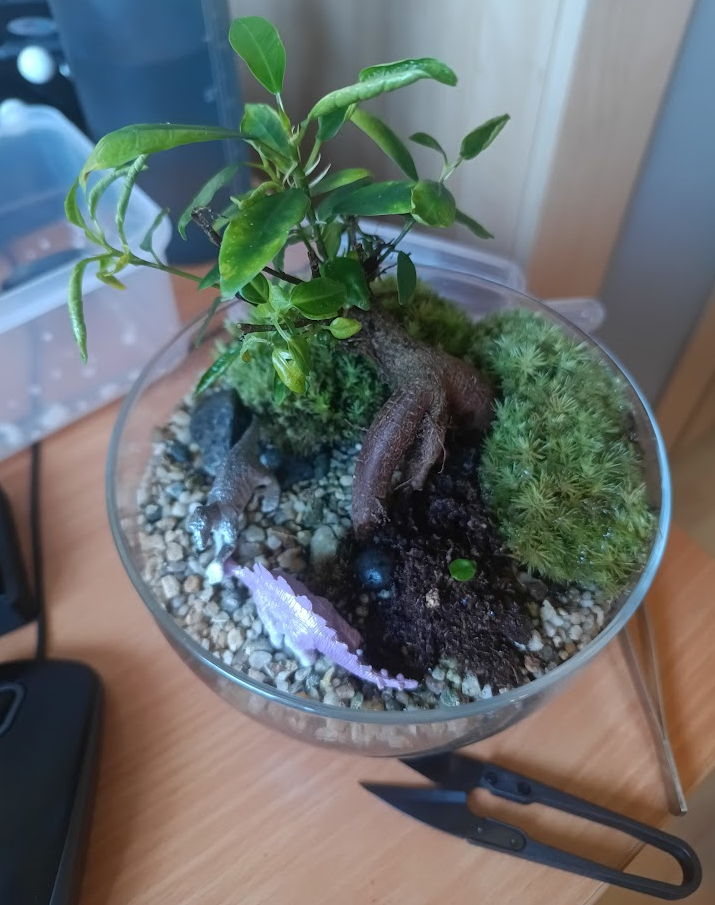

The ficus has been a bit thirsty, as you can tell by the leaves that have shrivelled slightly:

So I thought it's high time to first clean up the dead leaves, and also add water to the terrarium. The moss too seemed to be very dry (and I trimmed some of the long freaky moss prongs). I accidentally cut a little leaf, so I put it back into the soil, however it seems to be immune to decomposition:

This was all mid-September, and I was a tiny bit freaked out by these additional weird prongs:

Then, a little over a week ago, it started looking even more alien. The leaves were ok now (some browning?) but now these prongs looked sort of fungal. Don't like it.

A photo from today; that alien prong has grown further and is reaching the ground. I think I need to snip it before it consumes the terrarium!

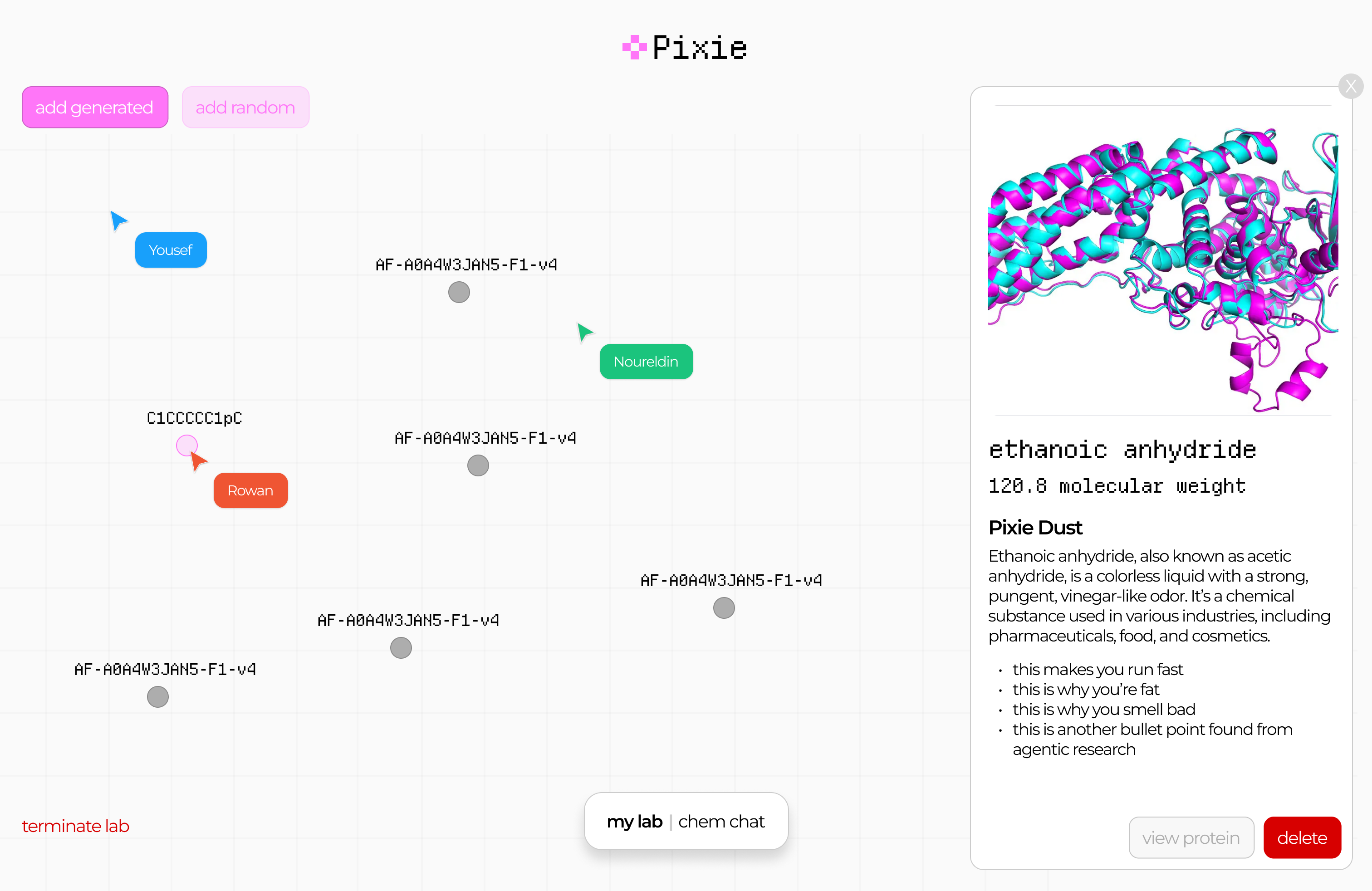

Pixie: Entry to Mistral x a16z Hackathon

This post documents my participation in a hackathon 2 weeks ago. I started writing this a week ago and finished it now, so it's a little mixed. Scroll to the bottom for the final result!

Rowan reached out asking if I'm going to the hackathon, and I wasn't sure which one he meant. Although I submitted a project to the recent Gemini hackathon, it was more of an excuse to migrate an expensive GPT-based project ($100s per month) to Gemini (worse, but I had free credits). I never really join these things to actually compete, and there was no chance a project like that could win anyway. What's the opposite of a shoo-in? A shoo-out?

So it turns out Rowan meant the Mistral x a16z hackathon. This was more of a traditional "weekend crunch" type of hackathon. I felt like my days of pizza and redbull all-nighters are long in the past by at least a decade, but I thought it might be fun to check out that scene and work on something with Rowan and Nour. It also looked like a lot of people I know are going. So we applied and got accepted. It seems like this is another one where they only let seasoned developers in, as some of my less technical friends told me they did not get in.

Anyway, we rock up there with zero clue on what to build. Rowan wants to win, so is strategising on how we can optimise by the judging criteria, researching the background of the judges (that we know about), and using the sponsors' tech. I nabbed us a really nice corner in the "Monitor Room" and we spent a bit of time snacking and chatting to people. The monitors weren't that great for working (TV monitors, awful latency) but the area was nice.

Since my backup was a pair of XReal glasses, a lot of people approached me out of curiosity, and I spent a lot of time chatting about the ergonomics of it, instead of hacking. I also ran into friends I didn't know would be there: Martin (Krew CTO and winner of the Anthropic hackathon) was there, but not working on anything, just chilling. He intro'd me to some other people. Rod (AI professor / influencer, Cura advisor) was also there to document and filmed us with a small, really intriguing looking camera.

We eventually landed on gamifying drug discovery. I should caveat that what we planned to build (and eventually built) has very little scientific merit, but that's the goal of a hackathon; you're building for the sake of building and learning new tools etc. Roughly-speaking, we split up the work as follows: I built the model and some endpoints (and integrated a lib for visualising molecules), Nour did the heavy-lifting on frontend and deploying, and Rowan did product design, demo video, pitch, anything involving comms (including getting us SSH access to a server with a nice and roomy H100), and also integrated the Brave API to get info about a molecule (Brave was one of the sponsors).

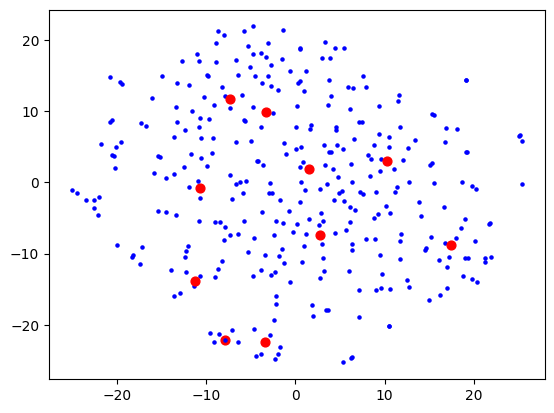

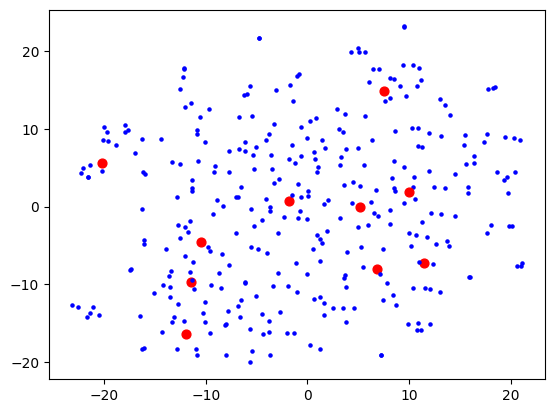

You're probably wondering what we actually built though. Well, after some false starts around looking at protein folding, I did some quick research on drug discovery (aided by some coincidental prior knowledge I had about this space). There's a string format for representing molecules called SMILES which is pretty human-readable and pervasive. I downloaded a dataset of 16k drug molecules, and bodged together a model that will generate new ones. It's just multi-shot (no fine-tuning, even though the judges might have been looking for that, as I was pretty certain that would not improve the model at all) and some checking afterwards that the molecule is physically possible (I try 10 times to generate one per call, which is usually overkill). I also get some metadata from a government API about molecules.
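The "try up to 10 times, keep the first plausible molecule" part can be sketched roughly like this. It is not the hackathon code: the generator is a stub standing in for the model call, and the validity check only looks at balanced parentheses, where a real check would parse the SMILES string with a chemistry toolkit.

```python
from typing import Callable, Optional

def parentheses_balanced(smiles: str) -> bool:
    """Crude stand-in for a real validity check on a SMILES string."""
    depth = 0
    for char in smiles:
        if char == "(":
            depth += 1
        elif char == ")":
            depth -= 1
            if depth < 0:
                return False
    return depth == 0

def generate_valid(generate: Callable[[], str],
                   is_valid: Callable[[str], bool],
                   max_attempts: int = 10) -> Optional[str]:
    """Call the generator until it yields a valid candidate or attempts run out."""
    for _ in range(max_attempts):
        candidate = generate()
        if is_valid(candidate):
            return candidate
    return None

# Stub "model" that returns two broken strings before a valid one (acetic acid).
candidates = iter(["CC(=O", "C(C", "CC(=O)O"])
molecule = generate_valid(lambda: next(candidates), parentheses_balanced)
print(molecule)
```

Returning `None` after the last attempt lets the endpoint decide what to do when the model never produces anything usable.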

On the H100, I ran one of those Stable Diffusion LoRAs. It takes a diagram of a molecule (easy to generate from a SMILES string) and then does img2img on it. No prompt, so it usually dreams up a picture of glasses or jewellery. We thought this was kind of interesting, so left it in. We could sort of justify it as a mnemonic device for education.

Finally, I added another endpoint that takes two molecules and creates a new one out of the combination of the two. This was for the "synthesise" journey, and was inspired by those games where you combine elements to form new things.

Throughout the hackathon, we communicated over Discord, even though on the Saturday, Rowan and I sat next to each other. Nour was in Coventry throughout. It was actually easier to talk over Discord, even with Rowan, as it was a bit noisy there. Towards the end of Saturday, my age started to show and I decided to sleep in my own bed at home. Rowan stayed at the hackathon place, but we did do some work late into the night together remotely, while Nour was offline. The next day, I was quite busy, so Rowan and Nour tied up the rest of the work, while I only did some minor support with deployment (literally via SSH on my phone as I was out).

Finally, Rowan submitted our entry before getting some well-deserved rest. He put up some links to everything here, including the demo video.

Not long after the contest was over, they killed the H100 machines and seem to have invalidated some of the API keys too, so it looks like the app is not quite working anymore, but overall it was quite fun! We did not end up winning (unsurprisingly) but I feel like I've achieved my own goals. Rowan and Nour are very driven builders who I enjoyed working with, and CodeNode is a great venue for this sort of thing. The next week, Rowan came over to my office to co-work and also dropped off some hackathon merch. I ended up passing on the merch to other people, as I felt a bit like I might be past my hackathon years now!

Homemade lip balm

I dream of closed, self-sufficient systems. A few weeks ago, I visited the London Permaculture Festival as I'm very, very interested in permaculture. There, Hendon Soap had a stand. They were selling a DIY Lip Balm Kit.

I use lip balm regularly, so it occurred to me I ought to learn about how it's made, and maybe make my own in the long run! Today I tried my hand at making it. It's not as easy as it looks! In theory you toss everything into a saucepan, put in 60g of oil (I used vegetable oil), and once everything is melted, you pour it into the tins and put those in the freezer as fast as possible so they cool rapidly (this is to avoid a grainy texture). You can also add some edible essential oils if you want a scent.

Long story short: I made a huge mess. And the stuff is quite difficult to clean. I managed to do one tin approximately right.

The rest I was mostly able to salvage and store in a little jar. When I finish that first tin, I'll re-melt that and maybe this time use a little pot with a pouring tip to avoid spilling, as well as some kind of tool for moving the very hot tins into the freezer properly! It was so hot it melted the ice in my freezer away entirely, creating a nice little indentation to hold it.

Please don't judge the ungodly amount of ice cream -- there's a heatwave coming!

The hills we live on

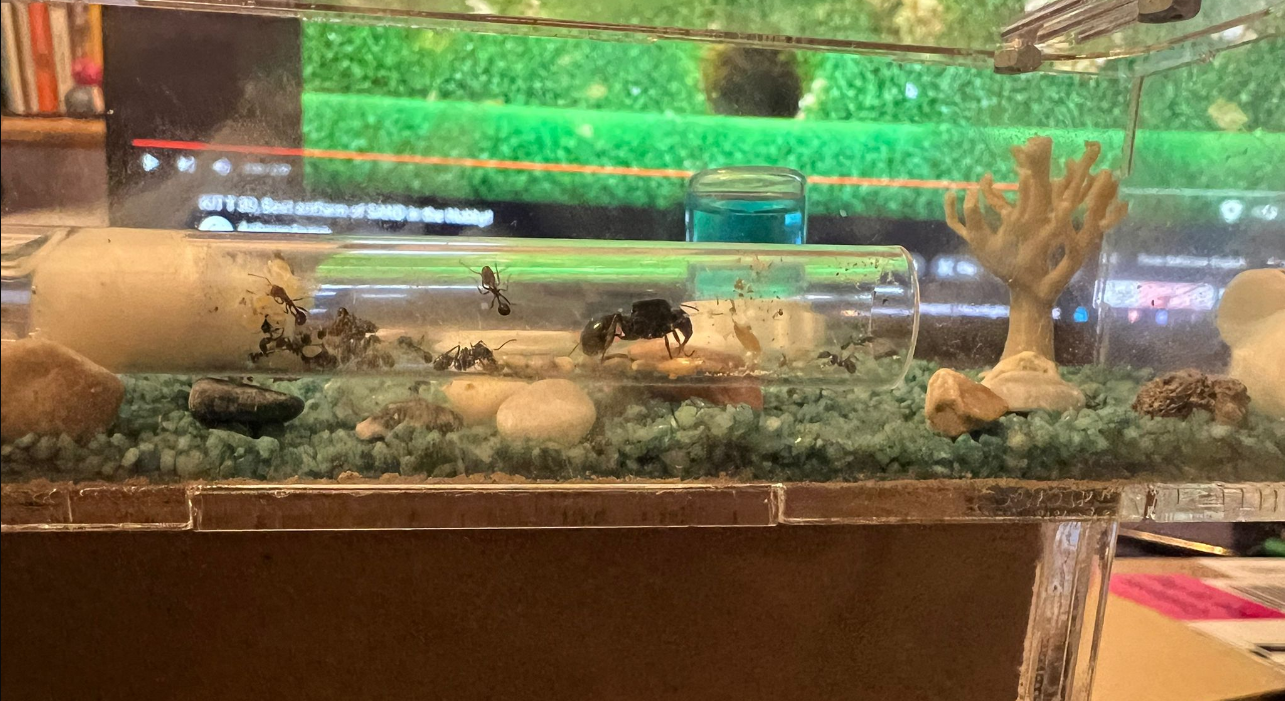

Just wanted to post a quick update on the ant farm. They're thriving and having all sorts of adventures!

Rising from the ashes

It's frightening how close I keep getting to killing my ficus -- they're supposed to be hardy! An update is long overdue.

First off, I moved the UV LEDs into an IKEA greenhouse. This made things much tidier and I have room for the upcoming project. The ficus had some strong growth up and to the left, all but touching the glass.

I also got these wooden drawers that you can kind of see in the back, with each one dedicated to a different project. The bottom one (taking up the whole width) is the horticulture one as that needs the most space.

In early May, I noticed that the leaves were getting brown. I thought it might be because the humidity was too high, so I thought I'd air it out a bit. Overnight it shrivelled up completely.

I was heartbroken and went to /r/Bonsai for advice. The only response I got was "it dried out and died". I did my best to try and get the humidity right and crossed my fingers. Almost two weeks later, I was delighted to see that it was still alive and had sprouted a new leaf! Can you see it?

And now, two months later, look at all this foliage!

I do plan to remove the dead leaves, but I wanted to wait for it to be a little stronger before I remove the glass again and whack things out of balance.

The hills we die on

This is a post I wrote a while ago, and recently decided may be worth publishing. It's a combination of anecdotal retelling and reflection. At the time, I had been reflecting on a paradox in my behaviour. I often go to extreme lengths to enact justice, sometimes with full knowledge that doing so is not worth it.

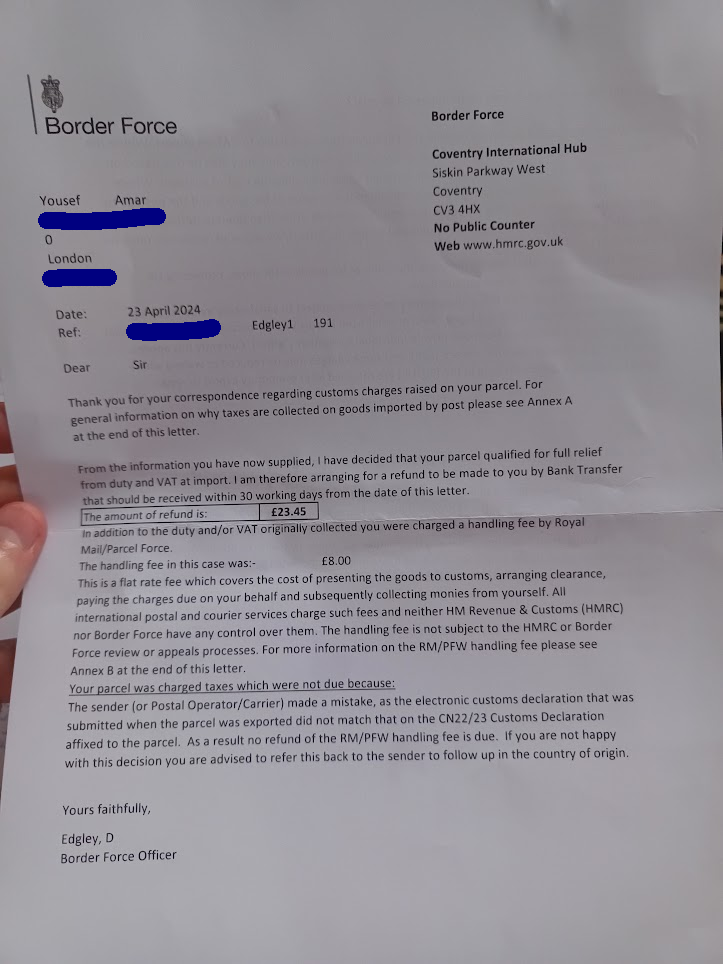

Most recently, I ordered something off of Etsy from Spain and I paid sales tax. The total value was less than £135, so I don't have to pay import tax. So I was surprised to find that my parcel was held at customs pending a payment of gift tax. Gift tax applies to gifts over £39, but of course this was not a gift. So HMRC wants £23.45 import VAT plus an £8 handling fee, which I had to pay to get the parcel no matter what.

The only way to dispute this sort of thing is to pay, get the parcel, then fill in a form, send that and the parcel label to Border Force (Coventry) by snail mail, wait for a human to review it, and finally they might decide that you should get a refund, or they might not. Things like this really get under my skin. Part of the reason I don't return to Germany is to escape this exact kind of ridiculous bureaucracy.

When things like this happen, I'm unable to let them go. The knowledge that there's something wrong in the world will live in my head rent-free, like an OCD urge, until I can resolve it. I need to actively prevent myself from getting too involved with other people's injustices (and I don't follow world news) or else I will feel like this all the time. However, when I have personally been wronged in some way, it's in my face and I can't let it go.

Now obviously, if you consider the Lime bike I rented to get to the post office, the cost of the padded brown envelope and postage, not to mention the time I spent researching the law and queuing in the post office, it's not worth the potential money I could recover from Border Force. But if I didn't do this, it would weigh on me probably forever. Glad to report that I got the refund at least!

The silliest thing I've had this itch for: my local dentist owing me £5 from when their card machine was broken and I paid cash but they had no change. I had moved away and it was not worth the trip to Fulham for them to give it back to me. I was eventually able to let it go when they promised to donate it to a specific charity. Whether they did or not, I don't care, I could wash my hands of it on the back of their promise, and erase the "weight" of that injustice from my mind.

The most serious thing I've had this itch for: recovering the deposit of a flat I once lived in. Long story short, I filed an N208 claim to take my landlord to court for the maximum legal penalty of 3x the deposit amount for this particular offence. From there, things escalated very rapidly and I negotiated an out of court settlement with the director of his agency for my full deposit (and my brother's) plus court fees. Here too I was extremely annoyed at how difficult it is to do this sort of thing in the UK, and that I needed to physically go to a specific, hard-to-reach county court to file my big binder of post-it-laced documents (painstakingly printed at my nearest library) as evidence. Trees had to die and the printer at my local library had to waste ink!

I remember struggling to decide if I should even bother negotiating at that stage, or should instead drag them to court out of spite. I felt that very few others would have the freedom or ability (financial or otherwise) to go to the lengths I did, so I had some kind of duty to teach them a lesson so they would think twice before they decide to mess with a poor clueless student in the future. I wasted hours queuing in the rain at those free first-come-first-served legal clinics to make sure I did things right.

Is this some kind of hero complex? Did I grow up watching too much Disney? Having held the metaphorical knife to their throat, I was able to release myself from this duty through the settlement, and erase the weight from my mind. But why did that weight persist for so long?

I have many examples like this, but perhaps the last one I'll mention for now is another David vs Goliath tale, starring me as David again, and British Gas as Goliath. What's notable about this is that it's a whole system/institution rather than specific incompetent/malicious individuals. This was another long saga that started when British Gas incorrectly classified my flat as a business, and had me on commercial rates rather than domestic rates.

There was never an individual person I could direct my rage at. British Gas is a giant lumbering machine inadvertently squishing things, the cogs in the machine unaware of its emergent behaviour. I did yell at some of the thickest of cogs -- British Gas employees and debt collector agents -- but overall, would you be angry at a transistor for a bug in your program?

The Energy Ombudsman eventually made them fix everything and also write me an apology letter. At the time this felt like vindication. I was ready to frame the letter; another notch on my belt in the struggle for justice. Who exactly did I beat though? I don't know the lady that signed the apology letter. I just looked her up on LinkedIn. She seems like a nice person. British Gas lumbers on.

There's not much of a conclusion to this post. I suppose it's a recognition of my sometimes irrational stubbornness. Sometimes I just can't let things go. My anger and frustration seem to spike around broken systems, rather than the actors that take advantage of those systems (the court rather than the landlord). I would love to build systems with few cogs that don't squish things.

The direction of this blog

I just got back[1] from a stretch of travel (Germany, Greece), and thought that I would do some writing while I was there. I didn't in the end. It seems like there are mainly two points of friction for me:

- My authoring tools/workflows still aren't that great, to where writing feels like a hassle

- I'm too concerned about privacy and general opsec, where "principle of least knowledge" clashes with writing openly for the public

I spend a lot of time communicating with people within my chat client. There are a lot of people I need to keep up with, so I've become quite efficient at it. It makes sense to incorporate writing blog posts into the same workflows. I intend to have Sentinel be my post poster. Maybe I write a message, tag it with some emoji reactions, and Sentinel takes it from there.

I did actually do quite a bit of writing since my last post, but for the second reason, I decided not to publish any of that. I might try to do so, but part of me would rather go fully stealth and cut off online presence where I can, and limit it where I can't (e.g. for work).

I wonder if there's a way to use LLMs to build "unit tests" for different personas reading my blog, every time I'm about to publish something. It could also try to make inferences from posts to guard against any future personas that I cannot yet predict. Maybe a tool like this could be useful for people who are not very politically well-versed and want to make sure they don't make strong claims that could land them in hot water later.
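To make the idea a bit more concrete, here is a minimal sketch of what such persona "unit tests" could look like. Everything in it is an assumption for illustration: `ask_llm` is a hypothetical callable wrapping whatever model is used, the `Persona` fields and the PASS/FAIL reply convention are made up, and a real version would need a more robust way of parsing the model's answer.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Persona:
    name: str     # who is reading the post
    concern: str  # what this reader would be looking for


def review_prompt(persona: Persona, draft: str) -> str:
    """Build the instruction sent to the model for one persona."""
    return (
        f"You are reading a blog post as: {persona.name}.\n"
        f"You care about: {persona.concern}.\n"
        "Reply PASS if nothing in the post is a problem for you, "
        "otherwise reply FAIL followed by the reason.\n\n"
        f"POST:\n{draft}"
    )


def run_persona_tests(draft: str,
                      personas: list[Persona],
                      ask_llm: Callable[[str], str]) -> dict[str, str]:
    """Return a mapping of persona name -> model reply for every
    persona whose reply does not start with PASS."""
    failures: dict[str, str] = {}
    for persona in personas:
        reply = ask_llm(review_prompt(persona, draft)).strip()
        if not reply.upper().startswith("PASS"):
            failures[persona.name] = reply
    return failures
```

The publishing step would then refuse to post (or ask for confirmation) whenever `run_persona_tests` returns a non-empty dict, much like a failing test suite blocking a deploy.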

For now maybe I'll simply go down my unpublished posts and reconsider publishing things I've already written. It helps me to write down complex thoughts to provide them with structure, and communicate them to others without rambling or getting lost. I do that over text too unfortunately, but even more when speaking! There is one post I plan to write like that soon.

As a rule of thumb, when I repeat myself more than twice on a topic (e.g. when explaining something to someone), I decide to write a post about it, as that's enough evidence for me that other people might benefit from it, and I can then send the post to the next person who asks me the same question. There's a post like that coming soon too.

At least for those two types of posts I don't need to overthink much. I've also decided to start writing an autobiography, at different levels of granularity. I do not intend to publish this (maybe only individual anecdotes), but I think it will help me put my life in perspective and reflect on it. I tend to easily forget events as well, so it will help me remember.

I've also recently finished a huge migration from AWS to GCP. AWS has been my workhorse for over a decade, but has recently gotten too expensive, as the last of my AWS startup credits dried up. I have since founded another company that would be eligible for credits, but I can't redeem them on the same account either way, so a migration was inevitable. Why GCP? I have $350k of credits on there for the next two years. This website now lives on GCP too, which feels like a new beginning.

[1] That's a lie; I got back around 2 weeks ago, wrote most of this post, didn't publish it.

Rise of the messor ants

A few days ago I turned 31. Birthdays are not something I look forward to. However, Veronica gifted me an ant farm kit, which is exciting. I have always been fascinated by ants and the emergent behaviour of ant colonies, but never had the chance to observe that IRL through glass panes. In our flat in Egypt, ants were very common, so I would sometimes intentionally leave a bit of bread out on the kitchen counter just to watch them slowly take it apart and carry crumbs in a line back into the walls from whence they came.

When I was little, I also remember seeing ant farms that use a special type of transparent gel rather than sand, so you could see everything the ants were doing. I think I saw one on ThinkGeek, a now defunct online store, and wished I could have it.

Well, today is the day that I can say I have a proper ant farm right here in my room!

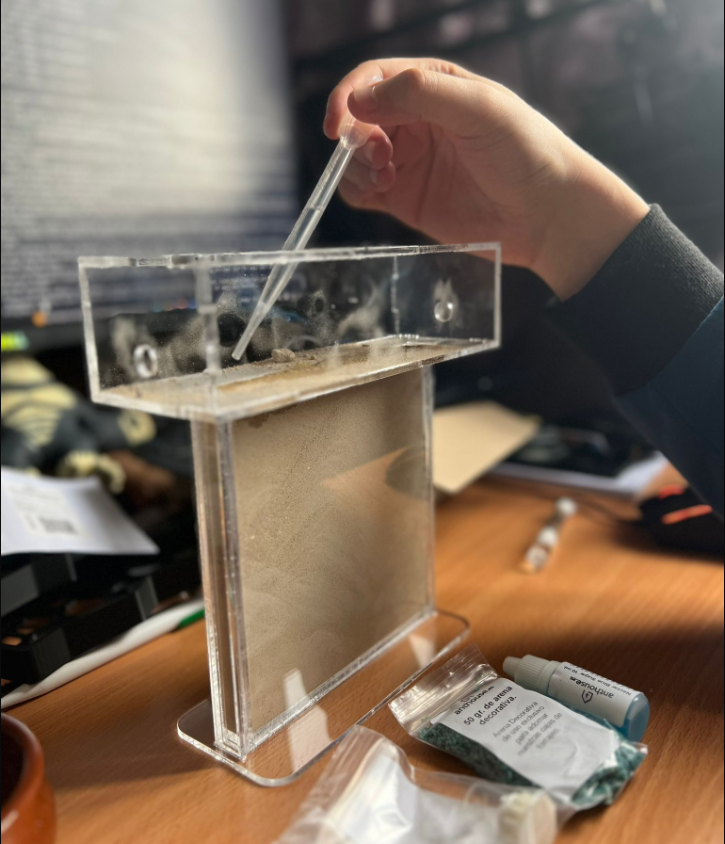

The kit was surprisingly compact.

The fledgling colony made the long trip from Spain in a little test tube. Her majesty, Queen Gina, and her children, had water soaking in through the cotton on the left, some seeds as snacks while travelling, and took care of the larvae in the back of their little temporary home.

Before they could move in to their new home, I first had to make it nice and cosy for them. I started off by slowly soaking the sand over the course of the day, then decorating the top of their home. I also added their little sugar water dispenser and seed bowl. Finally, I dug a hole for them in the centre as a starting point.

I then set them free on their new home, but they stayed in the test tube for a while to build up the courage. One little brave ant ventured out, but was bringing back blue pebbles and clogging up the test tube once more. She really loved her pebbles. I later read that this is normal behaviour in order to make the queen feel more comfortable.

The moment I stopped watching they very quickly moved into their new home. I came to find the test tube empty and removed it. They had started digging and also moving the sesame seeds that Veronica had left them.